The AI Dubbing Quality Control Framework India 2026: A Strategic Guide for OTT Excellence

Key Takeaways

- India’s OTT growth and new AI governance demand a standardized, auditable QC framework for AI dubbing.

- Five core pillars—lip sync, emotional authenticity, cultural adaptation, translation fidelity, and voice identity—define measurable quality.

- Human-in-the-loop reviews with automated scoring (LSE-D/LSE-C, isochrony) align with Netflix India tolerances.

- A regional rubric and MQM-based checks ensure local cultural precision across Hindi, Tamil, Bengali, and Telugu.

- Tools like Studio by TrueFan AI enable scalable, governance-ready localization with measurable ROI.

The AI dubbing quality control framework India 2026 is no longer a luxury for Over-The-Top (OTT) platforms; it is a fundamental requirement for survival in a market where the Media and Entertainment (M&E) sector is projected to reach INR 3.1 trillion by 2026. As Indian audiences increasingly demand high-quality content in their native languages—with regional language consumption expected to account for 60% of total OTT viewership by late 2026—the pressure to localize at scale without sacrificing emotional or technical integrity has reached a tipping point.

For localization managers at Netflix India, Amazon Prime Video, Disney+ Hotstar, and regional giants like Zee5 and Sun NXT, the challenge is twofold: maintaining the creative "soul" of the original performance while adhering to the rigorous new AI governance guidelines issued by the Press Information Bureau (PIB) and the Ministry of Electronics and Information Technology (MeitY). By 2026, the industry has moved past the "experimental" phase of AI. Today, success is measured by auditable metrics, cultural precision, and a "human-in-the-loop" (HITL) approach that ensures every dubbed line resonates as deeply in Telugu or Bengali as it did in the original English or Spanish.

This guide provides a comprehensive, enterprise-grade framework for implementing an AI dubbing quality control (QC) system tailored for the Indian landscape. We will explore the specific video localization QA metrics for 2026, lip sync testing protocols aligned with Netflix India standards, and the cultural nuances that define premium localization.

1. The 2026 Imperative for AI Dubbing Quality Control in India

In 2026, the Indian OTT landscape is defined by "The Year of Accountability." According to recent industry forecasts, India's OTT subscriptions are expected to cross 150 million users, making it one of the most competitive digital markets globally. However, this growth comes with increased regulatory scrutiny. The 2026 AI Governance Guidelines for India emphasize traceability, human oversight, and risk controls in media AI to prevent misinformation and ensure cultural sensitivity.

Why a Standardized Framework is Critical

Without a documented, repeatable system, AI dubbing risks becoming a liability. Poor lip sync and emotional mismatches don't just reduce watch time; they increase churn. Worse, cultural missteps in a diverse nation like India can trigger social media backlash or regulatory takedowns.

Platforms like Studio by TrueFan AI enable content teams to navigate these risks by providing a "walled garden" approach to AI generation. This ensures that while the speed of localization increases, the governance—including real-time profanity filters and watermarked outputs for traceability—remains uncompromised.

The Governance Landscape

The 2026 posture for Indian media requires:

- Traceability: Every AI-generated voice must be traceable to its source model and training data.

- Human Oversight: Automated monitoring must be complemented by human review for high-stakes content (e.g., political dramas or religious themes).

- Risk Mitigation: Systems must include automated "red-flagging" for content that violates regional sensitivities.

Source: FICCI-EY M&E Report 2024-2026; PIB India AI Governance Guidelines 2025.
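The three governance requirements above can be captured as one audit record per dubbed asset. The following is only a minimal sketch: the class name `DubAuditRecord`, every field name, and the JSON-lines log format are hypothetical choices for illustration, not something prescribed by the guidelines or by any vendor.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DubAuditRecord:
    """One traceability entry per dubbed asset (all field names are hypothetical)."""
    asset_id: str
    language: str
    voice_model_id: str   # traceability: which model produced the voice
    reviewer_id: str      # human oversight: who signed off
    red_flags: list = field(default_factory=list)  # risk mitigation: flagged sensitivities
    approved: bool = False
    reviewed_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: DubAuditRecord) -> str:
    """Serialize the record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True, ensure_ascii=False)


record = DubAuditRecord("ep01", "te", "voice-model-x", "rev-7")
log_line = to_log_line(record)
```

Keeping the record flat and append-only makes it easy to show, per asset, which model was used and who approved the result.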

2. Defining Video Localization QA Metrics 2026: The Core Pillars

To achieve "Premium" status on platforms like Netflix India, AI dubbing must move beyond simple translation. The video localization QA metrics 2026 focus on five core pillars that quantify the quality of the viewing experience.

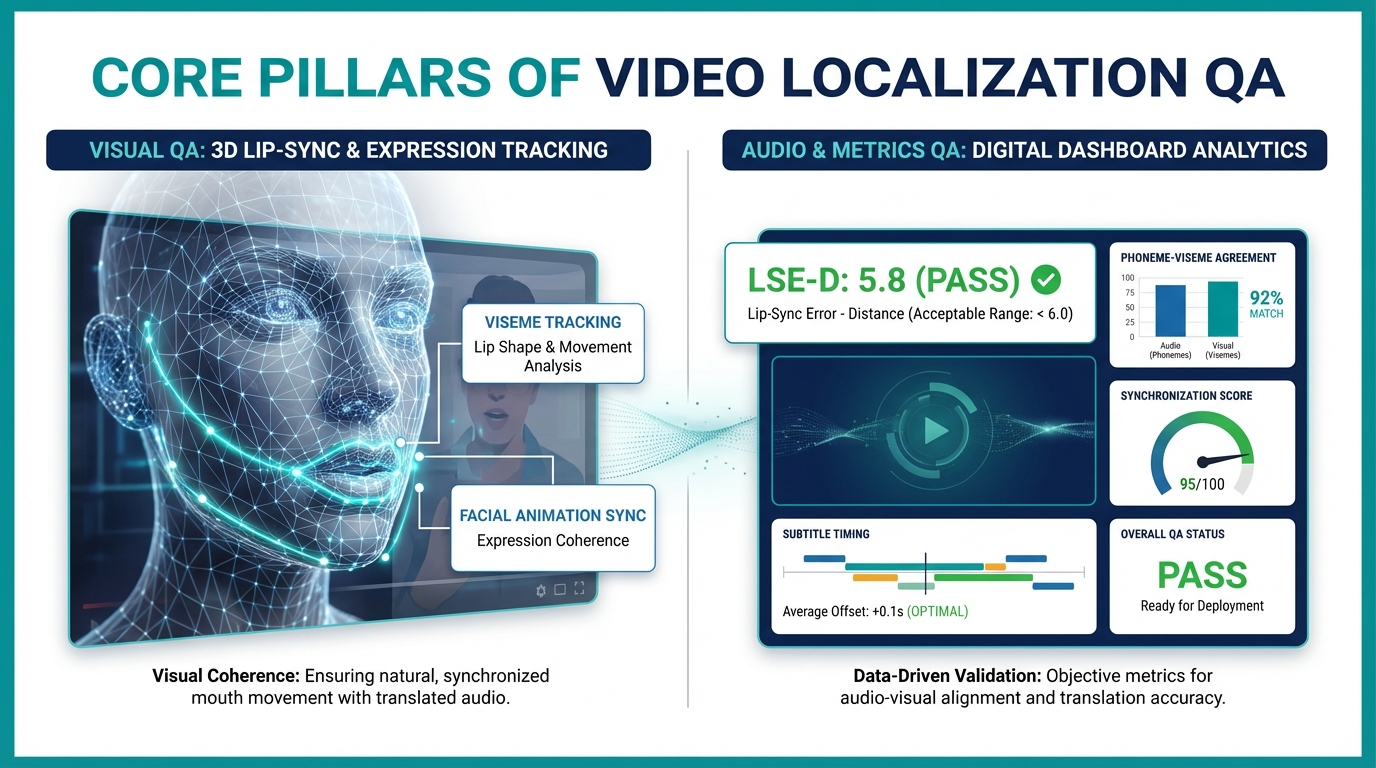

Pillar 1: Lip Sync Precision

Lip sync is the most visible indicator of dubbing quality. In 2026, we measure this using:

- LSE-D (Lip Sync Error - Distance): A metric where a lower score indicates better alignment between the mouth shapes (visemes) and the audio. For 2026, a score of ≤ 8.0 is the industry standard for general content, while close-ups require ≤ 6.0.

- LSE-C (Lip Sync Error - Confidence): The scoring model's confidence that the audio and lip movement are aligned. Higher is better, and close-ups require a score of ≥ 0.35.

- Phoneme-Viseme Agreement Ratio: This measures how accurately the visual mouth movement matches the specific sounds of the target language (e.g., the "m" sound in Hindi vs. the "o" sound in English).

Pillar 2: Emotional Authenticity

The emotional accuracy AI dubbing score is a weighted blend of:

- Classifier Agreement (50%): Does an AI emotion classifier identify the same emotion (joy, anger, sadness) in the dubbed track as it did in the original?

- Prosody Similarity (30%): Do the pitch, energy, and speech rate follow the original actor's performance?

- Human Rater Believability (20%): A subjective score from native speakers on whether the performance feels "real."
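The 50/30/20 blend above is simple arithmetic. The sketch below assumes each component has already been normalized to the range 0 to 1; the article does not say how the components are scaled, so that normalization and the names `EmotionInputs` and `emotional_accuracy` are illustrative assumptions.

```python
from dataclasses import dataclass

# Weights taken from the pillar description above.
WEIGHTS = {
    "classifier_agreement": 0.50,
    "prosody_similarity": 0.30,
    "human_believability": 0.20,
}


@dataclass
class EmotionInputs:
    classifier_agreement: float  # assumed 0..1: share of segments with matching emotion labels
    prosody_similarity: float    # assumed 0..1: similarity of pitch, energy, and speech rate
    human_believability: float   # assumed 0..1: native-speaker rating rescaled to 0..1


def emotional_accuracy(inputs: EmotionInputs) -> float:
    """Return the weighted blend of the three components."""
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = getattr(inputs, name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
        total += weight * value
    return total


score = emotional_accuracy(EmotionInputs(0.9, 0.8, 0.7))
# 0.50 * 0.9 + 0.30 * 0.8 + 0.20 * 0.7 = 0.83 (up to floating-point rounding)
```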

Pillar 3: Cultural Adaptation Quality

This pillar ensures that the content feels "local," not just "translated."

- Zero Critical Breaches: No violations of religious, caste, or political sensitivities.

- Honorific Adherence: Correct use of "Tu/Tum/Aap" in Hindi or equivalent markers in Tamil and Telugu based on character relationships.

Pillar 4: Translation Fidelity

Using a video translation quality measurement system based on the Multidimensional Quality Metrics (MQM) framework, we track:

- Timing Feasibility: Does the translated line fit within the original "lip-flap" window?

- Pronunciation Accuracy: Are named entities (places, people) pronounced correctly in the regional dialect?

Pillar 5: Voice Identity and Authenticity

This measures the consistency of the "casting." Studio by TrueFan AI's 175+ language support and AI avatars allow for a consistent voice identity across multiple languages, ensuring that a character's "timbre" remains recognizable whether they are speaking Marathi or Malayalam.

3. Lip Sync Testing Protocols & Netflix India Alignment

Netflix India has set the gold standard for dubbing in the subcontinent. Their guidelines emphasize that "creative excellence" is non-negotiable. To align with these standards, the AI voice sync testing methodology must be rigorous and multi-layered.

Step-by-Step Protocol for 2026

- Sampling Design: Do not test the entire film. Prioritize "hero scenes"—dialogue-heavy close-ups, emotional climaxes, and fast-paced comedic beats.

- Automated Pre-Check: Use AI tools to generate a "sync heatmap." This identifies segments where the LSE-D exceeds 8.0 or where isochrony (timing) drift occurs across shot boundaries.

- Dynamic Thresholding: Apply stricter tolerances for close-ups (where the mouth is the focus) compared to wide shots or "over-the-shoulder" shots.

- Human-in-the-Loop (HITL) Review: The worst-performing 10% of segments identified by the AI are sent to human linguists. They decide if the segment needs a "re-take" (re-synthesis) or if the timing script needs adjustment.
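The pre-check, dynamic thresholding, and HITL steps above can be sketched as follows. The LSE-D limits come from Pillar 1. The shot-type labels, the `Segment` type, and ranking "worst" by the ratio of LSE-D to its limit are assumptions made for this example. The LSE-D values are assumed to come from an upstream lip-sync scorer.

```python
import math
from dataclasses import dataclass

# LSE-D ceilings from Pillar 1 (lower is better); the keys are hypothetical labels.
LSE_D_LIMIT = {"close_up": 6.0, "general": 8.0}


@dataclass
class Segment:
    segment_id: str
    shot_type: str  # "close_up" or "general"
    lse_d: float    # produced by an upstream lip-sync scorer


def flag_segments(segments):
    """Automated pre-check: segments whose LSE-D exceeds the limit for their shot type."""
    return [s for s in segments if s.lse_d > LSE_D_LIMIT[s.shot_type]]


def hitl_queue(segments, fraction=0.10):
    """Select the worst-performing fraction of segments for human review.

    Segments are ranked by LSE-D relative to their own limit, so a close-up
    slightly over 6.0 can outrank a wide shot slightly over 8.0.
    """
    if not segments:
        return []
    ranked = sorted(
        segments, key=lambda s: s.lse_d / LSE_D_LIMIT[s.shot_type], reverse=True
    )
    count = max(1, math.ceil(len(ranked) * fraction))
    return ranked[:count]


segments = [
    Segment("s01", "close_up", 6.8),
    Segment("s02", "general", 7.1),
    Segment("s03", "general", 8.4),
    Segment("s04", "close_up", 5.2),
]
flagged = flag_segments(segments)  # s01 and s03 exceed their limits
queue = hitl_queue(segments)       # one segment: the worst relative to its limit
```

Ranking relative to the limit is one way to express the dynamic thresholding idea; a team could equally rank on raw LSE-D within each shot type.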

The "Netflix Tolerance"

For 2026, the accepted isochrony offset (the delay between the visual lip movement and the sound) for premium drama falls in the 80–120 millisecond range, with 120 ms as the upper limit applied at the automated gate. Anything above 200 ms is considered a "Hard Fail" and requires immediate remediation.

Source: Netflix Partner Help: Dubbing Creative Guidelines.
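The tolerance bands described above map onto a small classifier. The text only defines the 120 ms limit and the 200 ms "Hard Fail" line, so the "review" label for offsets in between is an assumption of this sketch. Treating early and late audio symmetrically is also an assumption.

```python
PASS_LIMIT_MS = 120.0       # upper end of the accepted 80-120 ms band
HARD_FAIL_LIMIT_MS = 200.0  # anything above this is a Hard Fail


def classify_offset(offset_ms: float) -> str:
    """Classify an audio/visual offset in milliseconds.

    The sign of the offset (audio early vs. late) is ignored here.
    """
    magnitude = abs(offset_ms)
    if magnitude <= PASS_LIMIT_MS:
        return "pass"
    if magnitude <= HARD_FAIL_LIMIT_MS:
        return "review"  # assumed label: the article does not name this band
    return "hard_fail"
```

For example, `classify_offset(95)` returns `"pass"`, while `classify_offset(-240)` returns `"hard_fail"`.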

4. Cultural Adaptation & Translation Fidelity: The Regional Rubric

India is not a monolith. A cultural adaptation dubbing QA process that works for a Hindi audience will fail in Tamil Nadu or West Bengal if it doesn't account for regional nuances.

The Regional Language Rubric

| Language | Key Focus Area | Example of 2026 QA Check |
|---|---|---|
| Hindi | Social Register | Ensuring "Aap" is used for elders/superiors even if the English "You" is neutral. |
| Tamil | Dialect & Honorifics | Distinguishing between Chennai Tamil and Madurai Tamil based on character background. |
| Bengali | Politeness Markers | Correct use of "Tui/Tumi/Apni" to reflect intimacy or distance. |
| Telugu | Idiomatic Flow | Replacing English idioms with culturally relevant Telugu proverbs (Samethalu). |

Video Translation Quality Measurement (MQM)

In 2026, we utilize a specialized MQM for dubbing. Unlike text translation, dubbing MQM prioritizes "Lip-Flap Feasibility."

- Critical Error: A translated line that runs 2 seconds longer than the original line's lip-flap window, causing a visual-audio mismatch.

- Major Error: Incorrect pronunciation of a famous Indian landmark or historical figure.

- Minor Error: A slight inconsistency in the use of a character's nickname.
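A severity-weighted tally is one common way to turn these three error classes into a single number. The weights below (1, 5, 25) are an assumption chosen for illustration, since the article does not specify any. The helper names and the per-100-lines normalization are also hypothetical.

```python
from collections import Counter
from typing import Iterable

# Assumed severity weights for illustration only; tune them to your own rubric.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 25}


def mqm_penalty(errors: Iterable[str]) -> int:
    """Sum the weighted penalties for a list of severity labels."""
    counts = Counter(errors)
    unknown = set(counts) - set(SEVERITY_WEIGHTS)
    if unknown:
        raise ValueError(f"unknown severities: {sorted(unknown)}")
    return sum(SEVERITY_WEIGHTS[sev] * n for sev, n in counts.items())


def penalty_per_100_lines(errors: Iterable[str], line_count: int) -> float:
    """Normalize the penalty so episodes of different lengths are comparable."""
    if line_count <= 0:
        raise ValueError("line_count must be positive")
    return 100 * mqm_penalty(errors) / line_count


def overruns_lip_flap(dub_duration_s: float, window_s: float, limit_s: float = 2.0) -> bool:
    """Critical-error check: the dubbed line runs limit_s or more past its window."""
    return dub_duration_s - window_s >= limit_s
```

As a usage example, `mqm_penalty(["minor", "minor", "major"])` yields 7 under these assumed weights, and `overruns_lip_flap(5.5, 3.0)` is `True`.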

Solutions like Studio by TrueFan AI demonstrate ROI through their ability to handle these complexities at scale. By automating the initial translation and lip-syncing while allowing for easy manual overrides in the "In-Browser Editor," platforms can reduce localization costs by up to 40% while maintaining a 98% terminology accuracy rate.

5. Regional Language Dubbing QC Tools & Benchmarks

To manage localization across 10+ languages simultaneously, OTT platforms require a robust stack of regional language dubbing QC tools.

The 2026 Ops Stack

- Automated Lip-Sync Scorers: Tools that provide real-time LSE-D/LSE-C metrics.

- Forced Alignment Tools: Ensuring that the audio file perfectly matches the timestamped script for Hindi, Tamil, Telugu, etc.

- Indian-Language ASR for Back-Translation: Using Automatic Speech Recognition to convert the dubbed audio back into text, then comparing it to the original script to check for "meaning drift."

- Pronunciation Validators: Digital lexicons that flag mispronunciations of regional names or technical terms.
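The back-translation check in the stack above can be illustrated with a deliberately crude proxy. This sketch assumes the ASR and translation steps have already produced plain text. It compares token overlap using Python's standard `difflib`, which measures lexical rather than semantic similarity. The 0.25 flag threshold is an arbitrary assumption; a production system would more likely use a semantic similarity model.

```python
from difflib import SequenceMatcher

DRIFT_FLAG_THRESHOLD = 0.25  # assumed value for illustration only


def meaning_drift(reference_text: str, back_translated_text: str) -> float:
    """Return 1 minus the token-level similarity ratio.

    0.0 means the token sequences are identical; values near 1.0 mean
    almost nothing matches. This is a lexical proxy, not a semantic one.
    """
    reference_tokens = reference_text.lower().split()
    candidate_tokens = back_translated_text.lower().split()
    ratio = SequenceMatcher(None, reference_tokens, candidate_tokens).ratio()
    return 1.0 - ratio


def needs_review(reference_text: str, back_translated_text: str) -> bool:
    """Flag a line for a linguist when drift exceeds the assumed threshold."""
    return meaning_drift(reference_text, back_translated_text) > DRIFT_FLAG_THRESHOLD


drift = meaning_drift("He died last night", "He went home last night")
```

In this example three of the tokens still match, so the drift comes out at roughly one third and the line would be flagged for review.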

The Dubbing Quality Benchmark OTT India

In 2026, leading platforms use a "Gold Set": a collection of 150 scenes across genres (Action, Drama, Kids) that have been dubbed by top-tier human voice actors. This Gold Set serves as the benchmark. AI-dubbed content is regularly tested against this set to ensure that its dubbing authenticity score remains above 0.80 (where 1.0 is indistinguishable from a human performance).

Source: CIO.inc: India's AI Governance Driving Scalable AI.
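A Gold Set comparison can be tracked per genre so that a weak genre is not hidden by a strong overall average. The sketch below takes authenticity scores as given inputs, because the article does not define how they are computed. The per-genre breakdown and the function names are assumptions.

```python
from statistics import mean

AUTHENTICITY_FLOOR = 0.80  # benchmark level quoted above; scores must stay above it


def genre_means(scores):
    """scores: iterable of (genre, authenticity_score) pairs. Returns the mean per genre."""
    by_genre = {}
    for genre, score in scores:
        by_genre.setdefault(genre, []).append(score)
    return {genre: mean(values) for genre, values in by_genre.items()}


def genres_below_floor(scores, floor=AUTHENTICITY_FLOOR):
    """List genres whose mean score is not above the floor."""
    return sorted(g for g, m in genre_means(scores).items() if m <= floor)


sample = [
    ("Action", 0.86), ("Action", 0.82),
    ("Drama", 0.79), ("Drama", 0.77),
    ("Kids", 0.90),
]
failing = genres_below_floor(sample)  # only Drama averages below 0.80
```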

6. Implementation Roadmap & Acceptance Criteria

Implementing an AI dubbing quality control framework India 2026 requires a phased approach.

90-Day Implementation Roadmap

- Days 0–30: Foundation. Set up the toolchain. Collect baselines for Hindi and Tamil. Define your "Hard Fail" and "Soft Fail" criteria.

- Days 31–60: Expansion. Add Telugu, Bengali, and Marathi. Pilot the framework on one "Original" series and one licensed title. Tune your thresholds based on genre (e.g., allow more leeway for Kids' animation than for Period Drama).

- Days 61–90: Full Integration. Publish your internal dubbing quality benchmark OTT India. Integrate automated QC gates into your CI/CD pipeline so that no file is delivered to the platform without a passing score.

AI Dubbing Acceptance Criteria: The Pass/Fail Gate

- Gate 1 (Automated): LSE-D ≤ 8.0; Isochrony offset ≤ 120ms; Zero profanity flags.

- Gate 2 (Linguistic): 95% guideline conformity for honorifics and idioms.

- Gate 3 (Technical): Integrated loudness within ±1.5 LU of the original; 4K/HD resolution compliance.

- Gate 4 (Governance): 100% metric traceability with time-stamped reviewer approvals.
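The four gates translate directly into boolean checks that a delivery pipeline could run before hand-off. The thresholds below are the ones listed above. The `QCReport` type and its field names are hypothetical. The sketch assumes the measurements arrive from upstream tools, with conformity and traceability expressed as fractions between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class QCReport:
    """Measurements gathered upstream (field names are hypothetical)."""
    lse_d: float
    isochrony_offset_ms: float
    profanity_flags: int
    guideline_conformity: float  # 0..1, honorific and idiom conformity
    loudness_delta: float        # dubbed minus original, in loudness units
    resolution_ok: bool
    traceability: float          # 0..1, share of metrics with logged provenance
    reviewer_approved: bool      # time-stamped approval exists


def evaluate_gates(report: QCReport) -> dict:
    """Return a pass/fail flag for each of the four gates."""
    return {
        "gate_1_automated": (
            report.lse_d <= 8.0
            and abs(report.isochrony_offset_ms) <= 120
            and report.profanity_flags == 0
        ),
        "gate_2_linguistic": report.guideline_conformity >= 0.95,
        "gate_3_technical": abs(report.loudness_delta) <= 1.5 and report.resolution_ok,
        "gate_4_governance": report.traceability >= 1.0 and report.reviewer_approved,
    }


def passes_all_gates(report: QCReport) -> bool:
    """A file is deliverable only when every gate passes."""
    return all(evaluate_gates(report).values())
```

Returning per-gate results rather than a single boolean lets the pipeline report which gate blocked a delivery.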

Conclusion: The Future of Localization is Measurable

By 2026, the "magic" of AI dubbing has been replaced by the "science" of Quality Control. For Indian OTT platforms to scale across the subcontinent's 22 scheduled languages and hundreds of dialects, they must move away from subjective "it sounds okay" reviews to a data-driven AI dubbing quality control framework for India in 2026.

By focusing on video localization QA metrics for 2026, adhering to lip sync testing protocols aligned with Netflix India standards, and utilizing advanced regional language dubbing QC tools, platforms can deliver content that isn't just "translated"; it is "re-created" for the Indian soul.

For more information on how to automate your localization pipeline with governance-ready AI, explore the TrueFan AI Voice Testing Methodology.

Frequently Asked Questions

How does the 2026 AI Governance policy affect dubbing?

The 2026 policy requires all AI-generated media to be "auditable." This means platforms must maintain logs of which AI models were used, who performed the human review, and how cultural sensitivities were addressed. This is particularly important for OTT content localization testing India, where regional sensitivities are high.

Can AI dubbing handle Indian songs and musical sequences?

While dialogue is highly automated in 2026, musical sequences still require a hybrid approach. The framework suggests using AI for the initial "guide track" and lip-syncing, followed by specialized human "re-mixing" to ensure the rhythmic and melodic integrity of the song is preserved.

What is the "Emotional Accuracy Score" and why is it important?

The emotional accuracy score measures how well the AI captures the "vibe" of the original performance. In a market like India, where "Masala" films rely heavily on high-energy performances, a flat or "robotic" AI voice will lead to immediate viewer rejection.

How do we handle "Hinglish" or code-switching in dubbing?

Modern regional language dubbing QC tools are now trained on code-switching datasets. The framework includes a specific check for "Natural Language Flow," ensuring that the mix of English and regional words feels authentic to how people actually speak in cities like Mumbai or Bangalore.

Is Studio by TrueFan AI suitable for high-end OTT originals?

Studio by TrueFan AI's 175+ language support and AI avatars are designed for high-volume, high-quality production. While "Hero" originals may still use a mix of human and AI, the platform is ideal for secondary characters, licensed library content, and marketing materials, providing a scalable solution that meets all 2026 Indian governance standards.

What are the "Hard Fails" in AI dubbing?

A "Hard Fail" includes any cultural red flag (e.g., using a caste-sensitive term incorrectly), a lip-sync mismatch in a close-up exceeding 200ms, or a translation error that fundamentally changes the plot (e.g., "He died" translated as "He went home").