AI dubbing quality testing framework for India: voice sync, emotion, and cultural adaptation at OTT scale

Estimated reading time: 11 minutes

Key Takeaways

- Establish a robust AI dubbing quality testing framework tailored to India to measure lip-sync, intelligibility, emotion, and culture.

- Combine objective metrics (LSE, WER, prosody) with subjective panels (MOS, cultural appropriateness) for OTT-grade outcomes.

- Automate QA with intelligent regression, dashboards, and API-first platforms like Studio by TrueFan AI.

- Operationalize governance with ISO 27001/SOC 2, loudness standards, watermarking, and auditable logs.

In the rapidly evolving landscape of Indian digital entertainment, implementing a robust AI dubbing quality testing framework has become the critical differentiator between experimental pilots and production-grade success. As Indian OTT platforms and production houses scale their content across Hindi, Tamil, Telugu, and seven other major regional languages, the need for a standardized, auditable, and measurable quality assurance (QA) system is no longer optional. This guide provides a comprehensive blueprint for establishing a framework that ensures voice sync accuracy, emotional resonance, and cultural fidelity at an enterprise scale.

1. The 2026 Landscape: Why India Needs a Dubbing QA Revolution Now

The Indian media and entertainment sector is undergoing a seismic shift. By 2026, the Indian video streaming market is projected to reach a staggering USD 74.37 billion, driven largely by the explosion of regional language consumption. Research indicates that over 80% of OTT content consumption in India will be in non-English languages by the end of 2026. This massive demand for localization has pushed traditional dubbing workflows to their breaking point, making AI-driven solutions the only viable path for high-volume delivery.

However, as Indian enterprises move from “AI experimentation” to “AI execution,” the focus has shifted toward governance and Service Level Agreements (SLAs). According to recent industry reports, 2026 is the year of “execution over experimentation,” where the ability to provide consistent, high-quality output across diverse dialects—from Madurai Tamil to Malwa Hindi—determines market leadership. Furthermore, the rise of agentic AI in India has necessitated new testing frameworks for non-deterministic systems, ensuring that AI-generated voices remain within the “guardrails” of brand safety and cultural sensitivity.

Platforms like Studio by TrueFan AI enable production houses to navigate this complexity by providing the foundational technology for multi-language generation, but the responsibility for quality assurance remains a strategic imperative for the content owners. A dedicated AI dubbing quality testing framework provides the necessary metrics, protocols, and thresholds to maintain premium OTT standards while achieving the speed and cost-efficiency of AI.

Source: Fortune Business Insights: Video Streaming Market Size 2026.

Source: CIOL: India Enterprise Tech 2026 - Execution over Experimentation.

Source: EY India: Is India Ready for Agentic AI? Outlook 2026.

2. The Core Taxonomy: AI Localization Quality Metrics & Dubbing QA

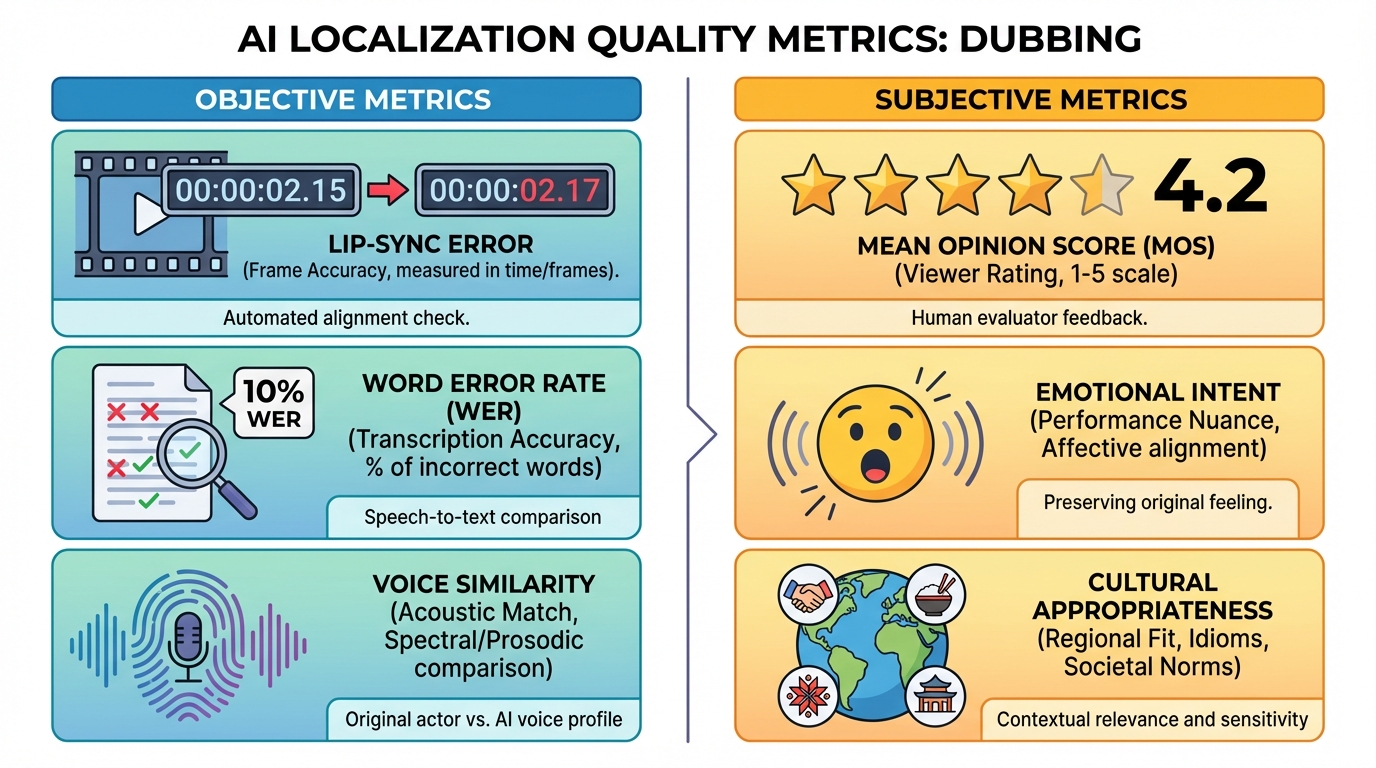

A successful framework must move beyond “it sounds good” to a data-driven taxonomy. We categorize these into objective metrics (machine-calculated) and subjective metrics (human-evaluated), specifically adapted for the Indian linguistic context.

Objective Metrics (The Data Layer)

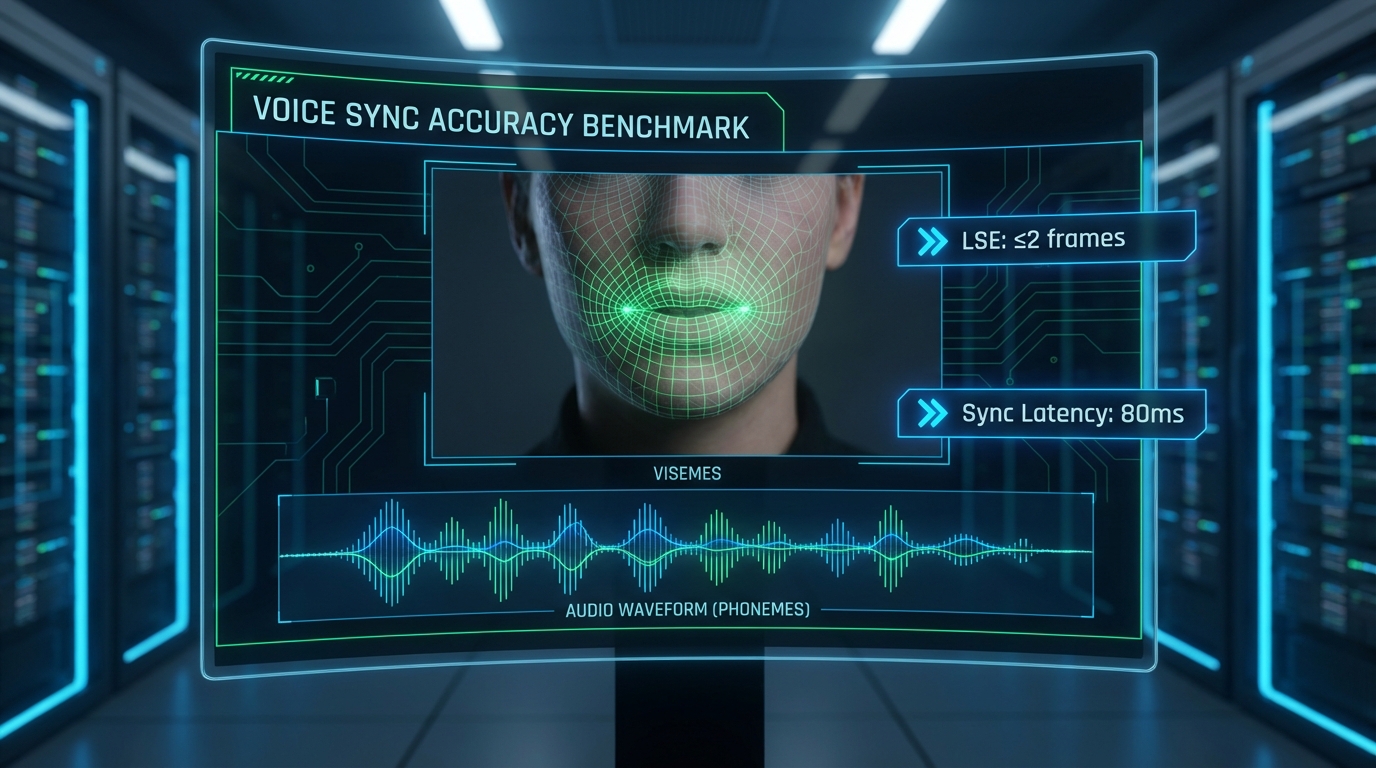

- Lip-Sync Error (LSE): Measured as the frame or phoneme alignment distance between the visual mouth movements (visemes) and the audio phonemes. For premium Indian OTT content, the target is an average LSE of ≤2 frames at 30 fps.

- Word Error Rate (WER) for Intelligibility: Utilizing advanced ASR models like AI4Bharat’s IndicWhisper, we measure the accuracy of the dubbed track against the intended script. A WER of ≤12% is the standard for scripted narrative content.

- Voice Similarity (Speaker Embedding): Using cosine similarity between the source actor's voiceprint and the AI-generated target, we ensure the “essence” of the character is preserved across languages. See AI voice cloning for Indian accents.

- Prosody and Timbre Match: Mel-Cepstral Distortion (MCD) quantifies the spectral (timbre) distance between the AI voice and the original performance, while F0 Root Mean Square Error (RMSE) quantifies how far the AI voice's pitch contour deviates from it.

- Sync Latency: The absolute audio-visual offset. For seamless playback on Indian mobile networks and smart TVs, this should stay within a budget of 80–120 ms.
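
As a rough illustration of the data layer above, the sketch below computes three of the listed quantities in plain Python: WER as word-level edit distance, voice similarity as cosine similarity between two embedding vectors, and a frames-to-milliseconds conversion. The function names are illustrative assumptions, not part of any named tool. A real pipeline would obtain the hypothesis transcript from an ASR model and the embeddings from a speaker encoder, and would normalise Indic text before scoring.

```python
import math


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level edit distance / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # Row-by-row Levenshtein distance over words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def cosine_similarity(a, b) -> float:
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero-length embedding")
    return dot / (norm_a * norm_b)


def frames_to_ms(frames: float, fps: float = 30.0) -> float:
    """Convert a frame offset to milliseconds at the given frame rate."""
    return frames * 1000.0 / fps
```

For orientation, `frames_to_ms(2)` is about 66.7 ms, so the 2-frame LSE target at 30 fps sits inside the 80–120 ms latency budget.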

Subjective Metrics (The Human Layer)

- Mean Opinion Score (MOS): A 1–5 scale where native speakers rate the naturalness of the voice. Premium content targets a MOS of ≥4.2.

- Emotional Intent Match: A panel-based assessment of whether the AI dub successfully conveys the intended emotion (e.g., sarcasm in a Hindi sitcom or grief in a Malayalam drama).

- Cultural Appropriateness: This evaluates the adherence to locale-specific conventions, such as the correct use of honorifics (e.g., “-ji” in Hindi or “Avargal” in Tamil) and the avoidance of regional taboos.
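
Panel scores are easier to compare when the mean is reported with an uncertainty estimate. The sketch below is one possible way to summarise a MOS panel. It assumes integer or float ratings on the 1–5 scale and uses a normal-approximation 95% interval, which is a simplification for small panels. The function name and the returned keys are hypothetical.

```python
import math
import statistics


def mos_summary(ratings, target=4.2):
    """Summarise 1-5 naturalness ratings from a native-speaker panel."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must lie on the 1-5 scale")
    mean = statistics.mean(ratings)
    # Normal-approximation half-width; small panels may warrant a t-interval.
    half_width = 1.96 * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return {
        "mos": mean,
        "ci95": (mean - half_width, mean + half_width),
        "meets_target": mean >= target,
    }
```

A stricter gate could require the lower bound of the interval, rather than the mean, to clear the target.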

By integrating these AI localization quality metrics, teams can create a “Quality Scorecard” for every piece of content, ensuring that the multilingual video quality assurance pipeline is both rigorous and scalable.
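
One way to picture such a scorecard is as a small record checked against the thresholds quoted in this guide. The sketch below is a minimal assumption-laden example: the class name, field names, and the `THRESHOLDS` table are illustrative, and the limits simply restate the targets given above (LSE ≤2 frames, WER ≤12%, MOS ≥4.2, SER macro-F1 ≥0.75).

```python
from dataclasses import dataclass

# (direction, limit): "max" means the value must not exceed the limit,
# "min" means it must reach at least the limit.
THRESHOLDS = {
    "lse_frames": ("max", 2.0),
    "wer": ("max", 0.12),
    "mos": ("min", 4.2),
    "ser_macro_f1": ("min", 0.75),
}


@dataclass
class QualityScorecard:
    title: str
    language: str
    lse_frames: float
    wer: float
    mos: float
    ser_macro_f1: float

    def failures(self):
        """Return the names of metrics that miss their threshold."""
        failed = []
        for name, (direction, limit) in THRESHOLDS.items():
            value = getattr(self, name)
            ok = value <= limit if direction == "max" else value >= limit
            if not ok:
                failed.append(name)
        return failed

    def passed(self) -> bool:
        return not self.failures()
```

For example, a Tamil episode with a WER of 0.15 and otherwise healthy scores would report `["wer"]` from `failures()`, which tells the reviewer where to look first.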

Source: AI4Bharat: IndicWhisper ASR Benchmarks.

Source: NASSCOM: AI Redefining Software Engineering 2026.

3. Voice Sync Accuracy Benchmark India: Lip-Sync Testing Protocols

In the Indian context, where high-energy dialogue and musical sequences are common, a voice sync accuracy benchmark for India requires a specialized dataset and protocol. Indian cinema often features rapid-fire dialogue and distinct phonetic structures, which call for tighter lip-sync tolerances.

The Testing Protocol

- Dataset Composition: A robust benchmark requires at least 10 hours of high-quality video per language, covering diverse genres (Drama, Sports, Kids, Animation). It must include dialectal variations like Madurai Tamil vs. Chennai Tamil to ensure the AI models are not biased toward “standard” accents.

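- Phoneme-to-Viseme Alignment: The framework computes the alignment between the audio phonemes and the video's lip movements with a sync-scoring model such as SyncNet, which underlies the LSE-D and LSE-C metrics introduced alongside IIIT Hyderabad’s Wav2Lip. Wav2Lip itself generates lip movements rather than measuring them.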

- Thresholds for Acceptance:

  - Average Lip-Sync Error: ≤2 frames.

  - 95th Percentile Offset: ≤4 frames.

  - Visual Artifacts: Zero tolerance for “ghosting” or “warping” around the mouth area.
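
The acceptance thresholds above translate into a simple gate. The sketch below assumes that an upstream sync scorer has already produced one signed frame offset per segment and that a separate check has counted visual artifacts. Both inputs, and the function names, are hypothetical. The 95th percentile uses the nearest-rank method.

```python
import math


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile (pct is an integer from 1 to 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def lip_sync_verdict(offsets_frames, visual_artifacts=0,
                     mean_limit=2.0, p95_limit=4.0):
    """Apply the average, 95th-percentile, and artifact thresholds."""
    if not offsets_frames:
        raise ValueError("no offsets supplied")
    magnitudes = [abs(o) for o in offsets_frames]
    mean_abs = sum(magnitudes) / len(magnitudes)
    p95 = percentile_nearest_rank(magnitudes, 95)
    accepted = (mean_abs <= mean_limit
                and p95 <= p95_limit
                and visual_artifacts == 0)
    return {"mean_frames": mean_abs, "p95_frames": p95, "accepted": accepted}
```

Reporting the mean and the 95th percentile together matters because a clip can have a low average offset while a handful of segments drift badly; the second threshold catches those.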

Research Anchor: IIIT Hyderabad

The Centre for Visual Information Technology (CVIT) at IIIT Hyderabad has been at the forefront of this research. Their “Wav2Lip” model serves as a global reference for speech-to-lip generation. Implementing these lip-sync testing protocols for India involves running automated pipelines that flag any segment where the alignment falls outside the established tolerances. See the regional language dubbing test.

Studio by TrueFan AI's 175+ language support and AI avatars leverage these advanced alignment techniques to ensure that even the most complex Indian phonetic structures are synced with high precision, reducing the manual QC burden for localization teams.

Source: IIIT Hyderabad: Wav2Lip Research & Demos.

Source: Bhaasha IIIT: Lip-Sync Demo.

4. Emotional Accuracy & Cultural Adaptation: The Regional Language QA Framework

The greatest challenge in AI dubbing is not just the “what” (the words) but the “how” (the emotion and culture). An emotional accuracy test for AI dubbing must be both objective and subjective to capture the nuance of Indian storytelling.

Objective Emotional Scoring

We utilize Speech Emotion Recognition (SER) classifiers fine-tuned for Indic languages. These models analyze the pitch contour, energy envelope, and pause durations of the dubbed track. See emotion detection in AI video marketing.

- Macro-F1 Target: ≥0.75, computed by scoring the emotion labels predicted for the dubbed track against the source emotion labels.

- Pitch Correlation: A correlation of ≥0.8 between the source actor's pitch contour and the AI's output ensures the “musicality” of the performance is preserved.
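
Both targets above reduce to short formulas. The sketch below shows macro-averaged F1 over per-segment emotion labels and a Pearson correlation between two pitch contours, written in plain Python. It assumes the labels come from an SER classifier and that the two contours have already been time-aligned and sampled at the same points; neither step is shown, and the function names are illustrative.

```python
import math


def macro_f1(source_labels, dubbed_labels):
    """Unweighted mean of per-class F1, treating source labels as truth."""
    if len(source_labels) != len(dubbed_labels):
        raise ValueError("label lists must have the same length")
    pairs = list(zip(source_labels, dubbed_labels))
    classes = sorted(set(source_labels) | set(dubbed_labels))
    scores = []
    for c in classes:
        tp = sum(1 for s, d in pairs if s == c and d == c)
        fp = sum(1 for s, d in pairs if s != c and d == c)
        fn = sum(1 for s, d in pairs if s == c and d != c)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of at least 2 points")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("a constant contour has no defined correlation")
    return cov / (sd_x * sd_y)
```

Macro averaging gives rare emotions such as grief the same weight as common ones, which is usually the point in drama content where the rare scenes carry the story.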

The Regional Language QA Framework

Cultural adaptation goes beyond translation. It involves a “Regional Language QA Framework” that checks for:

- Honorifics and Register: Does the Hindi dub use “Tu,” “Tum,” or “Aap” correctly based on the relationship between characters?

- Idiomatic Substitution: Replacing English idioms with culturally relevant Indian equivalents (e.g., “Piece of cake” becoming “Baayein haath ka khel”).

- Taboo and Sensitivity Filters: Automated and manual checks for religious, caste-based, or political sensitivities that are unique to the Indian socio-political landscape.

This level of detail ensures a dubbing authenticity scoring system that respects the intelligence and cultural identity of the regional audience. Learn more about voice cloning and emotion control in India.
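
The honorific and register check in the list above can be partly automated as a first-pass filter before human review. The sketch below is deliberately naive: it assumes romanised Hindi dialogue, a hand-maintained map of expected register per speaker and listener pair, and matches only the bare pronouns “tu”, “tum”, and “aap”. Inflected forms (such as possessives) and Devanagari text would need a proper morphological step. All names and the register labels are hypothetical.

```python
import re

# Bare second-person pronouns mapped to an illustrative register label.
PRONOUN_REGISTER = {"tu": "intimate", "tum": "familiar", "aap": "formal"}


def register_violations(lines, expected):
    """Flag lines whose pronoun does not match the expected register.

    lines:    list of (speaker, listener, text) tuples
    expected: dict mapping (speaker, listener) to a register label
    """
    violations = []
    for index, (speaker, listener, text) in enumerate(lines):
        wanted = expected.get((speaker, listener))
        if wanted is None:
            continue  # no rule defined for this pair
        for token in re.findall(r"[a-z]+", text.lower()):
            found = PRONOUN_REGISTER.get(token)
            if found is not None and found != wanted:
                violations.append((index, token, wanted))
    return violations
```

Flagged lines are candidates for a linguist to review, not automatic failures, since register shifts are sometimes intentional in a scene.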

Source: Inc42: 2026 AI Predictions for India - Cost Control and Clarity.

Source: TRAI: Recommendations on Regional Content Localization.

5. Operationalizing the Framework: Methodology, Tools, and Automation

To scale a multilingual video quality assurance pipeline, one must move away from manual “spot-checking” to an automated AI voice testing methodology.

The Automation Stack

- Ingestion & Batch Processing: Automated pipelines ingest raw footage and generate AI dubs.

- Objective Metric Suite: Tools like SyncNet for lip-sync, IndicWhisper for WER, and x-vector embeddings for voice similarity run overnight.

- Intelligent Regression Testing: Following 2026 trends in software QA, we use “intelligent regression” to only re-test segments where the AI model or the underlying script has changed, saving massive compute and human resources.

- Dashboarding: A centralized KPI dashboard rolls up scores by language, genre, and vendor.
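
The intelligent regression step above can be approximated with content fingerprints: a segment is re-tested only when its script or the model that voiced it has changed. The sketch below is one possible approach, assuming each segment is described by its script text, a model version string, and a voice identifier. These three fields and the function names are assumptions for illustration.

```python
import hashlib
import json


def segment_fingerprint(script_text, model_version, voice_id):
    """Stable hash of everything that can change a segment's dubbed audio."""
    payload = json.dumps(
        {"script": script_text, "model": model_version, "voice": voice_id},
        sort_keys=True,
        ensure_ascii=False,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def segments_to_retest(segments, previous_fingerprints):
    """Return (changed segment ids, new fingerprint map).

    segments: dict mapping segment id to (script_text, model_version, voice_id)
    previous_fingerprints: dict mapping segment id to the last stored hash
    """
    changed, current = [], {}
    for segment_id, fields in segments.items():
        fingerprint = segment_fingerprint(*fields)
        current[segment_id] = fingerprint
        if previous_fingerprints.get(segment_id) != fingerprint:
            changed.append(segment_id)
    return changed, current
```

On the first run every segment counts as changed; after that, storing the returned fingerprint map lets the nightly job skip anything untouched.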

Integration with Production Workflows

Solutions like Studio by TrueFan AI demonstrate ROI through their ability to integrate directly into these automated pipelines via robust APIs and webhooks. By using such platforms, enterprises can:

- Generate rapid variants for A/B testing.

- Access production-grade lip-sync that already meets the baseline thresholds of the framework.

- Utilize “walled garden” moderation to ensure all generated content is pre-filtered for profanity and brand safety.

This methodology ensures that the dubbing accuracy measurement tools are not just diagnostic but are integrated into the creative process itself. Explore the best AI voice cloning software.

Source: Talent500: AI Transforming Software Testing in 2026.

Source: StrategyWorks: OTT Advertising and Operations in India.

6. Governance, Compliance, and the 2026 Implementation Playbook

As India becomes a global hub for content services, the AI dubbing quality testing framework must align with international standards for security and compliance.

Compliance Standards

- ISO 27001 & SOC 2: Essential for protecting sensitive IP and actor voice data.

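- Loudness Standards: Adherence to EBU R128 (which normalises programme loudness to -23 LUFS) or to the platform's own delivery specification. This guide uses -24 LKFS as an example target; confirm the exact figure against each OTT's spec.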

- Traceability: Every AI-generated output should be watermarked and logged to prevent unauthorized deepfakes and ensure an audit trail.
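
For the logging half of traceability, a hash-chained log is one simple way to make tampering detectable: each entry's hash covers the previous entry's hash, so editing any record breaks the chain. The sketch below is a minimal illustration under that assumption and does not cover audio watermarking. The record fields shown in the usage note are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash, record):
    body = json.dumps(record, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a record, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": _digest(prev_hash, record)})
    return log


def verify_log(log):
    """Return True only if every entry still matches its chained hash."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A record might hold, for example, an asset id, target language, model version, and watermark id. In production the chain head would also need to be stored somewhere the writer cannot alter.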

The Implementation Playbook

- Phase 1 (Pilot): Select 2–3 languages (e.g., Hindi and Tamil). Establish baselines for WER and MOS.

- Phase 2 (Calibrate): Tune the weights of your dubbing authenticity scoring system. For example, in Kids' animation, “Emotional Accuracy” might be weighted higher than “Lip-Sync Precision.”

- Phase 3 (Scale): Roll out to all 10+ Indian languages. Integrate the automated QA dashboard.

- Phase 4 (Govern): Conduct quarterly audits and re-baseline thresholds as AI models evolve.
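
The calibration step in Phase 2 amounts to a weighted sum over normalised metric scores. The sketch below shows the idea with made-up weights: every number in `GENRE_WEIGHTS` is an illustrative assumption, not a recommended value, and each input score is assumed to have been rescaled to the 0–1 range beforehand.

```python
import math

# Illustrative weights only; each genre's weights must sum to 1.
GENRE_WEIGHTS = {
    "kids_animation": {"emotion": 0.40, "lip_sync": 0.20,
                       "intelligibility": 0.20, "naturalness": 0.20},
    "drama": {"emotion": 0.25, "lip_sync": 0.35,
              "intelligibility": 0.20, "naturalness": 0.20},
}


def authenticity_score(normalised_scores, genre):
    """Weighted sum of 0-1 metric scores using the genre's weight profile."""
    weights = GENRE_WEIGHTS[genre]
    if not math.isclose(sum(weights.values()), 1.0, abs_tol=1e-9):
        raise ValueError("weights must sum to 1")
    missing = set(weights) - set(normalised_scores)
    if missing:
        raise ValueError(f"missing scores: {sorted(missing)}")
    return sum(weights[name] * normalised_scores[name] for name in weights)
```

With the same inputs, a clip that is emotionally strong but loosely synced scores higher under the kids' animation profile than under the drama profile, which is the behaviour the calibration phase is meant to tune.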

By following this playbook, Indian media houses can ensure their localization efforts are not just fast, but “Studio-grade.”

Source: EY India: A Studio Called India - Content and Media Services for the World.

Conclusion

The transition to AI-driven localization is the most significant shift in Indian media since the move to digital streaming. By implementing a structured AI dubbing quality testing framework, organizations can unlock the full potential of regional markets without sacrificing the artistic integrity of their content.

The future of Indian content is regional, and the future of regional content is AI. However, the bridge between these two is a rigorous, data-backed quality framework. By adopting the metrics and methodologies outlined in this guide, Indian enterprises can lead the global charge in AI-driven localization, delivering world-class stories to every corner of the country in the language of the heart.

Strategic Summary of Metrics for 2026

| Metric Category | Key KPI | 2026 Target Threshold |
|---|---|---|
| Sync | Lip-Sync Error (LSE) | ≤ 2.0 frames (avg) |
| Intelligibility | Word Error Rate (WER) | ≤ 12% (scripted) |
| Naturalness | Mean Opinion Score (MOS) | ≥ 4.2 / 5.0 |
| Emotion | SER Macro-F1 Score | ≥ 0.75 |
| Security | Compliance | ISO 27001 / SOC 2 |

Frequently Asked Questions

What is an AI dubbing quality testing framework?

It is a repeatable, auditable system of metrics (like WER and LSE), protocols (sampling and panel reviews), and governance rules used to ensure that AI-generated dubbing meets the high standards of premium OTT platforms.

How do you run a voice sync accuracy benchmark in India across languages?

Use a diverse dataset of Indian regional content and score audio-visual alignment with a sync model such as SyncNet (the basis of the LSE metrics introduced with Wav2Lip). Measure the average frame offset and set acceptance thresholds (typically ≤2 frames on average) so that audio and video stay within tolerance.

Which dubbing accuracy measurement tools can we start with?

Enterprises should look for tools that offer ASR for WER (like IndicWhisper), speaker embedding analysis for voice similarity, and automated lip-sync validation. Studio by TrueFan AI is an excellent example of a platform that provides the high-quality generation needed to feed into these measurement tools. See the regional language dubbing test.

How do we measure emotional accuracy in AI dubbing?

Combine Speech Emotion Recognition (SER) software, which analyzes pitch and energy, with subjective panels of native speakers who rate the “intent preservation” of the scene on a 5-point scale.

What belongs in a regional language QA framework for India?

Include checklists for honorifics, idiomatic accuracy, dialectal consistency, and sensitivity filters for religious or cultural taboos specific to each Indian state.