Streaming Avatar API Real-Time Implementation India 2026: An Enterprise Integration Guide for Sub-Second, Two-Way AI Video Agents

Estimated reading time: ~16 minutes

Key Takeaways

- Sub-300ms latency is the new benchmark in India’s 5G-Advanced era, demanding WebRTC, local TURN, and adaptive bitrate for reliability.

- Compliance-by-design with RBI V-CIP and the DPDP Act 2023 is mandatory for KYC, with data residency, consent, and audit trails built into workflows.

- Orchestration across ASR → LLM → TTS with token streaming and barge-in logic is critical to deliver natural, two-way video conversations.

- Sector blueprints for banking, insurance, and e-commerce show measurable ROI via call deflection, conversion uplift, and faster onboarding.

- Vendor selection should balance visual fidelity with India-first edges, Indic language depth, WhatsApp integration, and DPDP/RBI alignment.

The landscape of digital interaction has shifted from static interfaces to dynamic, human-centric experiences, making real-time streaming avatar API implementation a critical 2026 priority for forward-thinking CTOs and product leaders in India. As enterprises across banking, fintech, and e-commerce race to deploy sub-second, two-way AI video agents, the technical requirements for low-latency streaming and regulatory compliance have never been more stringent. Platforms like Studio by TrueFan AI help organizations bridge the gap between complex AI orchestration and seamless customer experiences, turning real-time interactive AI video agents from a futuristic concept into a 2026 business reality.

1. The 2026 Landscape: Why India is the Global Hub for Real-Time AI Avatars

By early 2026, India has solidified its position as the most competitive market for real-time interactive AI video agents. This surge is driven by three converging factors: the widespread rollout of 5G-Advanced networks, a robust regulatory framework (DPDP Act 2023), and an insatiable enterprise appetite for automation that doesn't sacrifice the “human touch.”

The 2026 Data Snapshot

Recent industry data highlights the scale of this transformation:

- 5G-Advanced Penetration: By Q1 2026, 5G-Advanced coverage has reached 85% of India’s urban corridors, providing the consistent 20ms-50ms RAN latency required for fluid video conversations.

- Market Valuation: The AI Video KYC market in India is projected to reach $1.2 billion by the end of 2026, driven by mandatory digital onboarding in rural sectors.

- Latency Benchmarks: Sub-300ms end-to-end latency has become the industry standard, with 70% of enterprise AI video agents now meeting this threshold to ensure “barge-in” capability (interruptibility).

- Language Accuracy: Indic language LLM accuracy for top-tier models has improved by 40% in 2026 compared to 2024, enabling nuanced conversations in Hindi, Marathi, Tamil, and Bengali.

- Compliance Spend: Enterprises are now allocating approximately 15% of their IT budgets specifically to DPDP Act 2023 compliance audits and data residency infrastructure.

Unlike the pre-rendered video bots of 2024, a streaming avatar API implementation delivers stateful, two-way video conversations. These agents can perceive user emotions, handle interruptions gracefully, and execute complex backend tasks, such as processing a loan application or resolving a billing dispute, in real time.

Source: India AI Impact Summit 2026 (PIB)

2. India-First Reference Architecture for Low Latency AI Avatar Streaming

Implementing low-latency AI avatar streaming in India requires more than a fast API. It demands an architecture that accounts for India's network topology and device diversity.

The Media Plane: Ultra-Low Latency via WebRTC

The foundation of any real-time avatar is WebRTC (Web Real-Time Communication). To maintain a round-trip time (RTT) of under 400ms across variable 4G/5G networks, developers must implement:

- Localized TURN/STUN Servers: Global providers often route Indian traffic through offshore regions such as Singapore, or through a single domestic point of presence. For 2026 performance, enterprise-grade implementations require TURN relays co-located in Chennai, Delhi, and Mumbai to keep relay paths short.

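- Adaptive Bitrate (ABR): Given the fluctuation in Indian mobile networks, the media plane must continuously adjust bitrate, resolution, and frame rate to match available bandwidth. For H.264, offering Constrained Baseline (the H.264 profile WebRTC implementations are required to support) alongside Constrained High helps maintain compatibility with the mid-tier Android devices common in the Indian market.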

- Voice Activity Detection (VAD): To enable "barge-in," the system must detect when a user starts speaking and immediately signal the orchestration layer to stop the avatar's current stream and cancel any pending LLM and TTS output.
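The rung-selection step of adaptive bitrate can be sketched as below. This is a minimal illustration, assuming a bandwidth estimate is already available (in WebRTC the browser's congestion controller produces one, and the chosen cap would typically be applied through the sender's encoding parameters). The ladder values and the `pick_rung` helper are hypothetical and would need tuning per device class.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rung:
    height: int  # vertical resolution in pixels
    fps: int     # frames per second
    kbps: int    # target video bitrate


# Hypothetical ladder for a talking-head avatar, ordered highest to lowest.
LADDER: List[Rung] = [
    Rung(720, 30, 1500),
    Rung(540, 30, 900),
    Rung(360, 24, 500),
    Rung(240, 15, 250),
]


def pick_rung(estimated_kbps: float, headroom: float = 0.8) -> Rung:
    """Pick the highest rung that fits within `headroom` x the estimated
    bandwidth; fall back to the lowest rung on very poor links."""
    budget = estimated_kbps * headroom
    for rung in LADDER:
        if rung.kbps <= budget:
            return rung
    return LADDER[-1]
```

Keeping 20% headroom below the estimate leaves room for audio, retransmissions, and estimate error on fluctuating mobile links.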

The Intelligence Plane: Orchestrating the “Brain”

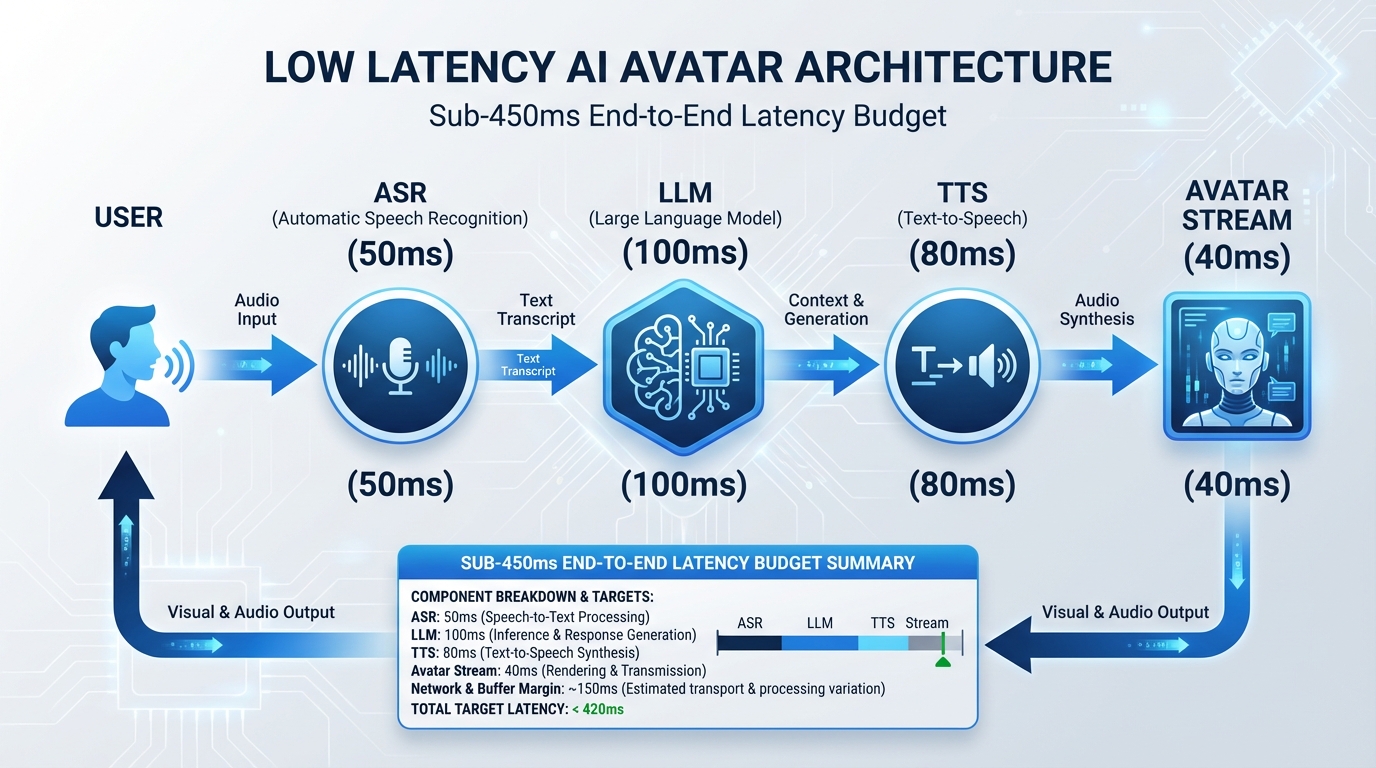

The intelligence plane handles the ASR (Automatic Speech Recognition) → LLM (Large Language Model) → TTS (Text-to-Speech) loop.

- Streaming ASR: For India, this must support “Hinglish” and code-switching.

- Token-Streaming LLMs: The LLM must begin streaming tokens to the TTS engine before the full sentence is generated.

- Real-time Video Personalization API: This layer adjusts the avatar’s visual tone and background based on the user’s CRM profile or real-time sentiment analysis.
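One common way to let TTS start before the LLM finishes is to buffer streamed tokens and flush them at phrase boundaries. The sketch below assumes the LLM client exposes tokens as a plain Python iterable. `phrases_for_tts`, the boundary set, and the 60-character cap are illustrative choices, not any vendor's API.

```python
from typing import Iterable, Iterator

# Punctuation that ends a speakable phrase, including the Devanagari
# danda (U+0964) used in Hindi and Marathi.
BOUNDARIES = (".", "?", "!", ",", "\u0964")


def phrases_for_tts(tokens: Iterable[str], max_chars: int = 60) -> Iterator[str]:
    """Group streamed LLM tokens into phrases so TTS can start speaking
    before the full response has been generated."""
    buf = ""
    for tok in tokens:
        buf += tok
        text = buf.strip()
        # Flush on a phrase boundary, or when the buffer grows too long.
        if text and (text.endswith(BOUNDARIES) or len(buf) >= max_chars):
            yield text
            buf = ""
    if buf.strip():
        yield buf.strip()  # whatever remains when the stream ends
```

Smaller phrases lower time-to-first-audio but can make prosody choppier, so the cap is a tuning knob rather than a fixed rule.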

The Orchestration Plane

This is where turn-taking logic resides. A deterministic fallback mechanism is essential: if the LLM's first-token latency exceeds 150ms, the system should trigger a "thinking" animation or a filler phrase ("Let me check that for you...") to maintain the illusion of presence. Studio by TrueFan AI's support for 175+ languages and its AI avatars provide the localization the Indian heartland needs, keeping the orchestration plane culturally and linguistically relevant.
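The 150ms fallback rule can be expressed as a race between the LLM's first token and a timer. This asyncio sketch assumes the LLM stream is an async iterator. `speak` is a hypothetical coroutine that sends a filler phrase to the TTS/avatar pipeline.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable

FILLER = "Let me check that for you..."


async def _next_token(stream: AsyncIterator[str]) -> str:
    return await stream.__anext__()


async def first_token_with_filler(
    stream: AsyncIterator[str],
    speak: Callable[[str], Awaitable[None]],
    threshold_s: float = 0.150,
) -> str:
    """Wait for the first LLM token. If it has not arrived within
    threshold_s, play a filler phrase and keep waiting."""
    first = asyncio.create_task(_next_token(stream))
    done, _ = await asyncio.wait({first}, timeout=threshold_s)
    if not done:
        await speak(FILLER)  # mask the delay instead of showing a frozen avatar
    return await first
```

The first-token task keeps running while the filler plays, so the real answer is not delayed by the fallback itself.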

Source: Banuba Video RTC SDK Performance Benchmarks 2026

3. Compliance-by-Design: RBI V-CIP and DPDP Act 2023

For the banking and fintech sectors, a real-time streaming avatar implementation is not just a UX upgrade. It is also a regulatory challenge.

RBI V-CIP (Video-Based Customer Identification Process)

The Reserve Bank of India (RBI) has strict mandates for digital onboarding. An AI video agent must be capable of:

- Liveness Detection: Ensuring the user is a live person and not a spoof/recording.

- Geo-Tagging: Capturing the precise location of the customer within Indian borders.

- Audit Trails: Every session must be timestamped and stored in a tamper-proof format for regulatory audits.

- Human-in-the-Loop (HITL): While the avatar handles the bulk of the interaction, the architecture must allow for a seamless handoff to a human officer for final verification as per current V-CIP guidelines.
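One way to make an audit trail tamper-evident is to hash-chain the session events, so that editing any earlier record invalidates every hash after it. The sketch below is an illustration, not an RBI-prescribed format. The record fields and the `append_event`/`verify_chain` helpers are hypothetical, and a production system would also anchor the latest hash in write-once storage or a signing service.

```python
import hashlib
import json
import time
from typing import List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(ts: float, event: dict, prev: str) -> str:
    # Canonical JSON (sorted keys, no spaces) so the hash is reproducible.
    body = json.dumps({"ts": ts, "event": event, "prev": prev},
                      sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_event(log: List[dict], event: dict, ts: Optional[float] = None) -> dict:
    """Append an event whose hash also covers the previous record's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    ts = time.time() if ts is None else ts
    record = {"ts": ts, "event": event, "prev": prev,
              "hash": _digest(ts, event, prev)}
    log.append(record)
    return record


def verify_chain(log: List[dict]) -> bool:
    """Recompute every hash. Edits to earlier records break the chain;
    truncating the tail is only detectable against an anchored head hash."""
    prev = GENESIS
    for rec in log:
        if rec["prev"] != prev or rec["hash"] != _digest(rec["ts"], rec["event"], prev):
            return False
        prev = rec["hash"]
    return True
```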

DPDP Act 2023 Obligations

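The Digital Personal Data Protection (DPDP) Act 2023, together with sector-specific rules, requires that enterprises:

- Obtain Valid Consent: Consent must be free, specific, informed, unconditional, and unambiguous, so the avatar must clearly explain what data is being collected (voice, video, PII) and for what purpose.

- Data Residency: Keep all personal data processed during the streaming session on servers within India. The Act itself restricts cross-border transfers only to countries the Central Government notifies, but it preserves stricter sectoral laws, and regulators such as the RBI can impose localization requirements, so in-country processing is the safe default for regulated entities.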

- Right to Erasure: Users must have a clear path to request the deletion of their video interaction logs.

Implementing these controls at the API level is non-negotiable. Solutions must provide real-time PII masking so that sensitive data such as Aadhaar numbers is redacted from logs before it reaches the storage layer.
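A minimal regex-based masking pass for Aadhaar-like numbers might look like this. It assumes digits appear as numerals in the transcript. An ASR that spells numbers out in words would need an extra normalization step. The sketch keeps only the last four digits, mirroring the format of UIDAI's masked Aadhaar. The pattern and the `mask_aadhaar` helper are illustrative and will not catch every variant.

```python
import re

# 12 digits, first digit 2-9, optionally grouped 4-4-4 with spaces or hyphens.
# Word boundaries stop it from matching inside longer digit runs.
AADHAAR_RE = re.compile(r"\b[2-9]\d{3}[ -]?\d{4}[ -]?\d{4}\b")


def mask_aadhaar(text: str) -> str:
    """Replace Aadhaar-like numbers with XXXX-XXXX-<last four digits>."""
    def _mask(match: "re.Match[str]") -> str:
        digits = re.sub(r"\D", "", match.group())
        return "XXXX-XXXX-" + digits[-4:]
    return AADHAAR_RE.sub(_mask, text)
```

Running this on the transcript before any log write means unmasked numbers never reach storage.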

Source: RBI Master Direction on KYC (V-CIP) | MeitY DPDP Act 2023 Official Guide

4. Sector Blueprints: Banking, Insurance, and E-commerce

Applications of live AI video agents vary significantly across industries. Here are the 2026 blueprints for high-impact sectors.

AI Video KYC Banking India

The goal here is self-service onboarding for every step except the final verification by a bank official, which V-CIP still requires.

- The Flow: User starts the journey on a mobile app → Avatar greets them in their native language → Avatar guides the user to hold up their PAN card → Real-time OCR validates the card → Avatar asks a series of randomized "knowledge-based" questions to help counter deepfake and replay fraud → A bank official completes the final verification → Session is signed and archived.

- Technical Note: Integration with India Stack (Aadhaar, DigiLocker) is mandatory.
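The onboarding flow above is essentially an ordered state machine. This sketch uses hypothetical step names that mirror the flow described here, including the human-officer handoff from the V-CIP section. It draws prompts with `random.SystemRandom` and is not tied to any specific KYC vendor's API.

```python
import random
from enum import Enum
from typing import List, Optional, Sequence


class Step(Enum):
    GREETING = 1
    PAN_CAPTURE = 2
    OCR_VALIDATION = 3
    RANDOM_QUESTIONS = 4
    OFFICIAL_REVIEW = 5        # human-in-the-loop step required by V-CIP
    SIGNED_AND_ARCHIVED = 6


ORDER = list(Step)  # Enum iteration follows definition order


def next_step(current: Step) -> Step:
    """Advance strictly in order, so no step can be skipped."""
    idx = ORDER.index(current)
    if idx == len(ORDER) - 1:
        raise ValueError("session already archived")
    return ORDER[idx + 1]


# Hypothetical prompt pool; real prompts would come from verified records.
QUESTION_POOL = [
    "Please confirm your date of birth.",
    "Please state the city in your registered address.",
    "Please turn your head slowly to the left.",
    "Please blink twice.",
    "Please read out the number shown on screen.",
]


def pick_questions(pool: Sequence[str], k: int = 3,
                   rng: Optional[random.Random] = None) -> List[str]:
    """Sample k distinct prompts so a pre-recorded video cannot anticipate them."""
    rng = rng or random.SystemRandom()
    return rng.sample(list(pool), k)
```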

Insurance Consultation and Claims

In 2026, insurance companies use real-time avatar customer support to handle FNOL (First Notice of Loss).

- The Flow: A customer records a video of their car damage → The avatar analyzes the video in real-time → The avatar explains the coverage details and provides an instant repair estimate → The avatar offers a “rider” or policy upgrade based on the claim context.

- ROI Factor: This reduces the claim processing time from days to minutes.

E-commerce: The Interactive Sales Avatar API

E-commerce platforms are moving away from chatbots to interactive sales avatar API implementations.

- The Flow: A shopper on a product page for a high-end smartphone clicks “Talk to an Expert” → A photorealistic avatar appears → The avatar performs a live demo of the phone’s camera features → The avatar checks real-time inventory and applies a personalized coupon code.

- Personalization: The avatar’s appearance can be dynamically swapped to match the shopper’s demographic, increasing trust and conversion rates.

Source: TrueFan AI: Two-Way Conversation AI Best Practices

5. Vendor Deep Dive: HeyGen Streaming API vs. D-ID vs. Localized Solutions

Choosing the right partner for a streaming avatar project in India involves balancing global innovation with local infrastructure.

HeyGen Streaming API (The LiveAvatar Shift)

HeyGen has transitioned its Interactive Avatar API to the "LiveAvatar" framework.

- Pros: Exceptional visual fidelity and a robust SDK for WebRTC.

- Cons: Higher latency for Indian users if traffic isn't routed through local edges; pricing is often in USD, which can be a hurdle for mid-market Indian firms.

- 2026 Context: Enterprises must complete their migration to the LiveAvatar API by March 31, 2026, to avoid service disruptions.

D-ID Streams

D-ID remains a powerhouse in the "Talks" and "Streams" space.

- Pros: Low-bitrate optimization makes it ideal for rural India where 4G might be spotty.

- Cons: Limited out-of-the-box support for complex Indic dialects compared to localized players.

- Technical Surface: Their API allows for fine-grained control over “expressions,” which is vital for high-stakes banking interactions.

The Rise of Localized Solutions

For many Indian enterprises, a “global-only” approach fails on data residency and language nuances. This is where the integration of specialized Indian platforms becomes vital. Solutions like Studio by TrueFan AI demonstrate ROI through their deep integration with the Indian ecosystem, including WhatsApp Business API and local cloud providers. By offering a “walled garden” approach to content moderation and 100% clean compliance records, they address the specific security concerns of Indian regulated entities.

| Feature | HeyGen LiveAvatar | D-ID Streams | Studio by TrueFan AI |
|---|---|---|---|
| India Data Residency | Limited | Limited | Full Support |
| Indic Language Depth | Moderate | Moderate | High (175+ Languages) |
| WhatsApp Integration | Via 3rd Party | Via 3rd Party | Native / API-First |
| Latency (India Edge) | Variable | Variable | Optimized for India |
| Compliance Focus | Global | Global | RBI / DPDP Focused |

Source: HeyGen Migration Guide | D-ID Real-Time Technology Explainer

6. Enterprise Avatar Integration Guide: From POC to 2026 Scale

Scaling a low latency avatar implementation requires a rigorous, multi-phase engineering approach.

Phase 1: Discovery and Latency Budgeting

Before writing code, define your “Latency Budget.” A typical 2026 budget for a 5G-Advanced user in India looks like this:

- ASR (Speech-to-Text): 50ms – 100ms

- LLM (First Token): 100ms – 150ms

- TTS (Text-to-Speech): 80ms – 120ms

- Avatar Render & Stream: 40ms – 60ms

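- Total E2E Latency: < 450ms (targeting 300ms for "Elite" CX). The component ranges above sum to roughly 270–430ms, which leaves little headroom under the 450ms ceiling.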
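The budget is simple arithmetic, but encoding it lets you check each measured turn automatically. The stage names and the `assess` helper below are hypothetical. The per-stage ranges, the 450ms ceiling, and the 300ms target come from the list above.

```python
from typing import Dict, List, Tuple

# Per-stage budget in milliseconds: (lower bound, upper bound).
BUDGET_MS: Dict[str, Tuple[int, int]] = {
    "asr": (50, 100),
    "llm_first_token": (100, 150),
    "tts": (80, 120),
    "render_stream": (40, 60),
}


def budget_range(budget: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Best-case and worst-case totals if every stage stays within its range."""
    return (sum(lo for lo, _ in budget.values()),
            sum(hi for _, hi in budget.values()))


def assess(measured: Dict[str, int], target_ms: int = 300,
           ceiling_ms: int = 450) -> Tuple[str, List[str]]:
    """Classify one measured turn and list stages that exceeded their upper bound."""
    total = sum(measured.values())
    over = [stage for stage, ms in measured.items() if ms > BUDGET_MS[stage][1]]
    if total <= target_ms:
        verdict = "elite"
    elif total < ceiling_ms:
        verdict = "within budget"
    else:
        verdict = "over budget"
    return verdict, over
```

Reporting the offending stages, not just the total, points QA directly at the component that broke the budget.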

Phase 2: Network and Media Setup

Provision your TURN servers. If your users are primarily in South India, your primary relay should be in Chennai. Implement “Echo Cancellation” and “Automatic Gain Control” (AGC) at the SDK level to handle the noisy environments typical of Indian public spaces.
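You can choose the primary relay from measured round trips instead of assuming it from geography alone. The sketch below assumes the client has already collected RTT samples to each candidate relay, for example from ICE candidate-pair statistics. The relay labels and the `rank_relays` helper are placeholders.

```python
import statistics
from typing import Dict, List, Sequence


def rank_relays(rtt_samples_ms: Dict[str, Sequence[float]]) -> List[str]:
    """Order relays by median RTT: the first entry is the primary, the rest
    are fallbacks. The median resists one-off spikes on mobile networks.
    Relays with no samples are skipped."""
    measured = {relay: s for relay, s in rtt_samples_ms.items() if s}
    return sorted(measured, key=lambda relay: statistics.median(measured[relay]))
```

With a South India user base, this would usually confirm Chennai as primary while still surfacing a fallback if that relay degrades.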

Phase 3: Identity and Security Integration

Integrate with your existing IAM (Identity and Access Management) using OAuth2 or JWT. For AI video KYC banking India, ensure that the session token is short-lived and tied to a specific device ID and geo-fence.
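A short-lived session token bound to a device and a geo-fence can be sketched as an HS256 JWT using only the standard library. This is a sketch for illustration, and a vetted library such as PyJWT is the better choice in production. `sub`, `iat`, and `exp` are registered JWT claims. The `device_id` and `geofence` claim names, and the idea of representing the geo-fence as a region label, are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(secret: bytes, signing_input: str) -> str:
    return _b64url(hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest())


def issue_token(secret: bytes, user_id: str, device_id: str, geofence: str,
                ttl_s: int = 300, now: Optional[int] = None) -> str:
    """Issue a short-lived token bound to one device and one geo-fence label."""
    iat = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {"sub": user_id, "device_id": device_id, "geofence": geofence,
              "iat": iat, "exp": iat + ttl_s}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims))
    return signing_input + "." + _sign(secret, signing_input)


def verify_token(secret: bytes, token: str, device_id: str, geofence: str,
                 now: Optional[int] = None) -> Optional[dict]:
    """Return the claims only if signature, expiry, device, and geo-fence all match."""
    try:
        header_b64, claims_b64, sig_b64 = token.split(".")
    except ValueError:
        return None
    expected = _sign(secret, f"{header_b64}.{claims_b64}")
    # Constant-time comparison of the signatures.
    if not hmac.compare_digest(expected.encode(), sig_b64.encode("utf-8")):
        return None
    claims = json.loads(_b64url_decode(claims_b64))
    current = int(time.time()) if now is None else now
    if current >= claims["exp"]:
        return None
    if claims["device_id"] != device_id or claims["geofence"] != geofence:
        return None
    return claims
```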

Phase 4: The “Barge-In” Logic

This is the most difficult technical hurdle. You must implement a “Voice Activity Detection” (VAD) listener on the client side. When the user speaks, the client must send an immediate “interrupt” signal to the server to kill the current video stream and clear the TTS buffer. This prevents the awkward “talking over each other” that plagued early AI agents.
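The server side of that interrupt path can be sketched with asyncio. This is a minimal sketch that makes two assumptions. First, the client's VAD interrupt arrives as a call to the hypothetical `interrupt()` coroutine; in practice a DataChannel or WebSocket message would trigger it. Second, rendered output is modeled as a queue of chunks.

```python
import asyncio
from typing import List, Optional


class AvatarSession:
    """Hypothetical server-side session: a playback task drains TTS/video
    chunks from a queue, and interrupt() implements barge-in."""

    def __init__(self, chunk_duration_s: float = 0.1) -> None:
        self.queue: "asyncio.Queue[str]" = asyncio.Queue()
        self.played: List[str] = []
        self.chunk_duration_s = chunk_duration_s
        self._playback: Optional["asyncio.Task[None]"] = None

    async def _play(self) -> None:
        while True:
            chunk = await self.queue.get()
            # Stand-in for rendering and streaming one chunk to the client.
            await asyncio.sleep(self.chunk_duration_s)
            self.played.append(chunk)

    def start(self) -> None:
        self._playback = asyncio.create_task(self._play())

    async def interrupt(self) -> None:
        """Handle the client's 'user started speaking' signal."""
        if self._playback is not None:
            self._playback.cancel()       # stop the chunk currently streaming
            try:
                await self._playback
            except asyncio.CancelledError:
                pass
        while not self.queue.empty():     # flush buffered TTS output
            self.queue.get_nowait()
        self.start()                      # ready for the next LLM response
```

Cancelling the in-flight chunk as well as flushing the queue matters: clearing only the buffer would still let the avatar finish its current sentence over the user.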

Phase 5: Observability and QA

In 2026, standard APM (Application Performance Monitoring) isn't enough. You need specialized metrics:

- MOS (Mean Opinion Score): For audio/video quality.

- WER (Word Error Rate): For ASR accuracy in regional languages.

- AV Sync Drift: Ensuring the lips move in perfect sync with the audio (target < 80ms drift).
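WER and AV drift are both straightforward to compute once you have reference transcripts and paired timestamps. The sketch below uses whitespace tokenization, which simplifies things for code-switched Hinglish or for scripts that need normalization first. The function names are hypothetical, and the sketch assumes audio and video frames are paired upstream.

```python
from typing import Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count,
    computed as a word-level Levenshtein distance."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript is empty")
    prev = list(range(len(hyp) + 1))  # distances for an empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion
                          curr[j - 1] + 1,         # insertion
                          prev[j - 1] + (r != h))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def max_av_drift_ms(audio_pts_ms: Sequence[float], video_pts_ms: Sequence[float]) -> float:
    """Largest absolute gap between paired audio and video timestamps
    (pairs beyond the shorter sequence are ignored)."""
    return max(abs(a - v) for a, v in zip(audio_pts_ms, video_pts_ms))
```

A session would then pass the AV check when `max_av_drift_ms(...)` stays under the 80ms target.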

Source: MSFT Innovation Hub India: Voice Agent Avatar Samples

7. ROI Modeling, Future Trends, and FAQs

Investing in a streaming avatar API India 2026 strategy requires a clear financial justification.

ROI Levers for the Indian Enterprise

- Call Deflection: Moving 30% of high-intent support queries from human agents to AI avatars can reduce operational costs by 40-50%.

- Conversion Uplift: Interactive sales avatars have shown a 25% higher conversion rate compared to text-based chatbots in the Indian e-commerce sector.

- KYC Efficiency: Reducing the “Time to Onboard” from 24 hours to 10 minutes significantly lowers the customer drop-off rate.
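The call-deflection lever reduces to simple arithmetic. This sketch uses a uniform per-query cost model. The function name and every input value are hypothetical placeholders, not benchmarks.

```python
def deflection_savings(monthly_queries: int, deflection_rate: float,
                       human_cost: float, avatar_cost: float) -> float:
    """Fractional reduction in handling cost when a share of queries moves
    from human agents to avatars. Costs are per query, in any one currency."""
    baseline = monthly_queries * human_cost
    deflected = monthly_queries * deflection_rate
    new_cost = (monthly_queries - deflected) * human_cost + deflected * avatar_cost
    return 1 - new_cost / baseline
```

For example, `deflection_savings(100_000, 0.30, 60.0, 12.0)` returns approximately 0.24. Under this uniform-cost model, savings can never exceed the deflection rate. A 40-50% reduction from 30% deflection therefore implies that the deflected queries cost more than average to handle, or that other costs fall as well.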

2026 Trends to Watch

- Agentic Avatars: The shift from “talking heads” to “agentic” systems that can actually execute transactions (e.g., Kaltura’s acquisition of eSelf.ai).

- Emotion-Aware Personalization: Avatars that can detect frustration in a user’s voice and automatically switch to a more empathetic tone or escalate to a human.

- Wearable Integration: As AR glasses gain traction in urban India, real-time avatars will move from screens to the user’s physical environment.

Frequently Asked Questions (FAQs)

Q1: How do we ensure data residency for AI video KYC under the DPDP Act?

A: To ensure compliance, you must select a provider that offers an India-region deployment. Your architecture should ensure that all media processing (ASR, TTS, and Rendering) occurs within Indian borders. Studio by TrueFan AI ensures data residency by leveraging India-based cloud clusters and providing clear audit logs that satisfy DPDP Act 2023 requirements for data fiduciaries.

Q2: What is the minimum internet speed required for a smooth 2026 avatar experience?

A: While 5G-Advanced is ideal, a well-optimized implementation using H.264 Baseline profiles can run on a stable 4Mbps connection. However, for sub-300ms latency, a ping of < 50ms to the nearest TURN server is more critical than raw download speed.

Q3: Can these avatars handle code-switching (Hinglish)?

A: Yes. Modern ASR engines used in 2026 are specifically trained on Indian multilingual datasets. The key is to use an orchestration layer that can process mixed-language tokens and feed them into a TTS engine that supports natural-sounding Hinglish prosody.

Q4: How does “barge-in” work technically?

A: Barge-in relies on a low-latency feedback loop. The client-side SDK monitors the microphone. When it detects speech (VAD), it sends a “stop” command via a WebSocket or DataChannel to the avatar server. The server then flushes the current video buffer and waits for the new LLM response.

Q5: What are the primary costs involved in a real-time avatar implementation?

A: Costs are typically broken down into:

- Avatar Rendering: Per-minute or per-session fees.

- LLM Tokens: Input/Output costs for the “brain.”

- ASR/TTS: Per-character or per-minute processing.

- Infrastructure: TURN server egress and storage for compliance logs.

Q6: Is it possible to integrate these avatars with WhatsApp?

A: Absolutely. In the Indian context, WhatsApp is the primary channel. A WhatsApp click-to-chat link can start the journey. The conversation then gives the user a link that opens a lightweight WebRTC-enabled web view where the video interaction takes place, and the session data is written back to your CRM.
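The entry point of that journey is an ordinary click-to-chat URL in WhatsApp's `https://wa.me/<number>?text=<message>` format. The sketch below only builds that URL. The phone number is a placeholder, and issuing the follow-up web-view session link is left to your backend.

```python
from urllib.parse import quote


def click_to_chat_link(business_number: str, prefill: str) -> str:
    """Build a wa.me click-to-chat URL. WhatsApp expects the full
    international number as digits only (no '+', spaces, or dashes)."""
    digits = "".join(ch for ch in business_number if ch.isdigit())
    return f"https://wa.me/{digits}?text={quote(prefill, safe='')}"
```

For example, `click_to_chat_link("+91 98765-43210", "Start my video KYC")` yields a link that opens a chat with the prefilled message "Start my video KYC".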

Procurement Checklist for 2026

- [ ] Does the vendor provide TURN servers in at least two Indian metros?

- [ ] Is there a documented path for DPDP Act 2023 compliance (Data Residency)?

- [ ] Does the ASR/TTS support the specific regional languages of your target demographic?

- [ ] What is the guaranteed SLA for “First Token Latency”?

- [ ] Can the avatar be customized to match your brand’s specific “Digital Twin” or influencer?

The era of static digital interaction is over. By implementing real-time streaming avatars, your enterprise can deliver the personalized, high-trust experiences that the modern Indian consumer demands.

Source: Kaltura + eSelf: The Rise of Agentic Avatars | TrueFan AI Product Capabilities

Frequently Asked Questions

What’s the difference between streaming avatars and pre-rendered video bots?

Streaming avatars support real-time, two-way conversations with sub-second latency, barge-in, and backend task execution. Pre-rendered bots play fixed clips and cannot adapt mid-utterance, interrupt, or personalize streams in real time.

How do I design a latency budget for sub-300ms experiences?

Allocate tight budgets across ASR (50–100ms), LLM first token (100–150ms), TTS (80–120ms), and render/stream (40–60ms). Use local TURN, adaptive bitrate, token streaming, and client-side VAD to consistently hit targets.

Which industries in India see the fastest ROI from AI video agents?

Banking (KYC deflection and faster onboarding), e-commerce (interactive product guidance and couponing), and insurance (FNOL triage and instant estimates) typically realize 25–50% efficiency and conversion gains.

What are best practices for barge-in and turn-taking orchestration?

Implement client VAD, send immediate interrupt signals via DataChannel or WebSocket, flush TTS/video buffers, and use fallback cues (visual “thinking” or filler phrases) when LLM latency spikes.

How should enterprises evaluate vendors for India-specific compliance?

Prioritize India-region edges, DPDP-compliant data residency, RBI V-CIP controls (liveness, geo-tagging, audit trails), Indic language depth, and native integrations like WhatsApp Business.