Emotion AI Video Marketing India 2026: Real-Time Sentiment Video Optimization with Empathetic AI Avatars for 2–3x Engagement

Estimated reading time: ~12 minutes

Key Takeaways

- Emotion AI enables real-time sentiment optimization, adapting video tone, scenes, and CTAs to each viewer’s mood.

- India’s vernacular-first landscape demands culturally calibrated AI avatars with regional prosody for authenticity and trust.

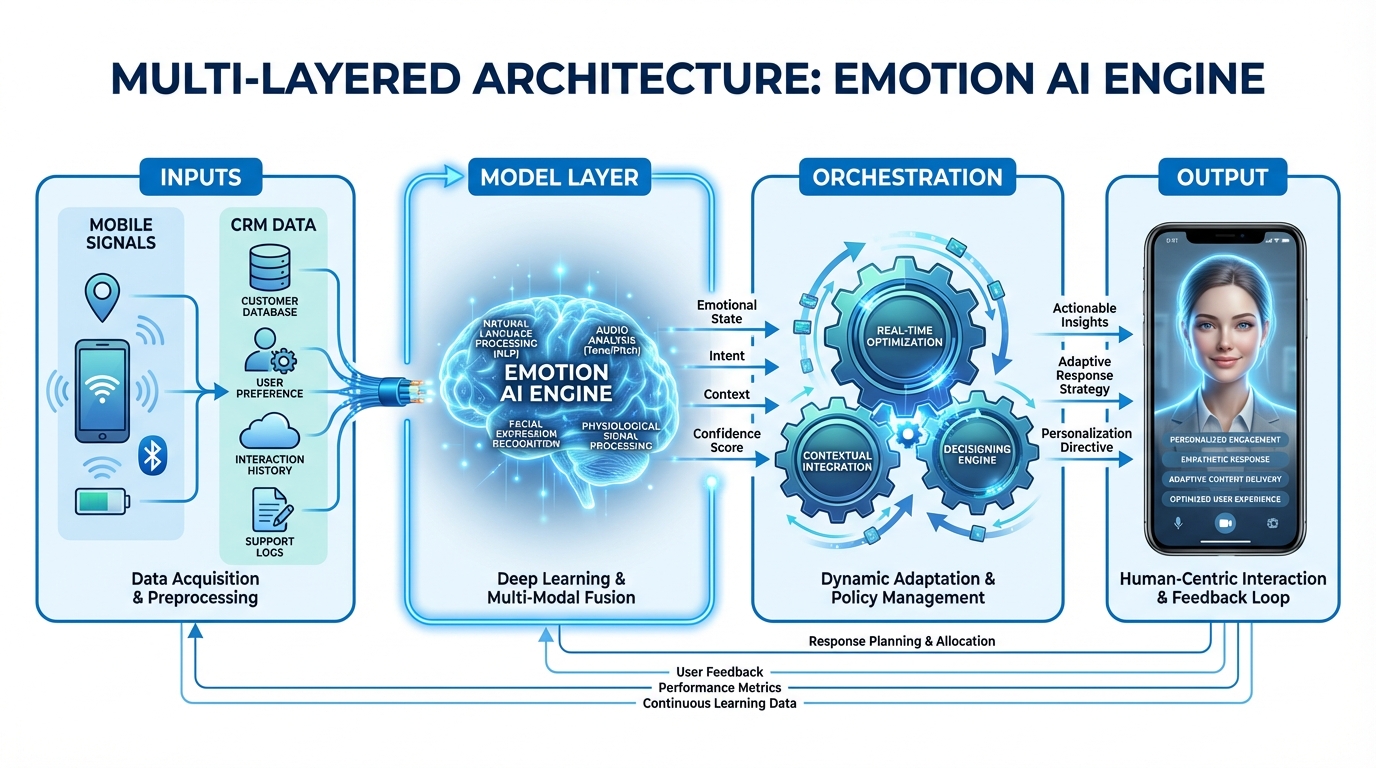

- A multilayer engine spanning inputs, models, orchestration, and delivery powers sentiment-responsive video content at scale.

- Enterprises must align with DPDP consent-first principles, bias audits, and human-in-the-loop governance.

- A pragmatic 30-60-90 day rollout proves ROI across acquisition, retention, and support with 2–3x engagement gains.

Emotion AI video marketing in India is no longer a futuristic concept in 2026; it is a core growth lever for enterprises navigating a saturated digital landscape. As Indian consumers demand deeper resonance and cultural authenticity, the shift from static, one-size-fits-all content to empathetic AI marketing videos is becoming a necessity. By 2026, the ability to deploy real-time sentiment video optimization and sentiment-responsive video content will separate market leaders from those struggling with engagement fatigue. Platforms like Studio by TrueFan AI help enterprises bridge this gap by transforming raw data into emotionally intelligent video experiences that adapt to the viewer's mood in real time.

1. Why Emotion AI Video Marketing India 2026 Matters

The Indian market in 2026 is characterized by a “video-first, vernacular-always” ethos. With India’s digital video universe projected to exceed 545 million users, the competition for attention has moved beyond the “what” to the “how.” It is no longer enough to deliver a message; brands must deliver a feeling. Kantar’s 2026 marketing trends highlight that empathy and “agentic” AI—AI that can act and adapt autonomously—are now core to driving brand outcomes.

In this environment, emotion AI serves as the optimization layer that ensures content doesn't just reach the screen but resonates with the viewer. Indian enterprises are increasingly moving away from generic AI avatars toward emotionally intelligent AI avatars that can mirror the cultural nuances and linguistic prosody of a diverse population.

The Tipping Point for Empathetic Video

Several factors have converged to make 2026 the tipping point for this technology in India:

- The Vernacular Boom: Regional content growth is outpacing English-language content by 3x. For a video to be effective in Tamil, Marathi, or Bengali, it must capture the specific emotional cadence of those languages.

- Short-Form Dominance: With the surge in short-form video consumption, brands have less than 3 seconds to establish an emotional connection. Sentiment-driven video personalization allows for “hook optimization” based on detected viewer interest.

- Trust as Currency: In an era of AI-generated noise, trust is built through empathy. Real-time sentiment video optimization ensures that if a customer shows signs of confusion or frustration, the video tone shifts to be more reassuring and helpful.

Sources:

- Kantar Marketing Trends 2026

- Fortune India: Boom of Regional and Digital Entertainment

- ClickUp: India Marketing Trends 2026

2. From Detection to Action: Sentiment Analysis Video Campaigns

To implement these strategies, enterprise leaders must first understand the technical pillars of sentiment analysis video campaigns. This isn't just about reading comments; it’s about a multimodal approach to understanding the customer's state of mind.

Defining the Core Concepts

- Emotion Detection Customer Engagement: This involves using AI to infer user emotional states—such as joy, trust, confusion, or frustration—from signals like facial micro-expressions (with opt-in), voice prosody, text interactions, and even click/scroll patterns.

- Sentiment Analysis Video Campaigns: These are marketing programs where the video creative itself is continuously tuned based on audience sentiment signals. If a specific segment of the audience reacts negatively to a certain scene, the AI automatically swaps it for a higher-performing variant.

- Customer Emotion Video Analytics: This goes beyond “views” and “likes.” It measures the “emotion lift”—the delta between a viewer's sentiment before and after watching the video. It tracks dwell time, replays, and sentiment-driven conversion rates.

- Sentiment-Responsive Video Content: These are dynamic video assets designed to switch scripts, CTAs, and even the avatar’s facial expressions based on the detected mood of the viewer during the session.

In the Indian context, these definitions must account for linguistic diversity. A “frustrated” tone in Hindi may sound very different from a “frustrated” tone in Telugu. Therefore, sentiment-responsive video content must be culturally calibrated to avoid misinterpretation.
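
As a rough illustration of the "emotion lift" metric defined above, the following sketch computes the per-session delta between post-view and pre-view sentiment and summarizes it across sessions. The `ViewSession` fields, the -1.0 to 1.0 sentiment scale, and the sample values are assumptions made for the example; they are not taken from any specific platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ViewSession:
    """One viewing session; field names are hypothetical."""
    viewer_id: str
    sentiment_before: float  # assumed scale: -1.0 (negative) to 1.0 (positive)
    sentiment_after: float
    converted: bool


def emotion_lift(session: ViewSession) -> float:
    """Delta between post-view and pre-view sentiment for one session."""
    return session.sentiment_after - session.sentiment_before


def summarize(sessions: list[ViewSession]) -> dict[str, float]:
    """Mean lift, plus conversion rate among sessions with positive lift."""
    lifts = [emotion_lift(s) for s in sessions]
    lifted = [s for s in sessions if emotion_lift(s) > 0]
    conversion = sum(s.converted for s in lifted) / len(lifted) if lifted else 0.0
    return {
        "mean_lift": mean(lifts) if lifts else 0.0,
        "conversion_rate_when_lifted": conversion,
    }


# Made-up sample data for illustration only.
sessions = [
    ViewSession("a", -0.5, 0.25, True),
    ViewSession("b", 0.0, 0.5, False),
    ViewSession("c", 0.25, 0.0, False),
]
print(summarize(sessions))
```

In practice the before and after scores would come from whichever consented signals the campaign collects, and the scale would need calibrating per language for the reasons given above.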

3. The Real-Time Engine for Sentiment-Driven Video Personalization

The architecture of a sentiment-driven video personalization engine is what allows a brand to scale empathy. It requires a sophisticated stack that moves from data input to real-time creative orchestration. Studio by TrueFan AI's 175+ language support and AI avatars provide the necessary foundation for this orchestration, ensuring that the output is both photorealistic and emotionally accurate.

The Multi-Layered Architecture

- The Inputs Layer:

  - Live Signals: Tracking player interactions such as skips, replays, and volume adjustments.

  - Data Enrichment: Integrating CRM data to understand the customer’s lifecycle stage and previous sentiment scores.

  - Contextual Data: Identifying the channel (e.g., WhatsApp vs. Web) and the language preference.

- The Model Layer:

  - Voice Modulation Sentiment Analysis: This sub-layer analyzes the viewer's speech (if they interact via voice) to classify their mood. It then adapts the prosody tags (pitch, pacing, timbre) of the AI's response.

  - Emotional Range Voice Cloning: This allows a brand to maintain its unique voice identity while shifting between emotional states, such as moving from a “celebratory” tone for a purchase to a “reassuring” tone for a support issue.

- The Orchestration Layer:

  - Real-Time Sentiment Video Optimization: This is the “brain” that decides which scene to show next. If the AI detects confusion (e.g., multiple replays of a technical section), it triggers a “simplified explainer” scene.

  - AI Voice Emotion Control Avatars: These avatars don't just speak; they emote. They adjust their micro-gestures and lip-sync to match the emotional intensity of the generated TTS.

- The Output Layer:

  - Emotion-Aware Avatar Videos India: The final delivery of culturally tuned, multi-lingual video across platforms like WhatsApp, email, and in-app SDKs.
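
The model layer's prosody adaptation (pitch, pacing, timbre) could be sketched as a lookup from the detected viewer mood to a response preset. The preset values, the mood labels, and the 0.6 confidence threshold below are illustrative assumptions, not settings from any real TTS engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prosody:
    """Relative prosody settings; the scale (1.0 = neutral) is an assumption."""
    pitch: float
    pace: float
    timbre: str


# Hypothetical presets: viewer mood -> how the avatar's reply should sound.
RESPONSE_PRESETS = {
    "frustrated": Prosody(pitch=0.95, pace=0.9, timbre="warm"),
    "confused": Prosody(pitch=1.0, pace=0.85, timbre="clear"),
    "joyful": Prosody(pitch=1.1, pace=1.05, timbre="bright"),
}
NEUTRAL = Prosody(pitch=1.0, pace=1.0, timbre="neutral")


def prosody_for(mood: str, confidence: float, threshold: float = 0.6) -> Prosody:
    """Fall back to neutral delivery when the mood is unknown or uncertain."""
    if confidence < threshold:
        return NEUTRAL
    return RESPONSE_PRESETS.get(mood, NEUTRAL)


print(prosody_for("confused", confidence=0.8))
print(prosody_for("confused", confidence=0.3))
```

Falling back to a neutral preset on low confidence is one conservative design choice: a misread mood then produces a plain delivery instead of a mismatched emotional one.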

By 2026, this engine will be the standard for Indian D2C brands looking to reduce cart abandonment and B2B firms aiming to accelerate pipeline velocity through personalized ABM videos.
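
To make the orchestration step concrete, here is a minimal rule-based sketch of the "which scene next" decision, using replays as a proxy for confusion as described above. The `PlayerSignals` fields, the scene naming scheme, and the thresholds are all hypothetical; a production system might use a learned model instead of fixed rules.

```python
from dataclasses import dataclass


@dataclass
class PlayerSignals:
    """Live player signals for the current scene; names are hypothetical."""
    scene_id: str
    replays: int = 0
    skipped: bool = False
    watched_fraction: float = 0.0  # 0.0 to 1.0


def next_scene(signals: PlayerSignals, default_next: str) -> str:
    """Rule-based choice of the next scene. Thresholds are assumptions."""
    if signals.replays >= 2:
        # Repeated replays are treated as a proxy for confusion.
        return f"{signals.scene_id}_simplified_explainer"
    if signals.skipped or signals.watched_fraction < 0.25:
        # An early exit is treated as low interest: switch to a shorter hook.
        return "short_hook"
    return default_next


confused = PlayerSignals("api_setup", replays=3, watched_fraction=1.0)
print(next_scene(confused, default_next="pricing"))
```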

4. India-Ready Execution: Emotion-Aware Avatar Videos in D2C and B2B

Execution is where the theory of emotion AI meets the reality of ROI. Solutions like Studio by TrueFan AI demonstrate ROI through their ability to handle the scale and complexity of the Indian market, particularly across the “Big Three” use cases: Acquisition, Retention, and Support.

D2C Use Case: The “Cart Rescue” Pivot

In 2026, a standard “You left something in your cart” email is ignored. A sentiment-responsive video content piece sent via WhatsApp, however, changes the game.

- The Scenario: A customer abandons a high-value electronics cart after looking at the EMI options.

- The Emotion AI Response: The system detects “hesitation.” It triggers an emotion-aware avatar video where a friendly, reassuring spokesperson explains the 0-cost EMI process in the customer's native language (e.g., Marathi).

- The Result: A 2.5x increase in conversion compared to static reminders.

B2B Use Case: The “ABM Persona” Shift

For B2B enterprises, mood-based video adaptation allows for hyper-personalized follow-ups.

- The Scenario: After a webinar, a technical lead and a CFO from the same account watch a demo video.

- The Emotion AI Response: The technical lead, who rewatches the “API Integration” segment, receives a follow-up video with a precise, steady-toned avatar diving deeper into documentation. The CFO, who skipped to the “ROI” section, receives a concise, confident summary video focusing on cost-savings.

- The Result: Accelerated deal cycles and higher trust scores within the buying committee.
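
The persona split in this scenario could be approximated by routing each viewer to a follow-up based on the demo segment they spent the most time on. The segment names, tone labels, and topic labels below are invented for illustration.

```python
# Hypothetical mapping: most-watched demo segment -> (avatar tone, follow-up topic).
FOLLOW_UPS = {
    "api_integration": ("precise", "documentation_deep_dive"),
    "roi": ("confident", "cost_savings_summary"),
}
DEFAULT_FOLLOW_UP = ("neutral", "general_recap")


def pick_follow_up(seconds_by_segment: dict[str, float]) -> tuple[str, str]:
    """Return (tone, topic) for the segment the viewer watched longest.

    Rewatching a segment adds to its total seconds, so replays count
    toward the decision.
    """
    if not seconds_by_segment:
        return DEFAULT_FOLLOW_UP
    top_segment = max(seconds_by_segment, key=seconds_by_segment.get)
    return FOLLOW_UPS.get(top_segment, DEFAULT_FOLLOW_UP)


# Made-up watch logs for the two viewers in the scenario.
tech_lead = {"intro": 20.0, "api_integration": 240.0, "roi": 15.0}
cfo = {"intro": 5.0, "api_integration": 0.0, "roi": 90.0}
print(pick_follow_up(tech_lead))
print(pick_follow_up(cfo))
```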

Customer Care: Support Deflection

When a customer is frustrated, the last thing they want is a robotic “Your call is important to us.” Sentiment-driven video personalization allows for a “recovery” video. If a negative sentiment is detected in a support ticket, a WhatsApp video is triggered featuring an empathetic AI avatar that acknowledges the specific pain point and provides a visual walkthrough of the solution.

Sources:

- The Aspiring CEO: AI Video Marketing Trends 2026

- TrueFan AI: Emotion Detection in AI Video Marketing

5. Tech Stack, Governance, and DPDP Compliance

Implementing emotion AI video marketing in India requires more than creative flair; it requires a robust, compliant tech stack. In India, the Digital Personal Data Protection (DPDP) Act, 2023, together with the rules notified under it, governs how emotion-related personal data must be handled.

The Essential Tech Stack

- Core Platform: An enterprise-grade AI video platform that supports ISO 27001 and SOC 2 compliance.

- Voice Engine: Emotional TTS tools such as YourVoic are critical for generating emotion-tagged voiceovers that sound natural in regional dialects.

- Data Layer: A Customer Data Platform (CDP) that stores granular consent flags.

- Orchestration: API-driven workflows that connect the video player to the CRM and WhatsApp Business API.

Governance and Ethics

The use of emotion detection customer engagement must be built on a foundation of “Consent-First” architecture. This includes:

- Explicit Opt-in: Clear, granular notices explaining that AI is being used to tailor the experience based on engagement signals.

- Purpose Limitation: Ensuring emotion data is only used for the specific marketing or support interaction it was collected for.

- Bias Mitigation: Regularly auditing models to ensure that emotionally intelligent AI avatars do not exhibit linguistic or cultural bias against specific regional groups.

- Human-in-the-Loop: For high-stakes B2B interactions, having a human review the sentiment-driven triggers to ensure brand safety.
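
One way to picture the consent-first principles above is a gate that runs before any emotion signal is used. This is a minimal sketch: the `ConsentRecord` schema and the purpose strings are hypothetical, and a real deployment would also need consent withdrawal, audit logging, and legal review.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ConsentRecord:
    """Per-user consent flags as a CDP might store them (hypothetical schema)."""
    user_id: str
    granted_purposes: set[str] = field(default_factory=set)


def may_use_emotion_signals(record: Optional[ConsentRecord], purpose: str) -> bool:
    """Allow processing only with an explicit opt-in for this exact purpose."""
    return record is not None and purpose in record.granted_purposes


def choose_experience(
    record: Optional[ConsentRecord], purpose: str, needs_human_review: bool = False
) -> str:
    if not may_use_emotion_signals(record, purpose):
        return "static_video"  # no consent: no emotion-based tailoring
    if needs_human_review:
        return "queued_for_review"  # human-in-the-loop for high-stakes sends
    return "adaptive_video"


user = ConsentRecord("u1", {"support_video_personalization"})
print(choose_experience(user, "support_video_personalization"))
print(choose_experience(user, "marketing_video_personalization"))
```

Checking the exact purpose string, not a single global flag, is what enforces purpose limitation here: consent given for a support interaction does not unlock marketing personalization.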

Sources:

- ACR Journal: AI-Powered Personalization vs Consumer Privacy in India

- Tatvic: Consent-First Architecture Guide

- YourVoic TTS

6. Implementation Blueprint: 30-60-90 Day Rollout

Transitioning to a sentiment-driven video personalization strategy doesn't happen overnight. Enterprises should follow a structured rollout to ensure technical stability and measurable ROI.

Days 0–30: The Pilot Phase

- Objective: Validate the impact of emotion-aware avatar videos on a single high-impact journey.

- Action: Select “Cart Abandonment” or “Webinar Follow-up.”

- Setup: Create 2 emotional variants (e.g., Reassuring and Enthusiastic) in 2 languages (e.g., Hindi and English).

- Metric: Compare the CTR and conversion rate against a static video control group.
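
For the pilot comparison, a simple approach is to report the relative lift of the adaptive variant over the static control and check whether the difference is larger than chance would explain. The sketch below uses a pooled two-proportion z-test, which is one common choice and not necessarily what any given analytics tool applies; the sample counts are invented.

```python
from math import erf, sqrt


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of variant over control, e.g. 0.5 means +50%."""
    if control_rate == 0:
        raise ValueError("control rate must be non-zero")
    return (variant_rate - control_rate) / control_rate


def two_proportion_p_value(x1: int, n1: int, x2: int, n2: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test.

    x1/n1 are conversions/viewers in control, x2/n2 in the variant.
    """
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0
    z = (x2 / n2 - x1 / n1) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - cdf)


# Invented pilot numbers: 1,000 viewers per arm.
control_conv, control_n = 40, 1000
variant_conv, variant_n = 60, 1000
lift = relative_lift(control_conv / control_n, variant_conv / variant_n)
p = two_proportion_p_value(control_conv, control_n, variant_conv, variant_n)
print(f"lift={lift:.2f}, p={p:.4f}")
```

The same two functions apply to CTR by passing clicks and impressions in place of conversions and viewers.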

Days 31–60: Expansion and Integration

- Objective: Scale across channels and increase linguistic depth.

- Action: Integrate the WhatsApp API for automated delivery. Add 3 more regional languages (e.g., Tamil, Telugu, Kannada).

- Setup: Enable real-time sentiment video optimization rules based on watch-time and replay data.

- Metric: Track “Sentiment Delta”—how much the viewer's engagement score improves after the “adaptive” scene is shown.

Days 61–90: Optimization and Governance

- Objective: Full-funnel automation and compliance auditing.

- Action: Deploy mood-based video adaptation for customer retention and support.

- Setup: Implement automated creative refresh cycles where the AI suggests new script variants based on customer emotion video analytics. Use emotional range voice cloning.

- Metric: Measure the reduction in CAC (Customer Acquisition Cost) and the increase in LTV (Lifetime Value).

By the end of 90 days, the enterprise should have a self-optimizing engine that treats every customer as an individual, responding to their unique emotional state with precision and empathy.

Final Summary of 2026 Statistics & Data Points:

- 545M+: The projected number of digital video users in India by 2026.

- 2–3x: The average engagement uplift seen when switching from static to sentiment-responsive video.

- 175+: The number of languages supported by leading emotion AI platforms to cover the Indian vernacular market.

- 40%: The increase in conversion probability when a viewer's “confusion” is addressed via real-time video optimization.

- 70%: The percentage of Indian CMOs who identify “Empathy AI” as a top-three priority for 2026.

FAQs: Navigating the Future of Empathetic Video

How does the DPDP Act affect the collection of emotion data for video marketing?

Emotion detection typically involves processing personal data, so it falls within the DPDP Act's consent requirements: consent must be free, specific, informed, unconditional, and unambiguous, and given through a clear affirmative action. Enterprises must provide clear notices at the point of interaction and make opting out as easy as opting in. Data should be limited to what the stated purpose needs and erased once that purpose (e.g., personalizing a video session) is fulfilled.

Can sentiment-responsive video work on low-bandwidth networks in Tier 3 Indian cities?

Yes, provided the delivery path is designed for it. The decision about which scene to play next is made server-side, in the cloud or at a nearby edge node, while the video segments themselves are cached locally or delivered via adaptive bitrate streaming. Because only a small decision message crosses the network at switch time, the transition between emotional variants can stay smooth even on a congested 4G connection in rural India.
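
A minimal sketch of the client-side half of this arrangement: once the next scene has been decided remotely, the player picks a rendition that fits the measured throughput and prefers a locally cached segment. The rendition ladder, the bitrates, and the 0.8 safety factor are illustrative assumptions, not values from a specific player.

```python
# Hypothetical rendition ladder: (label, required kbps), highest first.
LADDER = [("720p", 2500), ("480p", 1200), ("360p", 700), ("240p", 300)]


def pick_rendition(measured_kbps: float, safety: float = 0.8) -> str:
    """Highest rendition whose bitrate fits within a margin of throughput."""
    budget = measured_kbps * safety
    for label, kbps in LADDER:
        if kbps <= budget:
            return label
    return LADDER[-1][0]  # always fall back to the lowest rung


def segment_source(scene_id: str, rendition: str, cache: set[tuple[str, str]]) -> str:
    """Prefer a locally cached segment so the scene switch needs no download."""
    return "cache" if (scene_id, rendition) in cache else "network"


# Example: a weak connection with the reassuring variant prefetched at 360p.
cache = {("reassuring_explainer", "360p")}
rendition = pick_rendition(measured_kbps=1000)
print(rendition, segment_source("reassuring_explainer", rendition, cache))
```

Prefetching the most likely emotional variants at a low rendition is one way to keep scene switches smooth when throughput is unpredictable.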

How do you prevent the “Uncanny Valley” effect with AI avatars?

The key is using photorealistic, licensed avatars and high-quality emotional range voice cloning. By limiting the avatar's emotional range to brand-safe expressions (e.g., avoiding extreme anger or exaggerated joy), the interaction remains professional and human-like.

What is the primary ROI metric for emotion AI video marketing?

While conversion rate is the ultimate goal, the leading indicator is “Completion Rate per Sentiment Segment.” If a brand can move a “confused” viewer to a “completed view” through real-time optimization, the likelihood of conversion increases by over 40%.
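
"Completion Rate per Sentiment Segment" can be computed with a simple grouped count, as in this sketch. The segment labels and the input shape (pairs of segment and completion flag) are assumptions for the example.

```python
from collections import defaultdict


def completion_rate_by_segment(views: list[tuple[str, bool]]) -> dict[str, float]:
    """Completion rate per sentiment segment.

    `views` holds (sentiment_segment, completed) pairs, one per view.
    """
    totals: dict[str, int] = defaultdict(int)
    completed: dict[str, int] = defaultdict(int)
    for segment, done in views:
        totals[segment] += 1
        completed[segment] += int(done)
    return {segment: completed[segment] / totals[segment] for segment in totals}


# Made-up sample views.
views = [
    ("confused", True),
    ("confused", False),
    ("engaged", True),
    ("confused", True),
    ("engaged", True),
]
print(completion_rate_by_segment(views))
```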

How do platforms like Studio by TrueFan AI handle multi-lingual sentiment?

Platforms like Studio by TrueFan AI rely on deep integration of regional linguistics: they use specialized models that interpret the sentiment behind vernacular idioms and cultural contexts, so that an AI voice emotion control avatar doesn't just translate words but conveys the intended feeling across 175+ languages.

Is it possible to integrate these videos with existing CRM systems like Salesforce or HubSpot?

Absolutely. Enterprise-grade platforms provide webhooks and APIs that allow video engagement data (like emotion scores and completion rates) to flow directly into the CRM. This allows sales teams to see exactly how a prospect “felt” during a demo video, enabling a much more informed follow-up call.
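
As a rough sketch of that data flow, the function below maps a video-engagement webhook body to a CRM field update. Both the payload keys and the CRM field names are hypothetical; the actual schemas depend on the video platform and on how custom fields are configured in the CRM.

```python
import json


def to_crm_update(raw_body: str) -> dict:
    """Map a video-engagement webhook body to a CRM field update.

    Both the payload keys and the CRM field names are hypothetical.
    """
    event = json.loads(raw_body)
    return {
        "contact_id": event["contact_id"],
        "fields": {
            "video_completion_rate": float(event.get("completion_rate", 0.0)),
            "video_emotion_score": event.get("emotion_score"),
            "video_last_scene": event.get("last_scene", ""),
        },
    }


# Example payload, invented for illustration.
body = json.dumps(
    {
        "contact_id": "c-123",
        "completion_rate": 0.9,
        "emotion_score": 0.4,
        "last_scene": "roi_summary",
    }
)
print(to_crm_update(body))
```

The resulting dictionary would then be sent to the CRM through its own API client, and only for contacts who have consented to this use of their engagement data.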