Emotion AI Video Marketing India 2026: Real-Time Sentiment, Voice Modulation, and Emotion-Aware Avatars That 2–3x Enterprise Engagement

Estimated reading time: ~9 minutes

Key Takeaways

- Emotion AI video marketing will define India’s 2026 playbook, shifting from static to sentiment-responsive personalization.

- Real-time detection orchestrates avatars, voice modulation, and CTAs to match viewer mood, driving 2–3x engagement and lower CPA.

- An end-to-end affective tech stack blends emotion-aware avatars, emotional TTS/voice cloning, and a policy engine for dynamic adaptation.

- DPDP Act compliance demands explicit consent, data minimization, and rigorous bias mitigation.

- Proven blueprints across D2C, BFSI, B2B, and Telecom; platforms like Studio by TrueFan AI enable multilingual, culturally resonant scale.

Emotion AI video marketing is emerging as the next frontier for Indian brands seeking to break through engagement fatigue in 2026. The shift from static personalization to empathy-driven AI marketing videos is becoming a strategic necessity rather than a luxury for Indian enterprises. By integrating real-time sentiment analysis with localized AI avatars, businesses aim to bridge the gap between algorithmic efficiency and human resonance.

The core thesis for 2026 is clear: video marketing in India is transitioning toward sentiment-driven video personalization. In this new paradigm, avatars and synthetic voices do not just deliver a script; they adapt their tone, facial expressions, and calls-to-action (CTAs) based on the live emotional state of the viewer. This level of responsiveness is critical in a market where regional diversity is the norm. Industry projections suggest that by 2026 over 60% of video consumption in India will be in non-English languages, making localized, emotion-aware content a primary driver of conversion.

1. The Evolution of Engagement: Why Emotion AI is the 2026 Standard for India

The Indian consumer of 2026 is hyper-connected yet increasingly resistant to generic digital advertising. Traditional video marketing, which relied on broad demographic segments, is being replaced by sentiment-driven video personalization that treats every viewer as a unique emotional entity. This shift is fueled by three primary drivers: the dominance of regional languages, the rise of short-form content, and the maturation of India’s AI infrastructure.

The Multilingual Mandate

According to recent industry estimates, regional languages account for approximately 60% of online video consumption in India. This linguistic shift demands more than translation; it requires cultural and emotional localization. A "one-size-fits-all" approach fails to capture the nuances of sentiment across Hindi, Tamil, Telugu, Marathi, and Bengali audiences. Emotion-aware avatar videos fill this gap with culturally resonant digital twins that can pivot their delivery style to match local sensibilities.

From Static to Sentiment-Responsive

The marketing trends for 2026 emphasize a move away from "pre-rendered" personalization toward sentiment-responsive personalized video. Platforms like Studio by TrueFan AI enable enterprises to generate content that reacts to user signals (such as dwell time, interaction patterns, and even facial cues) and adjusts the narrative flow in real time. A frustrated user at a checkout page can then receive a calm, reassuring video guide, while an excited shopper in an upsell flow is met with high-energy, celebratory content.

ROI and Performance Metrics

The shift toward empathy AI marketing videos is supported by reported performance figures from the sources listed below. Enterprises adopting emotion-aware stacks are reported to see a 2–3x lift in engagement rates compared with standard personalized videos. AI-led personalization is also projected to reduce Cost Per Acquisition (CPA) by up to 45% by late 2026, as brands stop spending impressions on emotionally misaligned content.
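To put the CPA projection in concrete terms, here is a small worked calculation. The spend and acquisition figures are invented for illustration; only the 45% ceiling comes from the projection above.

```python
def cpa(spend: float, acquisitions: float) -> float:
    """Cost per acquisition: total spend divided by customers acquired."""
    if acquisitions <= 0:
        raise ValueError("acquisitions must be positive")
    return spend / acquisitions


def acquisitions_needed(spend: float, target_cpa: float) -> float:
    """Acquisitions required to reach a target CPA at a fixed spend."""
    return spend / target_cpa


# Hypothetical campaign: Rs 10,00,000 spend producing 2,000 acquisitions.
spend = 1_000_000
baseline_cpa = cpa(spend, 2_000)                 # 500.0
target_cpa = baseline_cpa * (1 - 0.45)           # the projected "up to 45%" reduction
needed = acquisitions_needed(spend, target_cpa)  # acquisitions at the same spend
uplift = needed / 2_000                          # roughly 1.82x the baseline volume
```

At a fixed budget, a 45% lower CPA is equivalent to acquiring about 1.8 times as many customers (1 / 0.55), which is a useful sanity check when comparing the CPA claim with the 2–3x engagement claim.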

Sources:

- TrueFan AI: Emotion-aware Avatar Videos (2026 Projections)

- EY-FICCI: Regional Video Consumption Trends

- Tantrash: Top Digital Marketing Trends India 2026

2. Defining the Sentiment-Driven Tech Stack: Avatars, Voice, and Intelligence

To execute emotion AI video marketing at scale in India, enterprises must move beyond simple Text-to-Speech (TTS) tools and adopt a comprehensive "Affective Stack." This stack combines vision, audio, and decisioning engines into a closed loop of emotional intelligence.

Emotion-Aware Avatars and Emotional Intelligence

At the heart of this stack are emotionally intelligent AI avatars. These are not merely animated figures; they are driven by multimodal models that take the viewer's inferred emotion as input and render appropriate micro-gestures. For the Indian market, this means avatars that reflect the subtle differences in head tilts, eye gaze, and hand movements that signify agreement or confusion in different regional contexts. Emotion-aware avatar videos use these nuances to build trust, particularly in high-stakes sectors like BFSI and Healthcare.

The Voice Layer: Modulation and Cloning

The auditory component of emotion AI is equally critical. Sentiment analysis of voice modulation lets the system assess the intonation, pitch, and energy of a user's voice (when interacting via a voice bot) or the perceived mood of the session. The system then uses voice cloning with a wide emotional range to adjust the AI's response. For example, if a customer sounds hurried, the AI voice can increase its tempo and focus on concise, authoritative delivery. Conversely, for a customer seeking support, the voice can shift to a lower pitch and slower pace to provide reassurance.
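One way to picture the tempo and pitch adjustments described above is as SSML `<prosody>` markup, a W3C standard that many TTS engines accept in some form. The sketch below is an assumption about how such a mapping could look, not the method of any named platform; the state names and the `PROSODY_BY_STATE` table are hypothetical, and support for specific `rate` and `pitch` values varies by engine.

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from an inferred listener state to SSML prosody labels.
PROSODY_BY_STATE = {
    "hurried": {"rate": "fast", "pitch": "medium"},      # concise, brisk delivery
    "needs_support": {"rate": "slow", "pitch": "low"},   # slower, lower, reassuring
    "neutral": {"rate": "medium", "pitch": "medium"},
}


def to_ssml(text: str, state: str) -> str:
    """Wrap text in an SSML prosody element chosen by listener state."""
    settings = PROSODY_BY_STATE.get(state, PROSODY_BY_STATE["neutral"])
    return (
        f'<speak><prosody rate="{settings["rate"]}" pitch="{settings["pitch"]}">'
        f"{escape(text)}</prosody></speak>"
    )


reassuring = to_ssml("Your refund is on its way.", "needs_support")
```

Unknown states fall back to neutral prosody, so a misclassified or novel emotion label never produces malformed markup.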

AI Voice Emotion Control and TTS Integration

Modern enterprises increasingly look for avatars with AI voice emotion control that supports real-time emotion tags. While tools like YourVoic's emotional TTS offer high-scale multilingual speech synthesis with emotional markers, the real value lies in the orchestration. Studio by TrueFan AI's 175+ language support and AI avatars provide the breadth needed to pair these emotional voices with visual representations that are equally expressive and culturally accurate.

Decisioning and Orchestration

The "brain" of the stack is the policy engine. This component maps detected emotional states, such as joy, frustration, or confusion, to specific adaptation policies. If the emotion detection layer identifies a "confusion" state during a product demo, the orchestrator can trigger a script swap, inserting a 15-second simplified explainer branch into the video timeline. This real-time adaptation is what separates 2026-era marketing from the static campaigns of the past.
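A policy engine of this kind can be sketched as a lookup table with a confidence gate. Everything below is illustrative: the `Adaptation` fields, the policy entries, and the 0.6 threshold are assumptions, not the configuration of any specific product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Adaptation:
    avatar_affect: str
    voice_style: str
    cta: str
    script_branch: Optional[str] = None  # extra branch to splice in, if any


# Hypothetical policy table: detected state -> adaptation.
POLICIES = {
    "frustration": Adaptation("calm", "slow_reassuring", "Talk to an Agent"),
    "confusion": Adaptation("patient", "slow_clear", "See a simpler walkthrough",
                            script_branch="simplified_explainer_15s"),
    "joy": Adaptation("celebratory", "high_energy", "Upgrade now"),
}
DEFAULT = Adaptation("neutral", "neutral", "Learn more")


def select_adaptation(state: str, confidence: float,
                      min_confidence: float = 0.6) -> Adaptation:
    """Return the adaptation for a state, or the default when unsure."""
    if confidence < min_confidence:
        return DEFAULT  # do not adapt on a weak inference
    return POLICIES.get(state, DEFAULT)
```

The confidence gate matters in practice: adapting the video on a weak or wrong inference can be worse than showing the neutral variant.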

Sources:

- IndiaAI: Does Emotion AI Enhance Human-Machine Interaction?

- AtomComm: AI Marketing Services in India 2026

3. Real-Time Sentiment Video Optimization: The Workflow of 2026

The implementation of real-time sentiment video optimization requires a closed-loop pipeline that connects user signals to dynamic content generation. This workflow ensures that the video experience evolves as the user interacts with the brand.

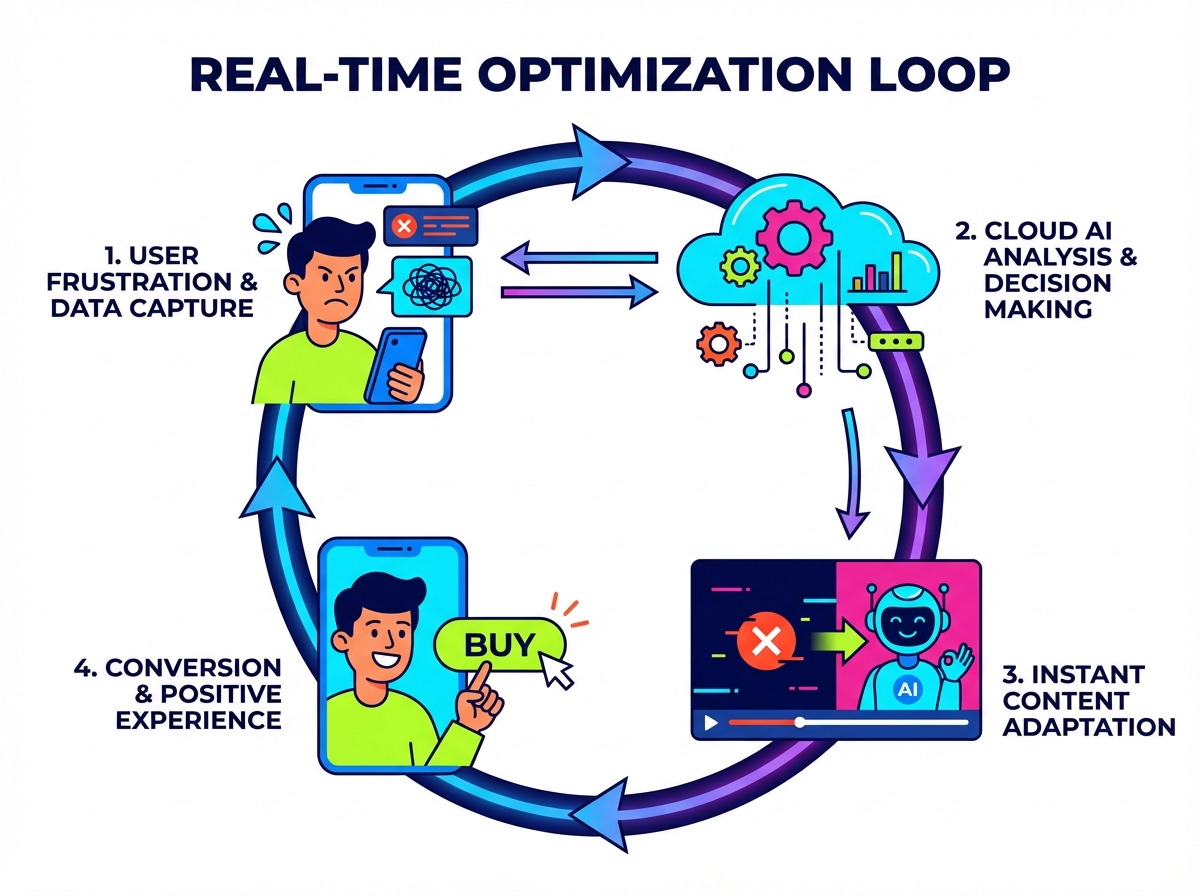

The Optimization Loop

- Signal Capture: The system gathers data from various touchpoints—web session metrics (like rage clicks), WhatsApp chat sentiment, or even opt-in camera feeds for facial expression analysis.

- Emotion Inference: Multimodal AI models decode these signals into affective states (e.g., high arousal/negative valence for anger).

- Policy Mapping: The orchestration engine selects the best content variant based on the inferred emotion.

- Dynamic Adaptation: The avatar’s affect, the TTS prosody, and the CTA are updated instantly.

- Measurement & Tuning: The system tracks the user's response to the adaptation, feeding the data back into the model for continuous improvement.
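The loop above can be sketched in a few lines. This is a minimal illustration under stated assumptions: signals arrive as valence and arousal scores in [-1, 1], the four quadrant labels are a coarse simplification of the valence/arousal plane, and `optimization_step` and the variant names are hypothetical.

```python
from typing import Callable, Dict, List


def infer_state(valence: float, arousal: float) -> str:
    """Coarse quadrant label for a point in valence/arousal space."""
    if arousal >= 0:
        return "delight" if valence >= 0 else "anger"
    return "calm" if valence >= 0 else "sadness"


def optimization_step(
    signal: Dict[str, float],
    choose_variant: Callable[[str], str],
    history: List[dict],
) -> str:
    """Run inference, policy mapping, and logging for one captured signal."""
    state = infer_state(signal["valence"], signal["arousal"])  # emotion inference
    variant = choose_variant(state)                            # policy mapping
    history.append({"state": state, "variant": variant})       # kept for later tuning
    return variant                                             # passed on to rendering


history: List[dict] = []
variant = optimization_step(
    {"valence": -0.7, "arousal": 0.8},  # high arousal, negative valence
    lambda state: "calm_support" if state == "anger" else "default",
    history,
)
```

Keeping the history of state and variant pairs is what closes the loop: the measurement stage can join it with conversion outcomes to tune the policy.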

Mood-Based Video Adaptation Marketing Examples

- E-commerce Cart Recovery: If a user shows signs of frustration (repeatedly clicking a broken discount code), the recovery video features an avatar with a softened gaze and a calm, empathetic voice. The CTA shifts from "Buy Now" to "Talk to an Agent."

- SaaS Onboarding: If a user is lingering on a complex dashboard, a sentiment-responsive personalized video pops up. The avatar uses a "helpful guide" persona, speaking at a slower rate and highlighting specific UI elements on the screen.

- OTT Upsell: For a user who has just finished a binge-watching session (high delight), the system triggers a high-energy video with a celebratory avatar tone, offering a limited-time premium upgrade.

Trigger Fabric and Integration

For these workflows to succeed, they must be integrated with the existing enterprise tech stack. This includes:

- CRM/CDP Hooks: Pre-seeding the likely emotion based on the lead score or past purchase history.

- WhatsApp Business API: Using chat sentiment to trigger a personalized video response directly in the messaging thread.

- Webhooks: Delivering trigger events for web-based video adaptations with sub-second latency.
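As a rough sketch of how a CRM-derived prior and a live chat signal might be combined before a video is triggered, consider the following. The weighting scheme, the 0.7 weight, and the -0.3 threshold are assumptions chosen for illustration, not values from any platform.

```python
from typing import Optional


def blend_sentiment(prior: float, live: Optional[float],
                    live_weight: float = 0.7) -> float:
    """Combine a CRM-derived prior with a live score; both lie in [-1, 1]."""
    if live is None:
        return prior  # no live signal yet, so rely on the pre-seeded value
    return (1 - live_weight) * prior + live_weight * live


def should_send_support_video(score: float, threshold: float = -0.3) -> bool:
    """Trigger a reassurance video when blended sentiment is negative enough."""
    return score <= threshold


# Hypothetical lead: mildly negative prior, strongly negative chat message.
score = blend_sentiment(prior=-0.4, live=-0.9)
send = should_send_support_video(score)
```

Weighting the live signal more heavily than the prior reflects the idea that the current session says more about the viewer's mood than past purchase history does.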

4. Measuring the "Feel": Customer Emotion Video Analytics

In emotion AI video marketing, traditional KPIs like click-through rate (CTR) are only half the story. Enterprises are now prioritizing customer emotion video analytics to understand the "why" behind the "what."

Advanced KPI Mapping

To truly gauge the success of sentiment analysis video campaigns, marketers are tracking:

- Sentiment Shift: Does the user move from a "negative/frustrated" state at the start of the video to a "neutral/positive" state by the end?

- Valence and Arousal Distributions: Mapping the intensity and positivity of emotions across the video timeline to identify "drop-off" points.

- Branch Path Selection: In interactive videos, which emotional branch (e.g., "reassurance" vs. "directness") leads to higher conversion?

- Dwell Time on Emotional Peaks: Measuring how long users engage with specific high-emotion segments of the content.
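Two of these KPIs can be computed directly from a per-viewer valence timeline. The sketch below assumes valence is sampled at regular intervals on a [-1, 1] scale; the function names and the three-sample window are illustrative choices.

```python
from statistics import mean
from typing import Sequence


def sentiment_shift(valence: Sequence[float], window: int = 3) -> float:
    """Mean valence over the last `window` samples minus the first `window`."""
    if len(valence) < 2 * window:
        raise ValueError("timeline too short for the chosen window")
    return mean(valence[-window:]) - mean(valence[:window])


def lowest_valence_index(valence: Sequence[float]) -> int:
    """Index of the most negative sample, a candidate drop-off point."""
    return min(range(len(valence)), key=lambda i: valence[i])


# Hypothetical viewer who starts frustrated and ends mildly positive.
timeline = [-0.6, -0.5, -0.4, 0.0, 0.2, 0.3, 0.4]
shift = sentiment_shift(timeline)       # positive means sentiment improved
worst = lowest_valence_index(timeline)  # position of the emotional low point
```

A positive shift indicates the video moved the viewer toward a better state; aggregating the low-point index across viewers shows where in the timeline sentiment tends to dip.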

Dashboarding for the Enterprise

A modern emotion analytics dashboard must segment data by language, region, and device. In India, where a campaign might run in eight different languages simultaneously, it is vital to see if the "empathetic" tone in Tamil is performing as well as it is in Marathi. Solutions like Studio by TrueFan AI demonstrate ROI through these granular insights, allowing brands to attribute revenue lift directly to sentiment-aware variants.
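The language-level comparison described here reduces to a grouped conversion rate. The event fields below (`language`, `variant`, `converted`) are hypothetical names for illustration, not a real platform's schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def conversion_by_segment(events: Iterable[dict]) -> Dict[Tuple[str, str], float]:
    """Conversion rate per (language, variant) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [views, conversions]
    for event in events:
        key = (event["language"], event["variant"])
        totals[key][0] += 1
        totals[key][1] += int(event["converted"])
    return {key: conv / views for key, (views, conv) in totals.items()}


# Hypothetical events for one "empathetic" variant in Tamil and Marathi.
events = [
    {"language": "ta", "variant": "empathetic", "converted": True},
    {"language": "ta", "variant": "empathetic", "converted": False},
    {"language": "mr", "variant": "empathetic", "converted": True},
]
rates = conversion_by_segment(events)
```

The same grouping key can be extended with region or device to match the segmentation the dashboard needs.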

The Impact on CPA and LTV

By aligning the emotional tone of the video with the viewer's current state, brands are seeing a significant reduction in CPA. When a video "feels" right, the friction to convert is lowered. Over the long term, this builds stronger brand affinity, increasing Customer Lifetime Value (LTV) as users feel understood on a deeper, more human level.

5. India Enterprise Guardrails: Privacy, Safety, and the DPDP Act

As emotion AI video marketing becomes mainstream in India, governance and ethical considerations have moved to the forefront. The processing of emotional data, which is often considered sensitive, requires a robust legal and ethical framework.

Compliance with the DPDP Act 2023

The Digital Personal Data Protection (DPDP) Act of 2023 is the primary regulatory pillar for emotion AI in India. Enterprises must ensure:

- Informed Consent: Users must be explicitly informed if their emotional data (facial expressions, voice tone) is being analyzed.

- Purpose Limitation: Data collected for sentiment analysis must not be used for unrelated profiling without additional consent.

- Right to Erasure: Users must have a simple way to opt out and request the deletion of their emotional profiles.

- Data Minimization: Only the necessary signals for emotion inference should be processed, and raw biometric data should ideally be discarded after the inference is complete.
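The consent, minimization, and erasure points above can be expressed as a small gate around the inference call. This is an engineering sketch only: the `ConsentRecord` fields, the `classify` callback, and the in-memory `store` are assumptions, and the code does not by itself establish compliance with the Act.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class ConsentRecord:
    user_id: str
    emotion_analysis: bool  # explicit opt-in for emotion inference


def infer_with_minimization(
    consent: ConsentRecord,
    raw_frame: bytes,
    classify: Callable[[bytes], str],
) -> Optional[dict]:
    """Classify only with consent, and return the derived label alone."""
    if not consent.emotion_analysis:
        return None  # no consent: the frame is never analyzed
    label = classify(raw_frame)
    # Only the derived label leaves this function; the raw frame is not stored.
    return {"user_id": consent.user_id, "emotion": label}


def erase_profile(store: Dict[str, dict], user_id: str) -> bool:
    """Delete a user's stored emotional profile; True if one existed."""
    return store.pop(user_id, None) is not None
```

A hypothetical call would pass a camera frame and a model's classify function; without an opt-in the function returns `None` before the frame is ever read.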

Governance and Bias Mitigation

Emotion detection models used for customer engagement must be rigorously tested for bias. In a diverse country like India, models must be trained on datasets that include various skin tones, age groups, regional accents, and cultural expressions of emotion. Failure to do so can lead to "emotional misinterpretation," where a neutral expression in one culture is incorrectly flagged as negative, leading to inappropriate video adaptations.

Brand Safety and Content Moderation

For enterprises, brand safety is paramount. This involves:

- Real-Time Moderation: Ensuring that AI-generated avatars do not produce disallowed content or respond to user prompts in a way that violates brand guidelines.

- Watermarking: Every AI-generated video should have a digital watermark to ensure traceability and prevent the spread of deepfakes.

- Audit Trails: Maintaining logs of why specific emotional adaptations were triggered for compliance and troubleshooting.
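An audit trail entry for an adaptation decision might look like the following. The field names are hypothetical; the point is that each entry records what was detected, how confident the system was, and which policy and variant resulted, without storing any raw signal data.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def audit_entry(
    session_id: str,
    detected_state: str,
    confidence: float,
    policy_id: str,
    variant_id: str,
    now: Optional[datetime] = None,
) -> str:
    """Serialize one adaptation decision as a JSON log line."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return json.dumps(
        {
            "timestamp": timestamp,
            "session_id": session_id,
            "detected_state": detected_state,
            "confidence": confidence,
            "policy_id": policy_id,
            "variant_id": variant_id,
        },
        sort_keys=True,  # stable key order makes log lines easier to compare
    )


line = audit_entry("sess-42", "confusion", 0.82, "demo-policy-v1", "explainer-15s")
```

Writing one JSON object per line keeps the log easy to search when a compliance or troubleshooting question comes up about a specific session.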

6. Industry Blueprints: Use-Cases for D2C, BFSI, and B2B

The application of sentiment-driven video personalization varies significantly across industries. Below are the blueprints for the most successful implementations in the Indian market for 2026.

D2C E-commerce: The Cart Recovery Specialist

- The Problem: High cart abandonment due to shipping costs or technical glitches.

- The Solution: A mood-based video adaptation trigger. If the system detects "frustration" (rage clicks), it sends a WhatsApp video featuring a localized avatar.

- The Adaptation: The avatar uses a calm, reassuring tone, offers a small discount, and explains the return policy.

- Target Outcome: 15–30% uplift in checkout completion; neutralization of negative sentiment.

BFSI: Trust-Based Lead Generation

- The Problem: Low trust and high drop-off in complex insurance or loan applications.

- The Solution: Sentiment-responsive personalized video for disclosures and lead nurturing.

- The Adaptation: The avatar maintains a "neutral-trust" tone, steady gaze, and low arousal. If the user pauses for a long time (indicating confusion), the avatar proactively offers to explain the current clause in simpler terms.

- Target Outcome: 20% increase in watch-through rates; 10% lift in lead-to-meeting conversion.

B2B ABM: The Executive Outreach Engine

- The Problem: Generic outreach emails being ignored by C-suite executives.

- The Solution: High-fidelity empathy AI marketing videos tailored to the prospect's recent public sentiment (e.g., LinkedIn posts).

- The Adaptation: The avatar (often a digital twin of the sales rep) acknowledges specific industry challenges with an "authoritative yet empathetic" tone. The CTA is dynamically adjusted based on the prospect's interaction with the video.

- Target Outcome: 2x higher reply rates compared to static video outreach.

Telecom & OTT: The ARPU Accelerator

- The Problem: Stagnant Average Revenue Per User (ARPU) and high churn.

- The Solution: Sentiment-aware upsell videos triggered after a positive customer service interaction or a binge-watching event.

- The Adaptation: An "excited" tone, celebratory micro-expressions from the avatar, and a time-bound "special reward" offer.

- Target Outcome: 10–20% ARPU lift in test cohorts.

7. Conclusion and FAQ

Emotion AI video marketing represents a fundamental shift in how Indian brands communicate in 2026. By moving beyond the "what" of a message and focusing on "how" it is delivered (emotionally, linguistically, and culturally), enterprises can build deeper engagement. The technology to detect, analyze, and respond to human emotion is available today, and for Indian brands it is a key to competing in the 2026 digital economy.

Frequently Asked Questions

1. What is emotion AI video marketing and why is it critical in India for 2026?

Emotion AI video marketing refers to the use of artificial intelligence to detect a viewer's emotional state and dynamically adapt video content, including avatar expressions, voice tone, and script, in response. It is critical in India in 2026 because of the massive shift toward regional language consumption (60%+) and the need for brands to provide more human-centric, empathetic digital experiences to stand out in a crowded market.

2. How do sentiment analysis video campaigns work with avatars and TTS?

These campaigns use a multimodal stack. First, sentiment analysis engines decode user signals (text, voice, or behavior). This data is then sent to a platform like Studio by TrueFan AI, which generates a video in which the avatar's micro-expressions and the TTS voice's prosody (pitch, speed, tone) are aligned with the desired emotional response.

3. Is emotion detection legal in India under the DPDP Act?

Yes, it is legal provided that enterprises adhere to the DPDP Act 2023 guidelines. This includes obtaining explicit, informed consent from the user, providing a clear opt-out mechanism, and ensuring data minimization. Brands must be transparent about what emotional signals are being tracked and for what purpose.

4. What is the difference between YourVoic's emotional TTS and a full-funnel platform?

YourVoic's emotional TTS is a specialized tool for generating high-quality, emotionally expressive speech. However, a full-funnel platform like Studio by TrueFan AI provides the entire orchestration layer, including the photorealistic avatars, 175+ language support, real-time APIs for WhatsApp and CRM, and the analytics needed to measure emotional impact.

5. Can real-time sentiment video optimization really improve ROI?

Absolutely. Solutions like Studio by TrueFan AI demonstrate ROI through significantly higher engagement rates (2–3x) and lower CPAs. By delivering the right emotional message at the right time, brands reduce friction in the buyer's journey, leading to higher conversion rates and improved long-term customer loyalty.

6. How do I start a pilot for sentiment-driven video personalization?

Most enterprises begin with a 30–45 day pilot focusing on a high-impact use case like D2C cart recovery or B2B executive outreach. The pilot typically involves selecting 2–3 languages, defining the emotional triggers (e.g., frustration vs. delight), and integrating the video generation API with a messaging platform like WhatsApp.

7. How does the system handle different Indian accents and dialects?

The models behind advanced emotion-aware avatar videos are trained on diverse datasets that include a wide range of Indian accents and regional nuances. This helps keep voice-modulation sentiment analysis accurate whether the user is speaking in "Hinglish," "Tanglish," or a pure regional dialect.

Frequently Asked Questions

How does emotion AI adapt videos in real time?

A multimodal engine infers the viewer's affect from signals (behavioral, voice, or optional video). A policy layer then maps the detected state to changes in avatar micro-expressions, TTS prosody, script branches, and CTAs, updating the experience with sub-second latency.

What tech stack do I need for sentiment-driven video?

You need emotion-aware avatars, emotional TTS/voice cloning, and a decisioning/orchestration engine connected to your CRM/CDP, messaging (e.g., WhatsApp), and webhooks for low-latency updates. Platforms like Studio by TrueFan AI unify these layers.

How does the DPDP Act impact emotion AI deployments?

Brands must secure informed consent, state clear purposes, practice data minimization, and enable erasure. Bias testing, watermarking, and audit trails are recommended to ensure fairness, safety, and compliance.

Which industries benefit most in India?

D2C e-commerce (cart recovery), BFSI (trust-building and disclosures), B2B ABM (executive outreach), and Telecom/OTT (ARPU uplift) see the strongest ROI from emotion-aware, multilingual personalization.