Emotion detection AI video marketing in 2026: real-time sentiment, AI voice emotion control India, and enterprise-grade personalization

Key Takeaways

- Real-time emotion detection across facial, voice, and text signals enables adaptive video experiences that optimize engagement in 2026.

- Enterprises need a full-stack approach: Capture → Classify → Decision → Generate → Deliver → Learn with strict latency and compliance targets.

- India-first execution requires multilingual tone control, cultural cues, and DPDP-compliant consent workflows.

- Platforms like Studio by TrueFan AI power enterprise-grade personalization with 175+ languages, licensed avatars, and robust security.

In the rapidly evolving landscape of digital engagement, emotion detection AI video marketing has emerged as a defining frontier for enterprise growth. As traditional video content faces diminishing returns due to “content blindness,” leading brands are pivoting toward adaptive, emotionally intelligent experiences. In 2026, the shift from static personalization to real-time sentiment video optimization is no longer a luxury; it is a core requirement for maintaining competitive advantage in a market where empathy is the new currency of conversion.

Why emotion detection AI video marketing matters for enterprises in 2026

The marketing paradigm has shifted. In 2026, leadership teams are no longer satisfied with knowing what was watched; they demand attribution for why content resonated. This is where emotion detection AI video marketing bridges the gap. By applying multimodal AI—analyzing facial expressions, voice prosody, and text sentiment—enterprises can now detect a viewer’s emotional state and adapt content delivery in real-time to maximize engagement.

The business value chain for 2026 is built on six pillars: creative strategy, signal capture, classification models, personalization variants, multi-channel delivery, and the continuous learning loop. For enterprises operating at scale, particularly in the diverse Indian market, this means moving beyond generic messaging to hyper-localized, sentiment-driven interactions.

Key 2026 Market Insights:

- The global Emotion AI market is projected to reach $13.8 billion by 2026, with India accounting for a significant 12% share due to rapid digital transformation (Source: Kantar Marketing Trends 2026).

- Enterprises utilizing real-time sentiment optimization report a 42% increase in View-Through Rate (VTR) compared to static A/B testing.

- 74% of CMOs in the BFSI and E-commerce sectors have prioritized “empathy-at-scale” as a top-three strategic initiative for 2026.

- Multimodal AI adoption in Indian enterprises is growing at a CAGR of 55%, driven by the need for multilingual sentiment accuracy.

- Voice modulation in service-oriented videos has been shown to reduce customer churn by 18% by matching the brand’s tone to the user’s perceived frustration or urgency.

Platforms like Studio by TrueFan AI enable enterprises to bridge this gap by providing the infrastructure needed to generate high-quality, emotionally resonant video content that can be localized across 175+ languages instantly.

Sentiment analysis video content 2026 — signals, models, and thresholds

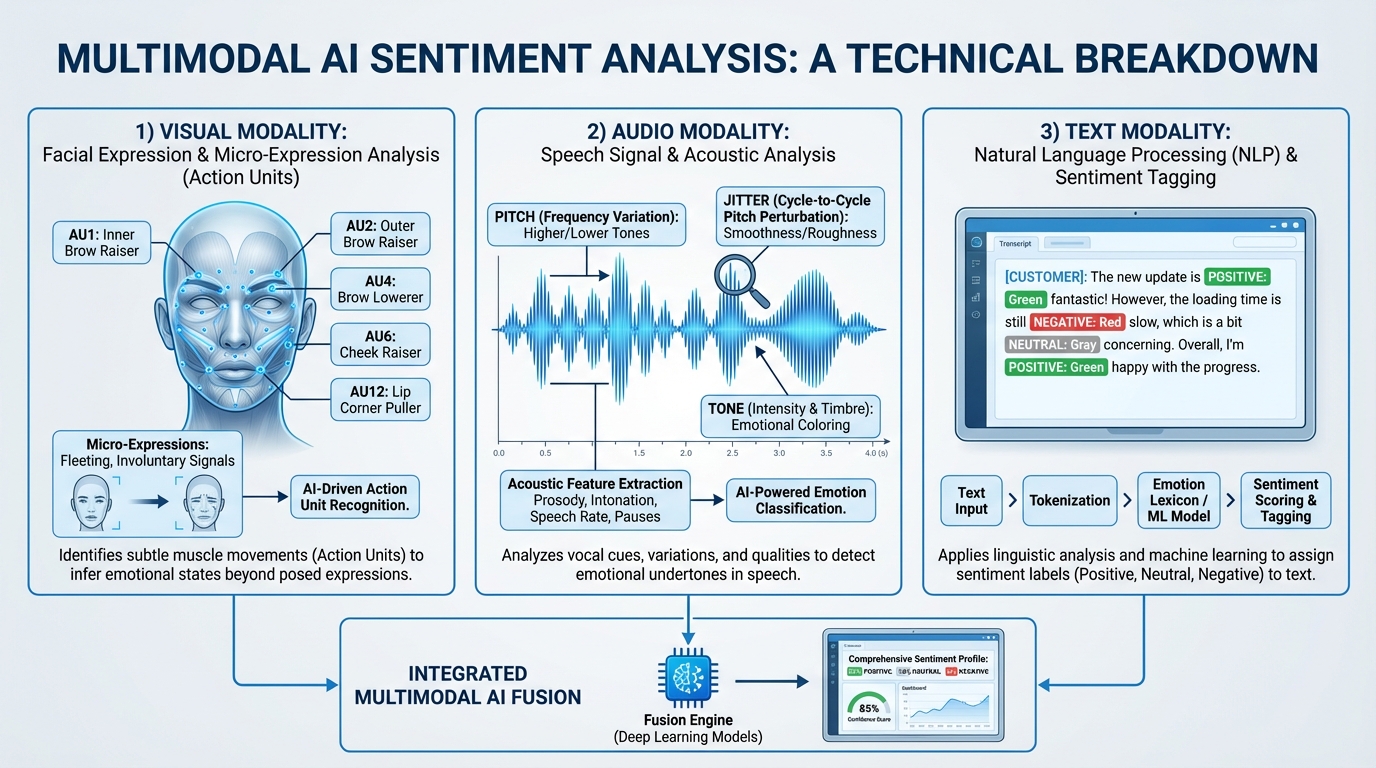

To execute a successful strategy for sentiment analysis of video content in 2026, technical teams must understand the multimodal inputs that drive emotional intelligence. Early-stage sentiment analysis relied solely on text. Systems in 2026 instead fuse several signal types:

1. Facial Signals and Micro-expressions

Modern models sample video frames at 10–30 fps and use transformer-based backbones to detect Action Units (AUs). AUs are the facial muscle movements defined in the Facial Action Coding System (FACS). For instance:

- AU1 (Inner Brow Raiser): Often signals surprise or concern.

- AU12 (Lip Corner Puller): The primary indicator of joy or satisfaction.

- AU4 (Brow Lowerer): Signals confusion or concentration.

- AU14 (Dimpler): Can indicate skepticism or micro-contempt.
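As a rough illustration, the AU cues above can be expressed as a lookup that turns per-frame AU intensities into candidate interpretations. This is a minimal sketch with two assumptions: an upstream detector (hypothetical, not shown) emits intensities on a 0–1 scale, and 0.5 works as an activation cut-off. Production models learn these associations rather than hard-coding them, and a single AU is only weak evidence of an emotion.

```python
from typing import Dict, List

# Heuristic cues taken from the AU list above (illustrative, not a validated model).
AU_CUES: Dict[str, str] = {
    "AU1": "surprise_or_concern",          # Inner Brow Raiser
    "AU4": "confusion_or_concentration",   # Brow Lowerer
    "AU12": "joy_or_satisfaction",         # Lip Corner Puller
    "AU14": "skepticism_or_contempt",      # Dimpler
}


def interpret_frame(au_intensity: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Return cues for known AUs at or above the (assumed) threshold, strongest first."""
    active = [
        (intensity, AU_CUES[au])
        for au, intensity in au_intensity.items()
        if au in AU_CUES and intensity >= threshold
    ]
    return [cue for _, cue in sorted(active, reverse=True)]


if __name__ == "__main__":
    # AU25 is not in the lookup, so it is ignored; AU12 is below the threshold.
    print(interpret_frame({"AU4": 0.8, "AU12": 0.1, "AU1": 0.6, "AU25": 0.9}))
```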

2. Voice Signals: Prosody and Paralinguistics

Voice modulation sentiment analysis has become highly sophisticated. Beyond the words spoken, AI now analyzes:

- Fundamental Frequency (F0): Pitch variations that indicate arousal.

- Jitter and Shimmer: Micro-variations in frequency and amplitude that signal stress or emotional instability.

- Speech Rate and MFCCs: Speech rate (for example, syllables per second) tracks how fast the person is talking. Mel-frequency cepstral coefficients (MFCCs) capture the spectral “texture” of the voice. Together they allow a more nuanced interpretation of the speaker’s (or viewer’s) state.
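The perturbation measures above have simple definitions. The sketch below computes "local" jitter and shimmer as commonly defined, for example in Praat: the mean absolute difference between consecutive glottal periods (or peak amplitudes), divided by the mean. It assumes a pitch tracker (not shown) has already extracted per-cycle periods and amplitudes. MFCC extraction normally relies on an audio library and is omitted here.

```python
from statistics import fmean
from typing import Sequence


def _local_perturbation(values: Sequence[float]) -> float:
    """Mean absolute difference between consecutive values, divided by the mean value."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return fmean(diffs) / fmean(values)


def local_jitter(periods_s: Sequence[float]) -> float:
    """Relative cycle-to-cycle variation in glottal period (unitless)."""
    return _local_perturbation(periods_s)


def local_shimmer(peak_amplitudes: Sequence[float]) -> float:
    """Relative cycle-to-cycle variation in peak amplitude (unitless)."""
    return _local_perturbation(peak_amplitudes)


def mean_f0_hz(periods_s: Sequence[float]) -> float:
    """Fundamental frequency estimated as 1 / mean period."""
    return 1.0 / fmean(periods_s)


def speech_rate(syllable_count: int, duration_s: float) -> float:
    """Syllables per second over an utterance."""
    return syllable_count / duration_s
```

For example, periods of 5.0, 5.1, 4.9 and 5.0 ms give a mean F0 of 200 Hz and a local jitter of about 2.7%. Which values indicate stress depends on the speaker and the recording conditions.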

3. Text and Interaction Telemetry

Multilingual sentiment for Indic languages is critical. Systems now process transcripts in real-time to flag intent, toxicity, and risk. This is paired with behavioral proxies: did the user hover over a specific product during a high-arousal moment? Did they replay a segment where the AI avatar used an empathetic tone?
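The behavioral proxy described above amounts to joining interaction events against the emotion timeline. Below is a minimal sketch under three assumptions: arousal arrives as (timestamp, score) samples, hover events carry start and end times, and 0.7 counts as "high arousal". The `HoverEvent` type and the threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class HoverEvent:
    product_id: str
    start_s: float
    end_s: float


def high_arousal_windows(
    samples: Sequence[Tuple[float, float]], threshold: float = 0.7
) -> List[Tuple[float, float]]:
    """Merge consecutive (timestamp, arousal) samples at or above threshold into windows.

    Window boundaries are the first and last qualifying sample timestamps.
    """
    windows: List[Tuple[float, float]] = []
    start: Optional[float] = None
    prev: Optional[float] = None
    for t, arousal in samples:
        if arousal >= threshold:
            if start is None:
                start = t
            prev = t
        elif start is not None:
            windows.append((start, prev))
            start = None
    if start is not None:
        windows.append((start, prev))
    return windows


def hovers_during_high_arousal(
    hovers: Sequence[HoverEvent], windows: Sequence[Tuple[float, float]]
) -> List[str]:
    """Return product IDs whose hover interval overlaps any high-arousal window."""
    return [
        h.product_id
        for h in hovers
        if any(h.start_s <= w_end and h.end_s >= w_start for w_start, w_end in windows)
    ]
```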

Model Fusion and Confidence Thresholds

The dominant technical approach in 2026 is “late fusion.” Each modality (face, voice, text) is scored by its own classifier, and a weighted ensemble combines the outputs. Enterprises also need to avoid “jitter,” where the AI rapidly switches tones. To do this, they apply confidence thresholds (typically 0.7+) and smooth scores over 2–5 second windows. This ensures that a single blink or a burst of background noise doesn’t trigger an inappropriate content shift.

Source: IndiaAI on Emotion AI and Entropik modalities
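Late fusion, thresholding and smoothing fit together in a few lines. Below is a minimal sketch built on three assumptions:

- Each modality already outputs a probability per emotion label.
- The modality weights are hypothetical.
- One fused sample arrives per second, so `window_size=3` approximates a 3-second window.

The state holds its previous label until a smoothed score clears the threshold. This is what stops a single noisy frame from flipping the tone.

```python
from collections import deque
from typing import Deque, Dict, Mapping, Optional

Scores = Dict[str, float]


def late_fusion(modality_scores: Mapping[str, Scores], weights: Mapping[str, float]) -> Scores:
    """Weighted average of per-modality class probabilities.

    Modalities without a positive weight are skipped, and the weights are
    renormalised over the modalities actually present in this sample.
    """
    fused: Scores = {}
    total_weight = 0.0
    for modality, scores in modality_scores.items():
        w = weights.get(modality, 0.0)
        if w <= 0:
            continue
        total_weight += w
        for label, p in scores.items():
            fused[label] = fused.get(label, 0.0) + w * p
    if total_weight == 0:
        return {}
    return {label: v / total_weight for label, v in fused.items()}


class SmoothedEmotionState:
    """Sliding-window average of fused scores; switches label only above a confidence threshold."""

    def __init__(self, window_size: int = 3, threshold: float = 0.7) -> None:
        self.history: Deque[Scores] = deque(maxlen=window_size)
        self.threshold = threshold
        self.current: Optional[str] = None

    def update(self, fused: Scores) -> Optional[str]:
        self.history.append(fused)
        labels = {label for scores in self.history for label in scores}
        n = len(self.history)
        averaged = {
            label: sum(scores.get(label, 0.0) for scores in self.history) / n
            for label in labels
        }
        if averaged:
            best = max(averaged, key=averaged.get)
            if averaged[best] >= self.threshold:
                self.current = best  # confident enough to switch
        return self.current  # otherwise keep the previous label
```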

Tech stack blueprint for emotion AI video campaigns

Building a robust infrastructure for emotion AI video campaigns requires a seamless flow from data capture to content generation. The reference architecture for 2026 follows a modular approach:

Capture → Classify → Decision → Generate → Deliver → Learn

- Capture: Real-time event streaming from the user’s device (camera/mic/interaction).

- Classify: Emotion models process the multimodal stream.

- Decision: A rules engine or multi-armed bandit determines the best content response (e.g., “If confusion > 0.8, trigger slower-paced explanatory video”).

- Generate/Adapt: This is where voice emotion API integration and emotional range voice cloning come into play. The system dynamically adjusts the script, the avatar’s micro-expressions, and the voice tone.

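- Deliver: Content is pushed via CDN or direct integrations like the WhatsApp Business API.

- Learn: Engagement and sentiment outcomes are fed back into the classification models and the decision engine, closing the continuous learning loop.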
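The Decision stage can combine hard rules with a bandit. Below is a minimal sketch with these assumptions:

- Hard rules (here, the confusion > 0.8 example) always take precedence.
- Otherwise an epsilon-greedy bandit picks among content variants based on observed rewards, such as 1.0 for a completed view.
- The variant names and epsilon value are hypothetical.

Epsilon-greedy is only one simple bandit strategy; UCB or Thompson sampling are common alternatives.

```python
import random
from typing import Dict, List, Mapping, Optional


class EpsilonGreedyBandit:
    """Epsilon-greedy multi-armed bandit with one arm per content variant."""

    def __init__(self, arms: List[str], epsilon: float = 0.1, seed: Optional[int] = None) -> None:
        self.epsilon = epsilon
        self.counts: Dict[str, int] = {arm: 0 for arm in arms}
        self.values: Dict[str, float] = {arm: 0.0 for arm in arms}
        self.rng = random.Random(seed)

    def select(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.counts))  # explore a random variant
        return max(self.values, key=self.values.get)  # exploit the best mean reward

    def update(self, arm: str, reward: float) -> None:
        """Incrementally update the mean reward for the shown variant."""
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


def decide(emotions: Mapping[str, float], bandit: EpsilonGreedyBandit) -> str:
    """Apply hard rules first; otherwise let the bandit choose a variant."""
    if emotions.get("confusion", 0.0) > 0.8:
        return "slow_explainer"
    return bandit.select()
```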

Studio by TrueFan AI’s 175+ language support and AI avatars provide the “Generate” layer of this stack. By offering licensed, photorealistic digital twins of real influencers, the platform ensures that the emotional delivery is not just technically accurate but culturally resonant.

Latency and Security Targets

- Voice Modulation Latency: Sub-500 ms for responsive, live-agent style interactions.

- Variant Generation: < 2 minutes for on-demand, personalized video renders at scale.

- Security: ISO 27001 certification and a SOC 2 attestation are non-negotiable for enterprise deployments in 2026. They provide independently audited evidence that biometric data is protected by encryption, access controls, and audit trails.
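A latency target is only useful if it is checked against measurements. Below is a minimal sketch with these assumptions:

- Per-stage latency samples are logged per request, aligned by index across stages.
- The stage names are hypothetical.
- The sub-500 ms voice-modulation target is judged on the 95th percentile.

The sketch sums each request's stages and reads off the nearest-rank 95th percentile end-to-end.

```python
import math
from typing import Dict, Sequence, Tuple

VOICE_MODULATION_BUDGET_MS = 500.0  # "sub-500 ms" target from the text


def percentile_nearest_rank(samples_ms: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not samples_ms:
        raise ValueError("no latency samples")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def check_budget(
    stage_samples_ms: Dict[str, Sequence[float]],
    budget_ms: float = VOICE_MODULATION_BUDGET_MS,
    pct: float = 95.0,
) -> Tuple[float, bool]:
    """Sum per-stage latencies request by request, then test the end-to-end percentile."""
    per_request = [sum(stages) for stages in zip(*stage_samples_ms.values())]
    p = percentile_nearest_rank(per_request, pct)
    return p, p < budget_ms
```

Summing per request, rather than adding up per-stage percentiles, keeps the end-to-end figure honest. Slow stages rarely all spike on the same request.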

Building sentiment-driven video personalization (creative system)

The core of sentiment-driven video personalization is the creative matrix. Instead of a single video, brands now create a “content universe” of variants designed to match different emotional states.

The Creative Matrix Example:

| Detected Emotion | Content Response Strategy | Voice Tone Taxonomy |
|---|---|---|
| Confusion | Slower pace, on-screen clarifiers, simplified script. | Empathetic Reassurance |
| Excitement | Faster pace, urgency-based CTAs, vibrant overlays. | Celebratory Excitement |
| Skepticism | Data-heavy evidence, authoritative persona, calm delivery. | Authoritative Clarity |
| Frustration | Immediate solutioning, “human-first” language, soft visuals. | Calm Confidence |
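In code, the creative matrix is simply a lookup from the detected emotion to a variant spec. Below is a minimal sketch that copies the table rows verbatim. The fallback response (used when no emotion clears the confidence threshold) is a hypothetical addition.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class CreativeResponse:
    strategy: str
    voice_tone: str


# Rows copied from the creative matrix table.
CREATIVE_MATRIX: Dict[str, CreativeResponse] = {
    "confusion": CreativeResponse("Slower pace, on-screen clarifiers, simplified script", "Empathetic Reassurance"),
    "excitement": CreativeResponse("Faster pace, urgency-based CTAs, vibrant overlays", "Celebratory Excitement"),
    "skepticism": CreativeResponse("Data-heavy evidence, authoritative persona, calm delivery", "Authoritative Clarity"),
    "frustration": CreativeResponse("Immediate solutioning, human-first language, soft visuals", "Calm Confidence"),
}

# Hypothetical fallback when no emotion is confidently detected.
DEFAULT_RESPONSE = CreativeResponse("Standard brand cut", "Neutral")


def respond_to(emotion: Optional[str]) -> CreativeResponse:
    """Look up the variant for a detected emotion, falling back to the default cut."""
    if emotion is None:
        return DEFAULT_RESPONSE
    return CREATIVE_MATRIX.get(emotion, DEFAULT_RESPONSE)
```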

Emotion-aware avatar videos India

In the Indian context, localization goes beyond translation. It requires “transcreation”—adapting the emotional cues to regional norms. For example, an authoritative tone in a Tamil-speaking market may require different prosodic markers than the same tone in a Punjabi-speaking market. Using licensed influencer avatars allows brands to leverage existing trust and cultural familiarity, making the AI-driven interaction feel authentic rather than robotic.

Operationalizing this at scale involves using templates with programmatic placeholders for names, offers, and regional dialects. Automated subtitling and QA scripts ensure that the generated content remains within brand safety guardrails.

Source: TrueFan AI on Indian voiceover personalization
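The template-plus-QA workflow can be sketched with Python's standard `string.Template` class. Below is a minimal sketch with these assumptions:

- One script template per locale is hypothetical.
- The example phrasing is illustrative.
- `safe_substitute` leaves missing placeholders visible, so a QA pass can catch them before rendering.
- The banned-terms list stands in for real brand-safety rules.

```python
import re
from string import Template
from typing import Dict, Iterable, List, Mapping

# Hypothetical per-locale script templates with placeholders for name and offer.
TEMPLATES: Dict[str, Template] = {
    "en-IN": Template("Hi $first_name, your $offer is waiting for you."),
    "hi-IN": Template("Namaste $first_name ji, aapka $offer aapka intezaar kar raha hai."),
}

# Matches anything that still looks like a $placeholder or ${placeholder}.
_PLACEHOLDER = re.compile(r"\$\{?[_a-zA-Z]\w*")


def render_script(locale: str, fields: Mapping[str, str]) -> str:
    """Fill placeholders; missing fields are left in place for QA to flag."""
    return TEMPLATES[locale].safe_substitute(fields)


def qa_issues(script: str, banned_terms: Iterable[str]) -> List[str]:
    """Brand-safety checks run before a variant is sent for rendering."""
    issues: List[str] = []
    if _PLACEHOLDER.search(script):
        issues.append("unfilled placeholder")
    lowered = script.lower()
    issues.extend(f"banned term: {term}" for term in banned_terms if term.lower() in lowered)
    return issues
```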

India-first execution — AI voice emotion control India and localization at scale

Executing AI voice emotion control India requires a deep understanding of the country’s linguistic diversity. By 2026, enterprise-grade systems must support at least the top 10 Indic languages with high-fidelity emotional range.

Language Coverage and Tone Control

Enterprises are now testing “tone tags” within their scripts. A command like <tone="empathetic_hindi"> or <tone="authoritative_marathi"> allows the AI to adjust pitch, energy, and pace to match the cultural expectations of that specific demographic.
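The `<tone="…">` syntax above is not standard markup, so a pipeline would need to translate it into something a TTS engine understands. One common target is the W3C SSML `<prosody>` element, with its `pitch`, `rate` and `volume` attributes. Below is a minimal sketch with these assumptions:

- Each tagged segment is closed with `</tone>`.
- Tags follow a `style_language` pattern.
- The prosody presets and the language table are hypothetical.
- Engines differ in how much SSML they honor.

```python
import re
from typing import List
from xml.sax.saxutils import escape

# Hypothetical presets; real values would be tuned per voice and language.
STYLE_PROSODY = {
    "empathetic": {"pitch": "-5%", "rate": "90%", "volume": "soft"},
    "authoritative": {"pitch": "-10%", "rate": "95%", "volume": "loud"},
    "celebratory": {"pitch": "+10%", "rate": "110%", "volume": "loud"},
}
LANGUAGE_CODES = {"hindi": "hi-IN", "marathi": "mr-IN", "tamil": "ta-IN", "punjabi": "pa-IN"}

TONE_TAG = re.compile(
    r'<tone="(?P<style>[a-z]+)_(?P<language>[a-z]+)">(?P<text>.*?)</tone>', re.DOTALL
)


def tone_tags_to_ssml(script: str) -> List[str]:
    """Convert each tone-tagged segment into a standalone SSML <speak> document."""
    documents = []
    for match in TONE_TAG.finditer(script):
        prosody = STYLE_PROSODY[match["style"]]
        lang = LANGUAGE_CODES[match["language"]]
        attrs = " ".join(f'{k}="{v}"' for k, v in prosody.items())
        documents.append(
            f'<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="{lang}">'
            f"<prosody {attrs}>{escape(match['text'])}</prosody></speak>"
        )
    return documents
```

Escaping the text keeps characters such as `&` or `<` in a script from producing malformed SSML.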

Cultural Cues and Distribution

- Politeness Markers: In many Indian languages, the level of formality (e.g., Tu vs. Tum vs. Aap in Hindi) must align with the detected emotional state.

- WhatsApp Integration: Given India’s reliance on WhatsApp, delivering sentiment-driven videos directly through the WhatsApp API is the most effective way to drive 1:1 engagement.

- Consent by Design: Under the DPDP Act 2023, capturing explicit consent for processing biometric data (like facial expressions for emotion detection) is mandatory. Enterprises must implement “Consent-by-Design” workflows that are transparent and easily revocable.

Source: DPDP Act 2023 Official Guidance
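A consent-by-design workflow boils down to a gate that runs before any camera or microphone capture. Below is a minimal sketch of that gate. It assumes consent is recorded per user and per purpose, with a reference to the notice version the user saw. The purpose names and fields are hypothetical, and the sketch illustrates the workflow rather than the Act's specific requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Optional, Tuple


@dataclass
class ConsentRecord:
    granted_at: datetime
    notice_version: str  # which consent notice the user actually saw
    revoked_at: Optional[datetime] = None

    @property
    def active(self) -> bool:
        return self.revoked_at is None


class ConsentLedger:
    """Per-user, per-purpose consent that can be withdrawn as easily as it was given."""

    def __init__(self) -> None:
        self._records: Dict[Tuple[str, str], ConsentRecord] = {}

    def grant(self, user_id: str, purpose: str, notice_version: str) -> None:
        self._records[(user_id, purpose)] = ConsentRecord(datetime.now(timezone.utc), notice_version)

    def revoke(self, user_id: str, purpose: str) -> None:
        record = self._records.get((user_id, purpose))
        if record and record.active:
            record.revoked_at = datetime.now(timezone.utc)  # keep the record for audit

    def allows(self, user_id: str, purpose: str) -> bool:
        record = self._records.get((user_id, purpose))
        return bool(record and record.active)


def start_emotion_capture(ledger: ConsentLedger, user_id: str) -> bool:
    """Only start camera/mic emotion detection with active, purpose-specific consent."""
    return ledger.allows(user_id, "emotion_detection")
```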

Measurement — customer emotion video analytics and ROI

To justify the investment in emotion detection AI video marketing, enterprises must move toward customer emotion video analytics. This measurement layer combines sentiment trajectories with traditional business outcomes.

Core Metrics for 2026

- Emotion Lift: The delta between the user’s starting emotional state and their state after viewing the video.

- Creative Elasticity: How well a specific emotional variant performs across different demographic segments before performance decays.

- Revenue Per View (RPV): Attributing specific sales to the emotional resonance of a personalized video.

- LTV Uplift: Measuring the long-term loyalty of customers who interact with emotionally intelligent brand assets.
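Three of the metrics above reduce to simple arithmetic once the inputs exist. Below is a minimal sketch with these assumptions:

- Sentiment is scored on a common scale (for example, valence in [-1, 1]) before and after viewing.
- Revenue attribution is done elsewhere.
- LTV uplift is measured against a control group.

Creative Elasticity has no standard formula, so it is left out.

```python
from statistics import fmean
from typing import Sequence, Tuple


def emotion_lift(pre_post: Sequence[Tuple[float, float]]) -> float:
    """Mean change in sentiment score from before to after viewing."""
    return fmean([post - pre for pre, post in pre_post])


def revenue_per_view(attributed_revenue: float, views: int) -> float:
    """Attributed revenue divided by views (0.0 when there are no views)."""
    return attributed_revenue / views if views else 0.0


def ltv_uplift(treated_ltv: Sequence[float], control_ltv: Sequence[float]) -> float:
    """Relative LTV difference between viewers of emotion-aware variants and a control group."""
    control = fmean(control_ltv)
    return (fmean(treated_ltv) - control) / control
```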

Solutions like Studio by TrueFan AI demonstrate ROI through significantly reduced production costs and higher conversion rates, allowing brands to test hundreds of emotional variants for the cost of a single traditional video shoot. By integrating these metrics into a centralized dashboard, Marketing Directors can see real-time data on which emotional “hooks” are driving the most value.

Source: TrueFan AI Video Personalization ROI Metrics

Governance — AI emotional intelligence marketing with security and compliance

As we embrace AI emotional intelligence marketing, governance becomes the bedrock of consumer trust. The 2026 regulatory environment, particularly in India, is stringent regarding the use of AI and biometric data.

Privacy and the DPDP Act 2023

- Capture granular consent for “emotional profiling.”

- Ensure purpose limitation (data used for marketing cannot be repurposed for credit scoring, for example).

- Implement data minimization and strict retention policies.
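Purpose limitation, minimization and retention can be enforced at the data-access layer. Below is a minimal sketch with these assumptions:

- Each stored record keeps only the derived emotion label, not raw frames or audio.
- Each record is tagged with the purpose it was collected for.
- The 30-day retention period is hypothetical.

Queries for any other purpose simply return nothing.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List

RETENTION = timedelta(days=30)  # hypothetical policy value


@dataclass(frozen=True)
class EmotionRecord:
    user_id: str
    purpose: str             # purpose stated at collection time
    captured_at: datetime
    emotion_label: str       # minimised: derived label only, no raw biometrics


def usable_for(records: Iterable[EmotionRecord], purpose: str, now: datetime) -> List[EmotionRecord]:
    """Return only records collected for this purpose and still within retention."""
    return [r for r in records if r.purpose == purpose and now - r.captured_at <= RETENTION]


def purge_expired(records: Iterable[EmotionRecord], now: datetime) -> List[EmotionRecord]:
    """Drop every record older than the retention period, regardless of purpose."""
    return [r for r in records if now - r.captured_at <= RETENTION]
```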

Deepfake and Likeness Controls

To prevent the misuse of AI, enterprises should only use licensed avatars and voices. Watermarking all AI-generated content is fast becoming standard practice. It provides a clear audit trail and ensures transparency with the end-user.
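The audit-trail idea can be illustrated with a signed disclosure manifest that travels with each rendered video. Note that this is metadata-level provenance, not an invisible in-signal watermark. Production systems would more likely use a dedicated watermarking service or C2PA Content Credentials. The sketch assumes a shared HMAC key (hypothetical, and belonging in a key-management system) and an avatar license ID taken from the likeness contract.

```python
import hashlib
import hmac
import json
from typing import Any, Dict

SIGNING_KEY = b"replace-with-a-managed-secret"  # hypothetical; store real keys in a KMS


def _sign(payload: Dict[str, Any]) -> str:
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, body, hashlib.sha256).hexdigest()


def build_manifest(video_bytes: bytes, avatar_license_id: str, model: str) -> Dict[str, Any]:
    """Disclosure record bound to the exact video bytes via a SHA-256 hash."""
    payload = {
        "ai_generated": True,
        "disclosure": "This video was generated using AI.",
        "avatar_license_id": avatar_license_id,
        "model": model,
        "content_sha256": hashlib.sha256(video_bytes).hexdigest(),
    }
    return {**payload, "signature": _sign(payload)}


def verify_manifest(video_bytes: bytes, manifest: Dict[str, Any]) -> bool:
    """Reject if the video was altered or any manifest field was edited."""
    payload = {k: v for k, v in manifest.items() if k != "signature"}
    if payload.get("content_sha256") != hashlib.sha256(video_bytes).hexdigest():
        return False
    return hmac.compare_digest(_sign(payload), manifest.get("signature", ""))
```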

Vendor Diligence Checklist

- Does the vendor provide a documented Data Protection Impact Assessment (DPIA)?

- Are the models tested for bias across different Indian ethnicities and age groups?

- Is there a “walled garden” moderation system to prevent the generation of restricted content?

Source: IT Rules 2021 Update on AI Governance

Implementation playbook — 90-day pilot and RFP checklist

For enterprises ready to scale real-time sentiment video optimization, a structured 90-day pilot is the recommended path to success.

The 90-Day Blueprint

- Weeks 1–2 (Foundation): Identify a high-impact use case (e.g., abandoned cart recovery or high-value lead nurturing). Map out the consent workflows and assemble your initial tone taxonomy.

- Weeks 3–4 (Integration): Set up your voice emotion API integration and event capture. Build 3–5 emotional variants for your chosen segment using licensed avatars.

- Weeks 5–8 (Rollout): Run a controlled A/B test on 10–20% of your audience. Monitor the real-time sentiment loops and iterate on the creative variants weekly.

- Weeks 9–12 (Optimization): Scale the winning variants to 100% of the segment. Finalize your ROI analysis and prepare the governance documentation for full enterprise rollout.
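A controlled test on 10–20% of the audience needs stable assignment, so a user sees the same arm on every visit. Below is a minimal sketch of deterministic hash bucketing. The experiment name and the 10,000-bucket resolution are assumptions. Treatment is defined as "bucket below the threshold," so raising the percentage from 15% to 100% in weeks 9–12 keeps every original treatment user in treatment.

```python
import hashlib


def bucket(user_id: str, experiment: str, buckets: int = 10_000) -> int:
    """Map a user to a stable bucket for a given experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def assign(user_id: str, experiment: str, treatment_pct: float) -> str:
    """Return 'treatment' for roughly treatment_pct percent of users, else 'control'."""
    return "treatment" if bucket(user_id, experiment) < treatment_pct * 100 else "control"
```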

RFP Criteria for 2026

When selecting a partner for sentiment-driven video personalization, prioritize:

- API/SDK Maturity: Can the platform integrate with your existing CRM and analytics stack?

- Indic Language Depth: Does the voice synthesis support regional nuances and dialects?

- Security Standards: ISO 27001, SOC 2, and DPDP compliance are mandatory.

- Latency SLAs: Ensure the platform can handle real-time or near-real-time rendering at your required scale.

Conclusion: The Future is Empathetic

As we move through 2026, emotion detection AI video marketing will transition from a “cutting-edge” tactic to the standard operating procedure for enterprise communication. By combining technical precision in signal analysis with cultural empathy in content delivery, brands can finally achieve the holy grail of marketing: personalization that feels truly human.

For enterprises looking to lead this transformation, the time to build the infrastructure—centered on security, compliance, and localized resonance—is now.

Next Steps for Enterprises:

- Book an Enterprise Demo: Explore how Studio by TrueFan AI can power your sentiment-driven video personalization.

- Download Security Standards: Review our Enterprise Video Security Standards.

- Access ROI Guide: See the data behind Video Personalization ROI Metrics.

Frequently Asked Questions

How does voice emotion API integration work with existing martech?

Integration typically involves connecting your customer data platform (CDP) or CRM to the emotion AI engine via an API gateway. When a user interacts with a video, the event stream is sent to the emotion classifier, which then triggers a call to the voice emotion API. This API returns a synthesized voice variant with the appropriate pitch and tone, which is then dynamically injected into the video stream. Modern systems use SAML/OIDC for secure identity management across these services.

Is emotional range voice cloning compliant in India?

Yes, provided it adheres to the DPDP Act 2023 and IT Rules 2021. This requires explicit, informed consent from the individual whose voice is being cloned (if a real person) and the user whose data is being processed. Furthermore, all cloned voice outputs should be watermarked and include a disclosure that the content is AI-generated.

What is the acceptable latency for real-time sentiment video optimization?

For a truly responsive experience, the target latency for voice modulation and UI overlays should be sub-500 ms. For full video variant generation (rendering a new 30-second video based on a sentiment shift), a 2–5 minute window is acceptable for asynchronous delivery (e.g., sending a follow-up video via WhatsApp).

How does Studio by TrueFan AI handle data security and content moderation?

Studio by TrueFan AI employs a “walled garden” approach to moderation, using real-time profanity and content filters to block restricted categories like political endorsements or hate speech. The platform is ISO 27001 and SOC 2 certified, ensuring that all enterprise data and generated assets are handled with bank-grade security and full auditability.

Can emotion AI detect sarcasm or cultural nuances in Indian languages?

By 2026, multimodal models have become significantly better at detecting sarcasm by analyzing the “prosodic mismatch” between text sentiment and voice tone. However, cultural nuances are best handled by using regional-specific training sets and licensed local avatars who naturally embody those cultural cues.
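The “prosodic mismatch” signal can be sketched as a disagreement score between text sentiment and vocal sentiment. Below is a minimal sketch with these assumptions:

- Both valences are on a [-1, 1] scale.
- A sarcasm flag means positive words delivered with negative prosody.
- The 0.6 threshold is hypothetical.

Real systems would learn this interaction from labeled data rather than apply a fixed rule.

```python
def prosodic_mismatch(text_valence: float, voice_valence: float) -> float:
    """Disagreement between text and voice valence: 0 = agree, 1 = opposite extremes."""
    return abs(text_valence - voice_valence) / 2


def possible_sarcasm(text_valence: float, voice_valence: float, threshold: float = 0.6) -> bool:
    """Flag positive wording delivered with clearly negative prosody."""
    return (
        text_valence > 0
        and voice_valence < 0
        and prosodic_mismatch(text_valence, voice_valence) >= threshold
    )
```

For example, “Great, another delay” might score 0.8 on text valence but −0.6 on voice. That gives a mismatch of about 0.7, which would be flagged.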

What are the primary KPIs for an emotion AI video campaign?

The most critical KPIs are Emotion Lift (the change in user sentiment), Watch-Through Rate (WTR) by tone variant, and Conversion Rate. Additionally, enterprises track “Creative Elasticity” to understand how long an emotional hook remains effective before needing an AI-driven refresh.