AI Video Prompt Engineering 2026: Advanced Prompt Techniques for Cinematic Text-to-Video Results in India


Key Takeaways

- 2026 prompts use a slot-based scaffold covering subject, action, scene, camera, lighting, style, motion, and negatives for cinematic consistency.

- Optimize for each platform—Sora, Runway, and Kling—with camera-first language, anchor frames, and short, stitchable beats.

- Prompt chaining and agentic multi-step reasoning reduce identity drift and scale production like a studio.

- Indian success requires localization and compliance: cultural cues, device-first formats, and MeitY-aligned labeling/consent.

- Enterprise pipelines with libraries, bilingual automation, and analytics deliver repeatable ROI at scale.

AI video prompt engineering in 2026 is the decisive lever for achieving cinematic results from text-to-video models like Sora, Runway, and Kling without blockbuster budgets. Now that generative video has matured from a novelty into a core enterprise asset, the difference between a “good” video and a “world-class” production lies in the precision of the prompt and the robustness of the underlying context.

In 2026, the landscape has shifted. We are no longer just “wordsmithing” simple descriptions; we are orchestrating complex, multi-agent systems. According to Gartner’s 2026 Strategic Predictions, AI software spending is projected to reach nearly $297 billion by 2027, with a significant portion of that growth driven by generative media in emerging markets like India. For Indian creators and agencies, mastering advanced prompt techniques is no longer optional—it is the foundational skill of the digital economy.

This guide explores the evolution of prompt engineering from a fragile art to a systematic discipline, focusing on how Indian enterprises can leverage these tools for localized, high-impact storytelling.

1. The Anatomy of a 2026 Text-to-Video Prompt

The text-to-video prompt guides of 2024 are now obsolete. In 2026, professional-grade prompts follow a rigid, slot-based anatomy that tells the model not just what is in a scene, but how it should be shot and why.

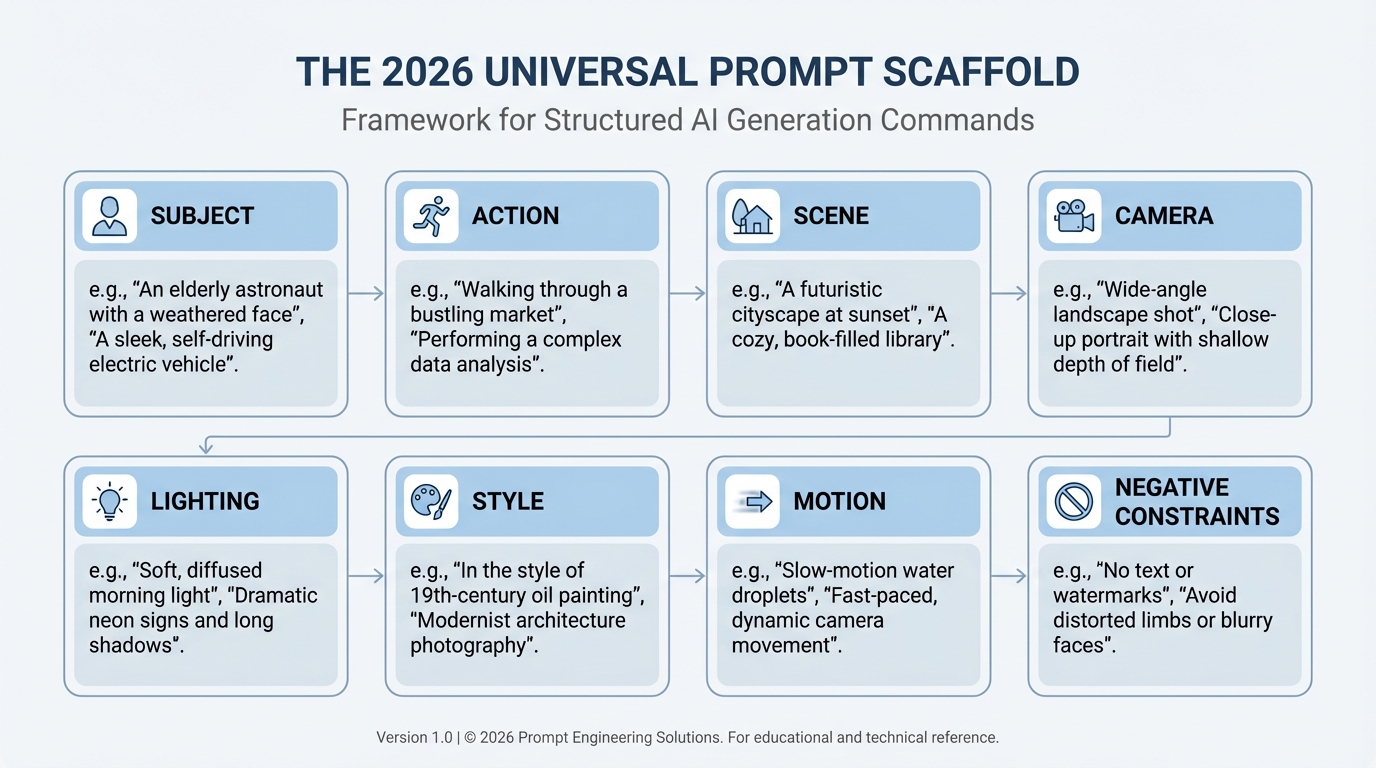

The Universal Prompt Scaffold

To achieve cinematic consistency, your prompts must include these eight critical dimensions:

- Subject: The core entity (e.g., “A 28-year-old Indian entrepreneur in a linen blazer”).

- Action: The specific, kinetic movement (e.g., “Adjusting a VR headset while walking through a neon-lit Bangalore tech park”).

- Scene/Environment: The spatial context (e.g., “Golden hour, reflections on glass buildings, bustling crowd in soft bokeh”).

- Camera Language: Technical specs (e.g., “35mm anamorphic lens, low-angle dolly-in, slight handheld micro-jitter”).

- Lighting: Source and quality (e.g., “Rembrandt lighting, warm 3200K key light, cool blue rim light from digital billboards”).

- Style/LUT: The aesthetic intent (e.g., “Kodak 2383 film stock, teal-and-orange color grade, high contrast”).

- Motion Cues: Speed and physics (e.g., “Slow-motion 60fps, fluid tracking shot, hair fluttering in a light breeze”).

- Negative Constraints: What to exclude (e.g., “No morphing, no extra limbs, no flickering, no cartoonish saturation”).
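The eight slots above map naturally onto a small data structure. The following is a minimal sketch, assuming a hypothetical `ShotPrompt` class and a simple period-separated rendering; neither is a format required by any platform, and the slot values are the examples from the list.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ShotPrompt:
    """One shot, with a field per slot of the eight-part scaffold (hypothetical)."""
    subject: str
    action: str
    scene: str
    camera: str
    lighting: str
    style: str
    motion: str
    negatives: str

    def render(self) -> str:
        # Join the slots in scaffold order; empty slots are skipped.
        parts = [getattr(self, f.name).strip() for f in fields(self)]
        return ". ".join(p for p in parts if p) + "."


shot = ShotPrompt(
    subject="A 28-year-old Indian entrepreneur in a linen blazer",
    action="Adjusting a VR headset while walking through a neon-lit Bangalore tech park",
    scene="Golden hour, reflections on glass buildings, bustling crowd in soft bokeh",
    camera="35mm anamorphic lens, low-angle dolly-in, slight handheld micro-jitter",
    lighting="Rembrandt lighting, warm 3200K key light, cool blue rim light from digital billboards",
    style="Kodak 2383 film stock, teal-and-orange color grade, high contrast",
    motion="Slow-motion 60fps, fluid tracking shot, hair fluttering in a light breeze",
    negatives="No morphing, no extra limbs, no flickering, no cartoonish saturation",
)
print(shot.render())
```

Keeping each slot as a named field makes it harder to forget a dimension and easier to swap one slot while holding the others fixed.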

Token Budgeting and Slot Management

Modern models like Sora 2 and Runway Gen-4 prioritize tokens based on their position. HyScaler’s 2026 insights suggest that “evaluation discipline” is key: start with a concise core and expand using controlled slots. By using a JSON-like structure for repeatable templates, agencies can maintain brand consistency across hundreds of video variants.
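One way to picture a "concise core plus controlled slots" template is sketched below. The JSON layout, the `build_prompt` helper, and the use of a word count as a crude stand-in for a token budget are all assumptions for illustration; real tokenizers and platform limits differ.

```python
import json
from string import Template

# Hypothetical template: "core" is filled per variant, "slots" are shared brand settings.
TEMPLATE_JSON = """
{
  "core": "$subject, $action",
  "slots": {
    "camera": "35mm anamorphic lens, low-angle dolly-in",
    "lighting": "warm 3200K key light",
    "style": "teal-and-orange color grade"
  }
}
"""


def build_prompt(template_json: str, max_words: int = 60, **core_values: str) -> str:
    """Fill the core, then append slots in order while the word budget allows."""
    template = json.loads(template_json)
    prompt = Template(template["core"]).substitute(**core_values)
    for slot_text in template["slots"].values():
        candidate = f"{prompt}, {slot_text}"
        if len(candidate.split()) > max_words:
            break  # budget exhausted: keep the core plus the slots added so far
        prompt = candidate
    return prompt


variants = [
    build_prompt(TEMPLATE_JSON, subject=s, action="unboxing a smartwatch")
    for s in ("A college student in Pune", "A shop owner in Jaipur")
]
for v in variants:
    print(v)
```

Because the slots live in one shared template, every variant inherits the same camera, lighting, and style language, and only the core changes.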

Source: IBM: What is Prompt Engineering? | HyScaler: Mastering AI Communication

2. Platform Landscape: Sora, Runway, and Kling in India

Each major platform in 2026 requires a specialized optimization strategy. Understanding these nuances is essential for applying advanced prompt techniques to video creation.

Sora Prompting Best Practices

OpenAI’s Sora remains the gold standard for temporal coherence. In 2026, Sora 2 emphasizes “camera-first” language.

- Do: Use specific focal lengths (e.g., “85mm portrait”) and motion verbs like “orbit,” “pan,” or “tilt.”

- Don’t: Use contradictory style cues (e.g., “vintage film” and “ultra-modern 8K” in the same prompt).

- Pro Tip: Where the platform exposes a seed setting, leverage it for reproducibility. If a specific seed yields a perfect lighting setup, lock that seed and vary only the subject or action tokens.
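The "don't mix contradictory style cues" rule can be checked before a prompt is ever submitted. This is a minimal sketch, assuming a hand-maintained conflict table; the pairs listed and the `find_style_conflicts` name are hypothetical and not part of any platform's tooling.

```python
# Hypothetical conflict table; extend it with the pairs your team keeps hitting.
CONFLICTING_CUES = [
    ("vintage film", "ultra-modern 8k"),
    ("handheld", "locked-off tripod"),
    ("black and white", "teal-and-orange"),
]


def find_style_conflicts(prompt: str) -> list[tuple[str, str]]:
    """Return every cue pair from the table where both cues appear in the prompt."""
    text = prompt.lower()
    return [(a, b) for a, b in CONFLICTING_CUES if a in text and b in text]


print(find_style_conflicts("85mm portrait, vintage film, ultra-modern 8K, slow orbit"))
```

A plain substring check like this will miss paraphrases, so it works best as a first-pass lint rather than a guarantee.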

Runway Prompt Optimization

Runway Gen-4 has become the favorite for Indian D2C brands due to its “Image-to-Video” anchoring.

- Anchor Strategy: Use a high-quality product shot as the “anchor frame” to ensure the product doesn’t warp during the video.

- Single-Beat Clips: To avoid the “AI drift” where a character’s face changes over 10 seconds, plan for short, 3-5 second “beats” and stitch them in post-production.
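Planning beats is simple arithmetic: divide the target runtime into equal clips that never exceed the longest beat you trust. The sketch below assumes a hypothetical `plan_beats` helper and the 5-second upper bound mentioned above; very short runtimes simply produce a single short beat.

```python
import math


def plan_beats(total_seconds: float, max_beat: float = 5.0) -> list[float]:
    """Split a runtime into equal beats, none longer than max_beat seconds."""
    if total_seconds <= 0 or max_beat <= 0:
        raise ValueError("durations must be positive")
    count = math.ceil(total_seconds / max_beat)
    return [round(total_seconds / count, 2)] * count


print(plan_beats(12))  # three beats of 4.0 seconds each
print(plan_beats(30))  # six beats of 5.0 seconds each
```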

Kling AI Prompt Templates

Kling AI has seen massive adoption in India’s creative hubs like Mumbai and Hyderabad. It excels at high-motion sequences, such as Bollywood-style dance numbers or sports highlights.

- The Scaffold: [Subject] + [Action] + [Camera] + [Lighting] + [Style] + [Motion].

- Example: “Indian cricketer, mid-swing, 24mm wide angle, stadium floodlights, cinematic grain, 120fps slow motion.”

Source: OpenAI: Sora Prompting Guide | Runway: Gen-4 Guide | Segmind: Kling AI Prompts

3. Advanced Techniques: Chaining and Multi-Step Reasoning

The most significant evolution in AI video prompt engineering in 2026 is the shift from single-shot generation to systematic orchestration.

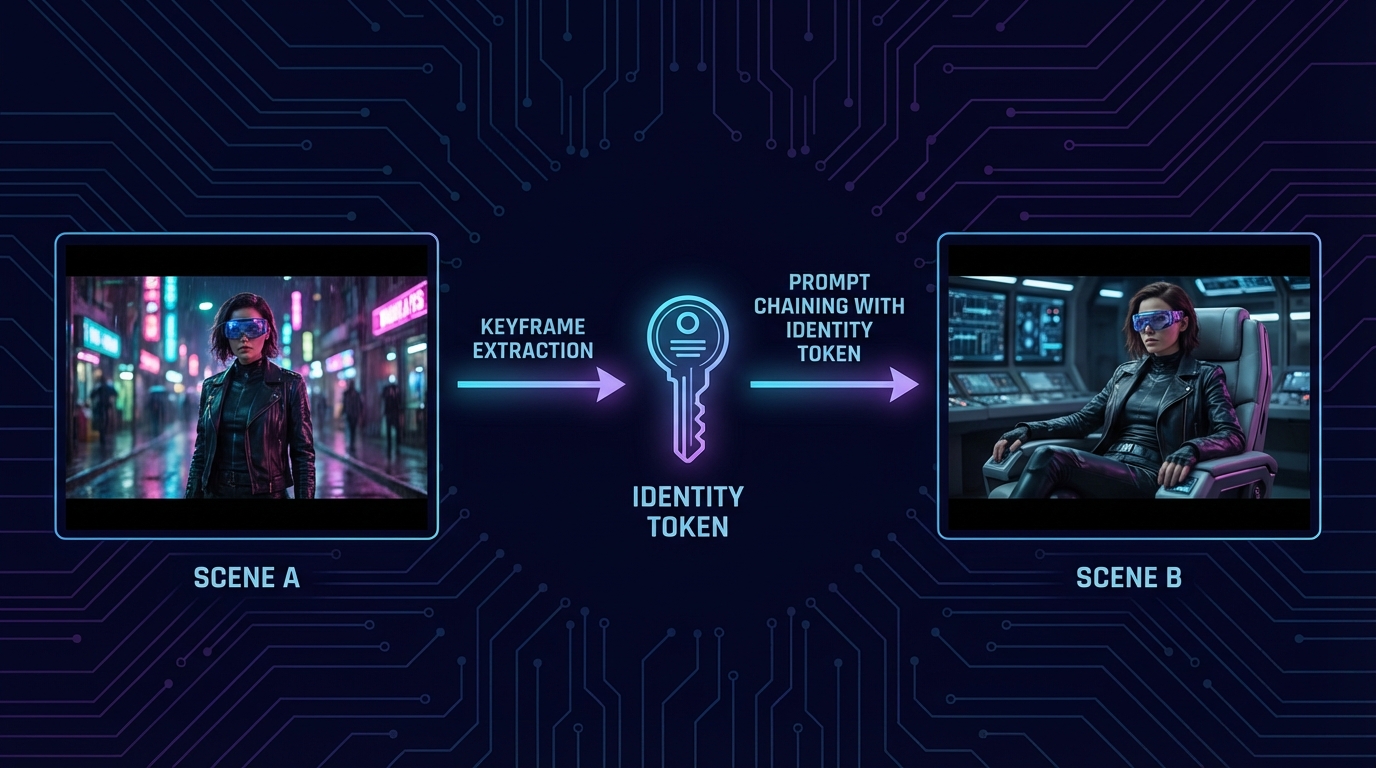

Prompt Chaining for Video Generation

Prompt chaining involves decomposing a narrative into linked shots where each output informs the next. Instead of asking for a “1-minute ad,” you generate a sequence of 4-second shots using consistent “identity tokens.”

- Workflow: Generate Scene A → Export keyframe → Use keyframe as “Image Prompt” for Scene B → Maintain the same “Seed” and “Lighting Tokens.”

- Result: A seamless narrative with minimal character drift.
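The chaining workflow above can be sketched as a loop in which each clip's exported keyframe becomes the reference image for the next shot, while the seed, identity tokens, and lighting tokens stay fixed. Everything here is an assumption for illustration: `Clip`, `chain_shots`, and especially `fake_generate`, which stands in for whatever video API you actually call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Clip:
    prompt: str
    seed: int
    last_frame: str  # path or ID of the exported keyframe


# A generator takes (prompt, seed, reference_frame) and returns a Clip.
Generator = Callable[[str, int, Optional[str]], Clip]


def chain_shots(shots: list[str], identity: str, lighting: str,
                seed: int, generate: Generator) -> list[Clip]:
    clips: list[Clip] = []
    reference: Optional[str] = None  # Scene A has no anchor frame yet
    for action in shots:
        prompt = f"{identity}, {action}, {lighting}"  # same identity and lighting tokens every shot
        clip = generate(prompt, seed, reference)      # same seed every shot
        clips.append(clip)
        reference = clip.last_frame  # exported keyframe feeds the next shot
    return clips


calls: list[Optional[str]] = []  # reference frames the stub received, in order


def fake_generate(prompt: str, seed: int, reference: Optional[str]) -> Clip:
    """Stand-in for a real video API call; records the reference it was given."""
    calls.append(reference)
    return Clip(prompt, seed, last_frame=f"frame_{len(calls)}.png")


clips = chain_shots(
    ["opens a laptop", "presents to the team", "shakes hands with a client"],
    identity="Aryan, 30s, sharp jawline, salt-and-pepper hair",
    lighting="warm 3200K key light",
    seed=42,
    generate=fake_generate,
)
print(calls)
```

Swapping `fake_generate` for a real client is the only change needed to run the same chain against a platform, provided that platform accepts a reference image and a seed.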

Multi-Step Reasoning for Video AI (Agentic Planning)

In 2026, we use Agentic AI to handle the heavy lifting. A reasoning pipeline might involve three specialized agents:

- The Director Agent: Drafts the shot list and camera angles.

- The Continuity Agent: Ensures the character’s wardrobe and the environment’s lighting remain identical across shots.

- The Style Agent: Applies the specific “Indian Festive” or “Corporate Tech” LUT to every generated frame.

This “Superagency” approach, as highlighted by McKinsey (2025), allows a single creator to perform the work of an entire production house. Platforms like Studio by TrueFan AI enable this level of scale by providing a centralized environment where these complex workflows are simplified for the end-user.
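The three-agent pipeline described above can be pictured as three passes over a shared shot list. This is a simplified sketch: in practice each agent would likely wrap a language-model call, whereas here they are plain functions, and the `Shot` fields, camera presets, and example values are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Shot:
    action: str
    camera: str = ""
    wardrobe: str = ""
    lighting: str = ""
    lut: str = ""


def director_agent(brief: list[str]) -> list[Shot]:
    """Draft the shot list; alternates two camera setups as a placeholder."""
    cameras = ["35mm wide, slow dolly-in", "85mm close-up, static"]
    return [Shot(action=a, camera=cameras[i % 2]) for i, a in enumerate(brief)]


def continuity_agent(shots: list[Shot], wardrobe: str, lighting: str) -> list[Shot]:
    """Force identical wardrobe and lighting on every shot."""
    return [replace(s, wardrobe=wardrobe, lighting=lighting) for s in shots]


def style_agent(shots: list[Shot], lut: str) -> list[Shot]:
    """Apply one LUT name to every shot."""
    return [replace(s, lut=lut) for s in shots]


plan = style_agent(
    continuity_agent(
        director_agent(["lights a diya", "greets guests at the door"]),
        wardrobe="maroon silk kurta",
        lighting="warm 3200K key light",
    ),
    lut="Indian Festive",
)
for shot in plan:
    print(shot)
```

Keeping each agent responsible for a disjoint set of fields means a later pass cannot silently undo an earlier one.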

Source: NVIDIA: What is Agentic AI? | IBM: Prompt Chaining

4. The Human Element: Avatars and Emotion Control

For Indian enterprises, the “human” aspect of AI video is paramount. Whether it’s a customer support bot or a virtual influencer, the avatar must feel authentic.

Prompt Engineering for Avatars

To achieve a “human” feel, prompts must control for:

- Gaze and Framing: “Direct-to-camera, 10% headroom, eye-level medium shot.”

- Identity Lock: Using a fixed descriptor string like “Aryan, 30s, sharp jawline, salt-and-pepper hair” across all prompts.

- Prosody: Providing scripts with specific punctuation to guide the AI’s vocal inflection.

Emotion Control Prompts for AI Video

In 2026, we use “Mood Matrices” to encode emotion. Instead of just saying “happy,” we use intensity scales:

- JSON Slot: {"emotion": "anticipation", "intensity": 0.8, "arc": "rising to excitement"}.

- Visual Coupling: Pair high-intensity emotions with “warm 3500K lighting” and “dynamic camera moves” to reinforce the mood.
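A mood slot like the one above can be turned into prompt cues mechanically. The sketch below is illustrative only: the `mood_to_cues` helper and the 0.7 cutoff for "high intensity" are assumptions, and the lighting and camera cues are the ones named in the list.

```python
import json


def mood_to_cues(slot_json: str, threshold: float = 0.7) -> str:
    """Translate a mood slot into prompt cues, adding visual coupling at high intensity."""
    mood = json.loads(slot_json)
    intensity = float(mood["intensity"])  # accepts 0.8 or "0.8"
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0 and 1")
    cues = [mood["emotion"], mood["arc"]]
    if intensity >= threshold:
        cues += ["warm 3500K lighting", "dynamic camera moves"]
    return ", ".join(cues)


slot = '{"emotion": "anticipation", "intensity": 0.8, "arc": "rising to excitement"}'
print(mood_to_cues(slot))
```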

Studio by TrueFan AI’s 175+ language support and AI avatars are specifically designed to handle these nuances, ensuring that a “joyful” message in Hindi carries the same emotional weight as its Tamil or English counterpart.

Source: Google Cloud: Ultimate Prompting Guide for Veo | Analytics India Magazine: Indic Avatars

5. Context Engineering: Localization and Compliance in India

Context engineering for video creation in India is the practice of supplying cultural, linguistic, and regulatory constraints to the AI as structured context. This reduces revision loops and ensures brand safety.

The Indian Localization Matrix

To resonate with the Indian audience, prompts must incorporate:

- Cultural Cues: “Marigold decorations for festive scenes,” “Diyas for Diwali,” or “Monsoon rain with petrichor-inspired color grading.”

- Linguistic Nuance: Moving beyond literal translation to localized idioms in Hindi, Telugu, or Bengali.

- Device Optimization: Since 85% of Indian video consumption is on mobile, prompts should specify “9:16 aspect ratio” and “bold, high-contrast subtitles” for readability on mid-tier smartphones.
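Supplying these cues as structured context, rather than retyping them per prompt, might look like the sketch below. The `LocaleContext` class, its defaults, and the `localize` helper are hypothetical; the cue strings come from the matrix above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LocaleContext:
    language: str
    cultural_cues: tuple[str, ...]
    aspect_ratio: str = "9:16"  # mobile-first default
    subtitles: str = "bold, high-contrast subtitles"


def localize(base_prompt: str, ctx: LocaleContext) -> str:
    """Append cultural, format, and subtitle constraints to a base prompt."""
    cues = ", ".join(ctx.cultural_cues)
    return (f"{base_prompt}, {cues}, {ctx.aspect_ratio} aspect ratio, "
            f"{ctx.subtitles} in {ctx.language}")


DIWALI_HI = LocaleContext("Hindi", ("marigold decorations", "diyas"))
print(localize("Family gathering at home", DIWALI_HI))
```

One context object per language and occasion lets the same base prompt be reused across regional variants without hand-editing.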

Regulatory Compliance (MeitY 2026)

As of early 2026, the Ministry of Electronics and Information Technology (MeitY) has implemented strict rules regarding AI-generated media.

- Labeling: All AI videos must carry a visible “AI-generated” watermark.

- Consent: Using an individual’s likeness without an explicit digital-twin license is strictly prohibited.

- Safety: Real-time moderation is required to prevent the spread of misinformation or deepfakes.
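A pre-publish check built on these three points could be as small as the sketch below. It encodes only the requirements as this section states them, not the text of any regulation, and the `RenderJob` fields and `compliance_issues` name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RenderJob:
    has_ai_label: bool
    uses_real_likeness: bool
    likeness_consent_on_file: bool
    passed_moderation: bool


def compliance_issues(job: RenderJob) -> list[str]:
    """Return a list of blocking issues; an empty list means the job may proceed."""
    issues = []
    if not job.has_ai_label:
        issues.append("missing visible AI-generated label")
    if job.uses_real_likeness and not job.likeness_consent_on_file:
        issues.append("no consent or license on file for likeness")
    if not job.passed_moderation:
        issues.append("moderation check not passed")
    return issues


print(compliance_issues(RenderJob(False, True, False, True)))
```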

Solutions like Studio by TrueFan AI demonstrate ROI through their built-in compliance engines, which automatically apply watermarks and filter content against India-specific safety guidelines, protecting brands from legal risks.

Source: Hindustan Times: Draft Rules to Label AI Media | IndiaAI: Safe & Trusted AI

6. Enterprise Scaling: The Future of AI Video in India

By 2026, the “AI gap” is narrowing. Deloitte’s TMT Predictions 2026 suggest that the winners will be those who move from “one-off prompts” to “enterprise-grade pipelines.”

For an Indian agency, this means:

- Centralized Prompt Libraries: Storing “Gold Standard” prompts for different genres (e.g., “The Perfect IPL Hype Reel Prompt”).

- Bilingual Automation: Generating a single campaign in English and then “one-clicking” it into 10 regional languages with perfect lip-sync.

- ROI-Driven Iteration: Using analytics to see which “Cinematic AI video prompts” result in the highest watch time on Instagram Reels and YouTube Shorts.
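ROI-driven iteration starts with something as simple as averaging watch time per prompt and sorting. The sketch below assumes view data arrives as `(prompt_id, seconds_watched)` pairs; the prompt IDs and numbers are made up for illustration, and real platform analytics exports will look different.

```python
from collections import defaultdict
from statistics import mean


def rank_prompts(views: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Average watch time per prompt ID, highest first."""
    by_prompt: dict[str, list[float]] = defaultdict(list)
    for prompt_id, seconds in views:
        by_prompt[prompt_id].append(seconds)
    averages = [(pid, round(mean(vals), 2)) for pid, vals in by_prompt.items()]
    return sorted(averages, key=lambda item: item[1], reverse=True)


sample_views = [("ipl_hype_v1", 8.0), ("ipl_hype_v2", 12.0), ("ipl_hype_v1", 10.0)]
print(rank_prompts(sample_views))
```

The top-ranked prompts are the natural candidates to promote into the centralized library.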

The shift toward Model Context Protocol (MCP) allows enterprises to pass structured brand kits—logos, color palettes, and product specs—directly into the AI’s reasoning engine, ensuring that every video generated is “on-brand” by default.

Conclusion: Mastering the 2026 Workflow

The era of “typing a sentence and hoping for the best” is over. In 2026, AI video prompt engineering is a technical discipline that blends cinematography, linguistics, and systems design. By adopting a slot-based prompt anatomy, leveraging multi-step reasoning, and respecting the unique cultural context of the Indian market, creators can produce cinematic content that was previously reserved for high-budget studios.

As the market continues to grow—with Kantar’s 2026 Marketing Trends highlighting “Generative Authenticity” as a top priority—the tools you choose will define your success. Whether you are a solo creator or a national agency, the goal remains the same: to tell stories that resonate, using the most advanced technology available.

Book a demo of Studio by TrueFan AI to operationalize these advanced prompt engineering workflows with enterprise-grade governance and localization.

Frequently Asked Questions

Is prompt engineering still a relevant skill in 2026?

Yes, but it has evolved. It is no longer about “guessing” words; it is about “context engineering.” You need to understand camera physics, lighting theory, and multi-agent orchestration to get professional results.

How do I ensure my AI-generated characters look the same in every shot?

Use “Prompt Chaining.” By reusing seeds and providing the AI with “reference frames” from previous generations, you can keep a character’s identity highly consistent from shot to shot.

What are the legal requirements for AI video in India?

According to the latest MeitY guidelines, you must label all AI-generated content as such. Additionally, you must have explicit licenses for any human likeness used. Studio by TrueFan AI simplifies this by providing a library of fully licensed, photorealistic avatars, ensuring your content is always compliant.

Can AI video handle complex Indian languages like Malayalam or Odia?

Modern platforms now support over 175 languages. The key is to use a platform that offers “phoneme-level” lip-sync to ensure the mouth movements match the specific nuances of regional Indian dialects.

What is the ROI of switching to AI video for Indian D2C brands?

Brands typically see a 70–90% reduction in production costs and a 10x increase in content volume. This enables hyper-personalized marketing—delivering unique, localized videos to every customer segment.