Emotion AI Video Marketing India 2026: Real-time Sentiment, Empathetic Avatars, and 2–3x Engagement for Enterprise Campaigns

Estimated reading time: ~11 minutes

Key Takeaways

- Real-time sentiment-responsive video delivers 2–3x engagement by adapting content to user mood across D2C and B2B.

- Empathetic AI avatars and multilingual voice modulation mirror user emotion for human-like interactions at scale.

- Enterprise-scale orchestration connects emotion models with rendering engines, WhatsApp distribution, and CRM triggers.

- A governance-first approach (consent, watermarking, DPDP compliance) safeguards brand and user trust.

- Emotion analytics dashboards tie sentiment shifts to revenue outcomes for continuous optimization.

In the rapidly evolving digital landscape, emotion AI video marketing has emerged in India in 2026 as the defining frontier for brands seeking to bridge the gap between automation and human connection. By deploying sentiment-responsive personalized video, Indian enterprises are no longer just broadcasting messages; they are holding real-time, empathetic dialogues that adapt to a user’s current mood, leading to a 2–3x uplift in engagement metrics across D2C and B2B sectors.

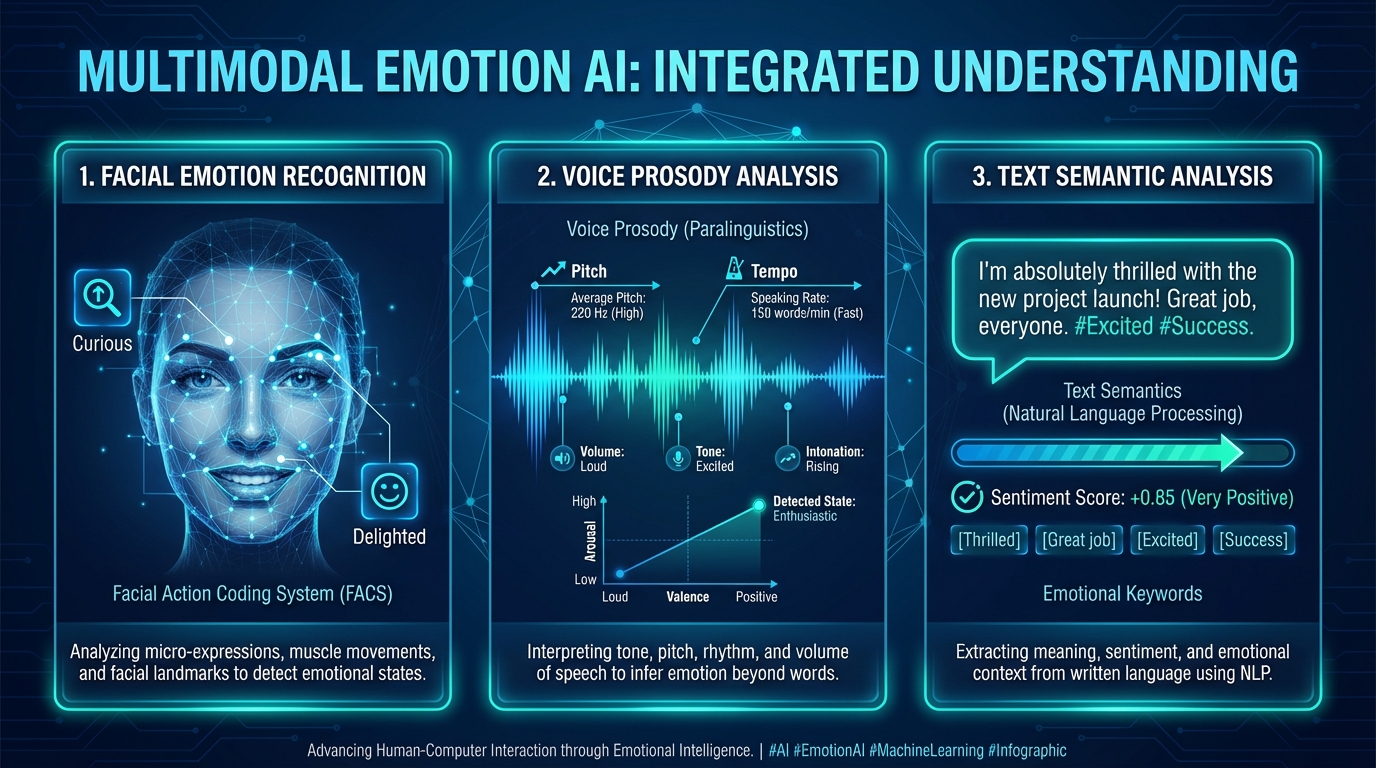

As we navigate 2026, the “Emotion AI Paradox”—the tension between high-speed digital infrastructure and the human need for empathy—is being solved by agentic AI systems. These systems don’t just analyze data; they perceive human emotional states (valence and arousal) from multimodal signals like voice prosody, text semantics, and facial micro-expressions to deliver content that resonates on a visceral level.

1. The Rise of Emotion AI in India's 2026 Digital Landscape

The shift toward emotion AI video marketing in India is driven by a fundamental change in consumer expectations. According to the Kantar Marketing Trends 2026 report, 82% of Indian enterprises are expected to adopt agentic AI for customer empathy by the end of the year. This transition marks the evolution from basic sentiment analysis, which merely classifies language as positive or negative, to granular emotion detection for customer engagement.

Defining the Multimodal Frontier

Emotion AI, or affective computing, involves systems that infer emotional states from a variety of signals. In the context of 2026 marketing, this includes:

- Voice Prosody: Analyzing pitch, energy, and tempo to detect hesitation or excitement.

- Text Semantics: Using Transformer-based models to understand the nuance in chat or comment interactions.

- Facial Action Coding: (With explicit consent) Mapping micro-expressions to emotional labels like “curious,” “frustrated,” or “delighted.”

Why India, Why Now?

India’s digital ecosystem is uniquely positioned for this revolution. With the world’s largest vernacular-first internet user base, generic video assets are no longer sufficient. Data from MarketYFY suggests that the Emotion AI market in India is projected to hit $1.2B by 2026, fueled by the need for culturally tuned, empathetic creatives. Platforms like Studio by TrueFan AI enable enterprises to bridge this gap by offering high-fidelity, licensed avatars that can be deployed across 175+ languages, ensuring that the emotional resonance is never lost in translation.

2. Empathetic Avatars and Multilingual Voice Modulation

The core of any sentiment-responsive personalized video campaign lies in the avatar’s ability to mirror the user’s emotional state. In 2026, we have moved past “uncanny valley” CGI to emotionally intelligent enterprise AI avatars built on real human digital twins.

AI Voice Emotion Control

Voice emotion control is a critical component of these avatars. Traditional text-to-speech (TTS) often sounds robotic, but modern stacks integrate emotional TTS, such as YourVoic's offering in India, to provide programmatic control over prosody. This allows a brand to modulate:

- Pitch Contour: Rising intonation for excitement in festive campaigns.

- Speaking Rate: Slower, calmer pacing for service de-escalation videos.

- Energy Levels: High energy for flash sales; soft, reassuring tones for high-value B2B consultations.
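
The three levers above map naturally onto the W3C SSML `<prosody>` element (`pitch`, `rate`, `volume`). The sketch below assumes the TTS engine accepts standard SSML; vendor support for individual attributes varies, and the preset names and values are hypothetical.

```python
from dataclasses import dataclass
from xml.sax.saxutils import escape


@dataclass(frozen=True)
class ProsodyPreset:
    """A tone preset expressed as W3C SSML <prosody> attribute values."""
    pitch: str   # relative pitch change, e.g. "+15%"
    rate: str    # speaking rate as a percentage of normal, e.g. "85%"
    volume: str  # SSML volume keyword: x-soft, soft, medium, loud, x-loud


# Illustrative presets only; real values must be tuned per voice and language.
PRESETS = {
    "festive_excitement": ProsodyPreset(pitch="+15%", rate="110%", volume="loud"),
    "service_deescalation": ProsodyPreset(pitch="-5%", rate="85%", volume="soft"),
    "b2b_reassurance": ProsodyPreset(pitch="-2%", rate="95%", volume="medium"),
}


def to_ssml(text: str, preset_name: str, lang: str = "en-IN") -> str:
    """Wrap escaped text in an SSML <speak>/<prosody> envelope for the chosen preset."""
    p = PRESETS[preset_name]
    return (
        f'<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="{lang}">'
        f'<prosody pitch="{p.pitch}" rate="{p.rate}" volume="{p.volume}">'
        f"{escape(text)}"
        "</prosody></speak>"
    )


print(to_ssml("Happy Diwali! Your festive offer is ready.", "festive_excitement"))
```

Keeping presets as data rather than hard-coding them into scripts lets the same copy be re-voiced per mood band or language simply by swapping the preset name.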

Emotional Range and Voice Cloning

Voice cloning with a wide emotional range lets brands personalize delivery while keeping a consistent speaker identity. For instance, a D2C brand might use a “festive warmth” preset for Diwali greetings in Marathi, while a B2B SaaS firm might opt for a “confident authority” tone in a Hinglish ROI explainer. Studio by TrueFan AI's 175+ language support and AI avatars provide the infrastructure to execute these nuanced campaigns at scale, so that every interaction feels bespoke rather than generated.

With emotion-aware avatar videos, brands can detect signals of confusion or hesitation in-session. If a user lingers on a pricing page, the AI avatar can switch from a “sales” tone to an “empathetic guide” tone, offering a simplified breakdown of the ROI.
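
The pricing-page example can be expressed as a small rule. This is a sketch only: the 45-second dwell threshold, the 0.6 hesitation cut-off, and the `hesitation_score` input are hypothetical stand-ins for whatever signals your session tracker and emotion model actually emit.

```python
# Hypothetical thresholds for illustration; calibrate them against your own session data.
PRICING_DWELL_THRESHOLD_S = 45.0
HESITATION_SCORE_THRESHOLD = 0.6


def choose_avatar_tone(page: str, dwell_seconds: float, hesitation_score: float,
                       current_tone: str = "sales") -> str:
    """Switch to an 'empathetic_guide' tone when a user lingers on pricing or shows hesitation there.

    hesitation_score is assumed to come from an upstream emotion model, scaled to [0, 1].
    """
    on_pricing = page == "pricing"
    lingering = dwell_seconds >= PRICING_DWELL_THRESHOLD_S
    hesitant = hesitation_score >= HESITATION_SCORE_THRESHOLD
    if on_pricing and (lingering or hesitant):
        return "empathetic_guide"
    return current_tone


print(choose_avatar_tone("pricing", dwell_seconds=62.0, hesitation_score=0.3))  # empathetic_guide
```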

3. Architecting Mood-Based Video Personalization at Scale

Scaling sentiment-driven video personalization requires a robust enterprise architecture that connects the “brain” (the emotion AI models) to the “voice” (the video rendering engine).

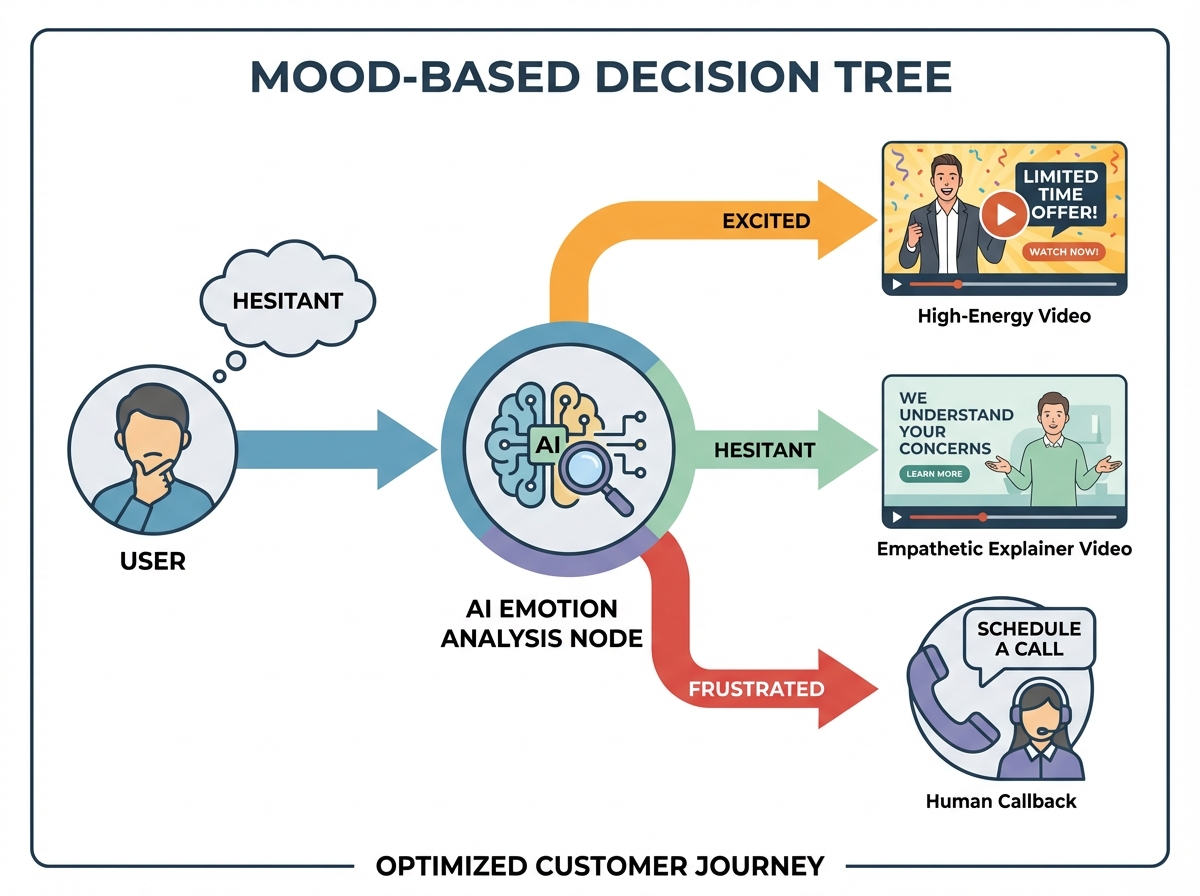

The Decision Tree: Mood-Based Adaptation

Mood-based video adaptation relies on dynamically selecting video segments and CTAs based on the user’s current “mood band.” Consider this logic:

- High Arousal + Positive Valence (Excited): The system triggers a short, punchy video with a high-intensity CTA and a celebratory tone.

- Low Arousal + Negative Valence (Hesitant): The system swaps the current scene for an empathetic explainer, adds a “money-back guarantee” overlay, and softens the voice tone.

- High Arousal + Negative Valence (Frustrated): The AI immediately routes the user to a “human callback” option, using a patient, de-escalating avatar tone.
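
The three rules read as a lookup over the valence/arousal plane. A minimal sketch, assuming an upstream model outputs valence in [-1, 1] and arousal in [0, 1]; the 0.5 arousal split, the scene and CTA names, and the fourth “calm” band (low arousal, positive valence, which the rules above leave open) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Branch:
    scene: str
    cta: str
    voice_tone: str
    overlay: Optional[str] = None
    route_to_human: bool = False


def mood_band(valence: float, arousal: float, arousal_split: float = 0.5) -> str:
    """Map valence in [-1, 1] and arousal in [0, 1] to a quadrant-style mood band."""
    high_arousal = arousal >= arousal_split
    if valence >= 0:
        return "excited" if high_arousal else "calm"
    return "frustrated" if high_arousal else "hesitant"


# Placeholder scene/CTA names; "calm" is an assumed default for the uncovered quadrant.
BRANCHES = {
    "excited": Branch("short_punchy_cut", "Buy now", "celebratory"),
    "hesitant": Branch("empathetic_explainer", "See how it works", "soft",
                       overlay="money_back_guarantee"),
    "frustrated": Branch("deescalation_message", "Request a callback", "patient",
                         route_to_human=True),
    "calm": Branch("default_story", "Learn more", "neutral"),
}


def select_branch(valence: float, arousal: float) -> Branch:
    """Pick the video branch for the user's current mood band."""
    return BRANCHES[mood_band(valence, arousal)]


print(select_branch(-0.6, 0.2))
```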

Enterprise Scale and Latency

Latency is the primary challenge for real-time sentiment video optimization. Indian enterprises are leveraging edge caching and TTS streaming so that “branch swaps” (changing the video mid-stream) occur in under 2 seconds. This is supported by India’s growing digital infrastructure; DE-CIX India, for example, highlights the country's readiness for real-time AI processing at the edge.
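
One way to honour the 2-second target is to decide, per swap, which kind of change can land in time. The sketch below mirrors the “swap pre-rendered segments or adjust the audio track” approach described in the FAQ; segment IDs, the edge cache modelled as a plain set, and the latency estimates are all hypothetical.

```python
from dataclasses import dataclass

SWAP_BUDGET_S = 2.0  # the "under 2 seconds" target for a mid-stream branch swap


@dataclass(frozen=True)
class SwapPlan:
    action: str           # "cached_segment", "audio_only", or "keep_current"
    est_latency_s: float  # estimated time until the new branch reaches the viewer


def plan_branch_swap(segment_id: str, edge_cache: set[str],
                     fetch_rtt_s: float, tts_first_chunk_s: float) -> SwapPlan:
    """Pick the cheapest swap that fits the latency budget.

    Latency inputs are estimates supplied by the caller (e.g. recent p95 measurements).
    """
    # 1. A pre-rendered segment already in the edge cache is the fastest option.
    if segment_id in edge_cache and fetch_rtt_s <= SWAP_BUDGET_S:
        return SwapPlan("cached_segment", fetch_rtt_s)
    # 2. Otherwise keep the current visuals and re-voice them via streamed TTS.
    if tts_first_chunk_s <= SWAP_BUDGET_S:
        return SwapPlan("audio_only", tts_first_chunk_s)
    # 3. If neither fits the budget, do not interrupt playback.
    return SwapPlan("keep_current", 0.0)


print(plan_branch_swap("hesitant_explainer_hi", {"hesitant_explainer_hi"}, 0.4, 1.2))
```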

Integration with WhatsApp and CRM

The distribution of these videos often happens via the WhatsApp Business API. By integrating webhooks from a CRM like Salesforce or HubSpot, a brand can trigger a personalized video the moment a cart is abandoned. The video isn’t just a reminder; it’s a sentiment-tuned message that addresses the likely reason for abandonment based on the user’s last three site interactions.
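
The cart-abandonment flow can be sketched as a webhook handler that infers a likely reason, picks a tone, and builds the outbound message. The event names, reason rules, and render URL are hypothetical. The message body follows the general shape of a WhatsApp Cloud API video message, but verify it against Meta's current documentation; business-initiated messages sent outside the 24-hour customer-service window must use an approved template.

```python
from collections import Counter

# Hypothetical rules: dominant recent behaviour -> (likely abandonment reason, avatar tone).
REASON_RULES = {
    "compare_prices": ("price_sensitivity", "helpful_consultant"),
    "view_shipping": ("delivery_concerns", "reassuring"),
    "view_returns": ("fit_or_quality_doubts", "reassuring"),
}


def infer_reason(events: list[str]) -> tuple[str, str]:
    """Guess why a cart was abandoned from the user's last three site interactions."""
    for event, _count in Counter(events[-3:]).most_common():
        if event in REASON_RULES:
            return REASON_RULES[event]
    return ("unknown", "friendly_reminder")


def build_video_message(to: str, video_url: str, caption: str) -> dict:
    """Request body shaped like a WhatsApp Cloud API video message."""
    return {
        "messaging_product": "whatsapp",
        "to": to,
        "type": "video",
        "video": {"link": video_url, "caption": caption},
    }


def handle_cart_abandoned(webhook: dict) -> dict:
    """Turn a CRM 'cart abandoned' webhook into an outbound WhatsApp video payload."""
    reason, tone = infer_reason(webhook["last_events"])
    # Hypothetical render URL: the video service picks the script variant from reason and tone.
    video_url = (f"https://video.example.com/render/{webhook['cart_id']}"
                 f"?reason={reason}&tone={tone}")
    return build_video_message(webhook["phone"], video_url, "We saved your cart for you")


payload = handle_cart_abandoned({
    "cart_id": "c-1042",
    "phone": "919800000000",
    "last_events": ["view_home", "compare_prices", "view_cart"],
})
print(payload["video"]["link"])
# https://video.example.com/render/c-1042?reason=price_sensitivity&tone=helpful_consultant
```

The handler only builds the payload; sending it, retries, and consent checks are left out of the sketch.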

4. Sector-Specific Playbooks: D2C and B2B Enterprise Strategies

Empathy-driven AI marketing videos differ significantly between D2C and B2B in tone and logic, yet both rely on the same underlying sentiment-responsive technology.

D2C Playbook: The Empathy-First Funnel

In the D2C space, emotion AI is used to humanize the digital storefront.

- Cart Recovery: Automate cart-recovery videos, for example from Shopify abandoned-cart events. Instead of a generic “You forgot something” email, a user receives a WhatsApp video featuring a licensed avatar like Gunika or Aryan. If the user’s previous site behavior showed they were comparing prices, the avatar adopts a “helpful consultant” tone, highlighting the 30-day return policy.

- Post-Purchase NPS: If a customer leaves a neutral review, a sentiment-analysis trigger can send a personalized apology video with a modulated voice. The AI detects the “ambivalence” in the text and responds with a calm, resolution-oriented tone, offering a loyalty discount to win back trust.
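
Treating a “neutral review” as a passive NPS score (7 or 8 on the standard 0–10 scale) gives a concrete trigger. In this sketch, `review_sentiment` is assumed to come from a text-sentiment model, and the template and offer names are hypothetical.

```python
from typing import Optional


def nps_category(score: int) -> str:
    """Standard NPS buckets: 0-6 detractor, 7-8 passive, 9-10 promoter."""
    if not 0 <= score <= 10:
        raise ValueError("NPS score must be between 0 and 10")
    if score <= 6:
        return "detractor"
    return "passive" if score <= 8 else "promoter"


def post_purchase_trigger(score: int, review_sentiment: str) -> Optional[dict]:
    """Queue a calm apology video for neutral/ambivalent feedback; other cohorts use separate flows."""
    if nps_category(score) == "passive" or review_sentiment == "mixed":
        return {"template": "apology_resolution", "voice_tone": "calm", "offer": "loyalty_discount"}
    return None


print(post_purchase_trigger(7, "neutral"))
```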

B2B Playbook: High-Stakes ABM

- 1:1 Outreach: An AI avatar representing a company spokesperson can deliver a personalized ROI pitch. If the recipient watches the video but drops off during the “technical specs” section, the next follow-up video automatically switches to a “simplified overview” tone with a case study overlay.

- Webinar No-Shows: Instead of a “Sorry we missed you” text, send an empathetic summary video. The avatar’s tone is tuned to be respectful of the executive’s time, offering a 2-minute “highlight reel” based on the executive’s specific industry interests stored in the CRM.
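
The 1:1 outreach rule depends on knowing which section the viewer abandoned. A minimal sketch, assuming the player reports a drop-off timestamp and the video has known chapter boundaries; the section names, timings, and the 95% “finished” cut-off are hypothetical.

```python
from bisect import bisect_right
from typing import Optional

# Hypothetical chapter start times (seconds) for a 1:1 ROI pitch video.
SECTIONS = [(0.0, "intro"), (20.0, "roi_summary"), (55.0, "technical_specs"), (110.0, "next_steps")]
DEFAULT_FOLLOW_UP = {"variant": "standard_next_step", "overlay": None}
FOLLOW_UP_BY_DROP_SECTION = {
    "technical_specs": {"variant": "simplified_overview", "overlay": "case_study"},
}


def section_at(t: float) -> str:
    """Return the name of the section playing at time t."""
    starts = [start for start, _ in SECTIONS]
    return SECTIONS[max(bisect_right(starts, t) - 1, 0)][1]


def next_follow_up(drop_off_s: Optional[float], video_len_s: float) -> dict:
    """Choose the next video variant from where the viewer stopped (None = no drop-off recorded)."""
    if drop_off_s is None or drop_off_s >= 0.95 * video_len_s:  # treat >= 95% as finished
        return dict(DEFAULT_FOLLOW_UP)
    return dict(FOLLOW_UP_BY_DROP_SECTION.get(section_at(drop_off_s), DEFAULT_FOLLOW_UP))


print(next_follow_up(70.0, 140.0))
```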

5. Data Intelligence: The Customer Emotion Video Analytics Dashboard

You cannot manage what you cannot measure. The customer emotion video analytics dashboard is the command center for 2026 marketing teams. It moves beyond traditional metrics like “views” to “emotional resonance.”

Core Metrics for 2026

- Emotion Recognition Confidence: The model's confidence score for its mood classification (e.g., “curious”); it reflects how likely the label is to be correct only if the model is well calibrated.

- Emotion Lift: The delta in a user’s valence/arousal from the start of the video to the end. A successful campaign should move a “hesitant” user to “confident.”

- Watch-Through Rate (WTR) by Mood Band: Identifying which emotional cohorts are most engaged with specific script variants.

- Revenue per View (RPV) by Sentiment: Measuring the direct financial impact of mood-responsive branches.
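
The four metrics reduce to simple per-cohort aggregates. A sketch under stated assumptions: each view record carries a mood band, a classifier confidence, start and end valence scores, a watch fraction, and attributed revenue (all hypothetical field names); emotion lift is computed on valence only, and a view counts toward WTR when at least 90% of the video was watched.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ViewRecord:
    mood_band: str         # band assigned when the view started
    confidence: float      # classifier confidence for that band, 0..1
    valence_start: float   # valence in [-1, 1] at the start of the video
    valence_end: float     # valence in [-1, 1] at the end
    watch_fraction: float  # share of the video watched, 0..1
    revenue: float         # revenue attributed to this view (0 if none)


def dashboard_metrics(views: list[ViewRecord], completion_threshold: float = 0.9) -> dict:
    """Aggregate confidence, emotion lift, WTR and RPV per mood band."""
    by_band = defaultdict(list)
    for v in views:
        by_band[v.mood_band].append(v)
    metrics = {}
    for band, vs in by_band.items():
        n = len(vs)
        metrics[band] = {
            "views": n,
            "mean_confidence": sum(v.confidence for v in vs) / n,
            "emotion_lift": sum(v.valence_end - v.valence_start for v in vs) / n,
            "wtr": sum(v.watch_fraction >= completion_threshold for v in vs) / n,
            "rpv": sum(v.revenue for v in vs) / n,
        }
    return metrics


views = [
    ViewRecord("hesitant", 0.8, -0.4, 0.2, 0.95, 500.0),
    ViewRecord("hesitant", 0.6, -0.2, 0.0, 0.50, 0.0),
]
print(dashboard_metrics(views))
```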

Real-Time Optimization

Solutions like Studio by TrueFan AI demonstrate ROI through their ability to feed this data back into the generation loop. If the dashboard shows that a “joyful” tone is underperforming in the South Indian market compared to a “nostalgic” tone, the system can autonomously promote the nostalgic branch for that specific cohort. This level of real-time sentiment video optimization ensures that marketing spend is always directed toward the most emotionally resonant creative.
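
Autonomously promoting a branch needs a guard against reacting to noise. One common choice, swapped in here for illustration rather than taken from any specific platform, is to rank branches by the lower bound of the Wilson score interval for their conversion rates. Branch names, the per-cohort stats, and the 200-view minimum are hypothetical.

```python
from math import sqrt
from typing import Optional


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a conversion rate (z = 1.96 is roughly 95%)."""
    if trials == 0:
        return 0.0
    p = successes / trials
    denom = 1 + z * z / trials
    centre = p + z * z / (2 * trials)
    margin = z * sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
    return (centre - margin) / denom


def promote_branch(stats: dict[str, tuple[int, int]], min_trials: int = 200) -> Optional[str]:
    """Return the branch to promote for one cohort, or None if the evidence is not yet clear.

    stats maps branch name -> (conversions, views).
    """
    eligible = {b: s for b, s in stats.items() if s[1] >= min_trials}
    if len(eligible) < 2:
        return None
    ranked = sorted(eligible, key=lambda b: wilson_lower_bound(*eligible[b]), reverse=True)
    best, runner_up = ranked[0], ranked[1]
    conv, views = eligible[runner_up]
    # Promote only if a pessimistic estimate for the leader beats the runner-up's observed rate.
    return best if wilson_lower_bound(*eligible[best]) > conv / views else None


south_india = {"joyful": (30, 1000), "nostalgic": (80, 1000)}
print(promote_branch(south_india))  # nostalgic
```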

According to HubSpot’s State of Marketing Report 2026, nearly 75% of marketers now use AI for media creation, but the top 10% are those who integrate real-time emotional feedback loops into their analytics stack.

6. Governance, Ethics, and the 90-Day Implementation Roadmap

As Indian enterprises scale emotion AI video marketing in 2026, compliance with the Digital Personal Data Protection (DPDP) Act is non-negotiable.

Ethical Guardrails

- Explicit Consent: Users must opt in to any biometric or emotion-tracking features.

- Watermarking: Every AI-generated video must carry a clear watermark to distinguish it from “real” footage, a standard feature in the Studio by TrueFan AI “walled garden” approach.

- Purpose Limitation: Data collected for emotion detection must not be used for unrelated profiling or sold to third parties.
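
Explicit consent and purpose limitation can be enforced in code as a gate that every emotion-detection call passes through. This is a sketch of the idea, not a statement of what the DPDP Act requires; the purpose names and record fields are hypothetical, and a production system would also need audit logging and durable storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    user_id: str
    purposes: set[str] = field(default_factory=set)  # purposes the user explicitly opted into
    withdrawn_at: Optional[datetime] = None

    def withdraw(self) -> None:
        """Record withdrawal; all further processing must stop."""
        self.withdrawn_at = datetime.now(timezone.utc)


def is_permitted(record: ConsentRecord, purpose: str) -> bool:
    """Allow processing only for a purpose the user opted into and has not withdrawn."""
    return record.withdrawn_at is None and purpose in record.purposes


def require_consent(record: ConsentRecord, purpose: str) -> None:
    """Raise before any processing that lacks valid, purpose-specific consent."""
    if not is_permitted(record, purpose):
        raise PermissionError(f"{record.user_id}: no valid consent for purpose '{purpose}'")


user = ConsentRecord("u-17", {"emotion_personalization"})
require_consent(user, "emotion_personalization")   # passes silently
print(is_permitted(user, "third_party_profiling"))  # False
```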

The 90-Day Roadmap to Empathy

- Days 1–15 (Pilot Design): Identify one high-impact journey (e.g., cart recovery). Define your mood taxonomy (e.g., Calm, Excited, Hesitant).

- Days 16–45 (Build & Calibrate): Integrate your CRM with an AI video engine. Calibrate your enterprise AI avatars on brand-specific tone presets.

- Days 46–75 (Bandit Rollout): Deploy A/B tests comparing static video vs. sentiment-responsive personalized video. Use multi-armed bandit strategies to auto-optimize branches.

- Days 76–90 (Scale): Expand to regional languages (Hindi, Tamil, Telugu) and institutionalize the governance framework.
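
Thompson sampling is one widely used multi-armed bandit strategy for the Days 46–75 rollout: it keeps showing the branch that currently looks best while still exploring the others in proportion to its uncertainty. A minimal sketch, assuming binary rewards (click or conversion); the true conversion rates are hypothetical and exist only to drive the simulation.

```python
import random


class ThompsonBandit:
    """Beta-Bernoulli Thompson sampling over video branches (reward = 1 on click/conversion)."""

    def __init__(self, arms, seed=None):
        self.rng = random.Random(seed)
        self.alpha = {a: 1.0 for a in arms}  # 1 + observed successes (uniform Beta(1, 1) prior)
        self.beta = {a: 1.0 for a in arms}   # 1 + observed failures

    def choose(self) -> str:
        """Sample a plausible conversion rate for each arm and play the highest draw."""
        draws = {a: self.rng.betavariate(self.alpha[a], self.beta[a]) for a in self.alpha}
        return max(draws, key=draws.get)

    def update(self, arm: str, converted: bool) -> None:
        """Fold one observed outcome into the arm's Beta posterior."""
        if converted:
            self.alpha[arm] += 1
        else:
            self.beta[arm] += 1


def simulate(true_rates: dict[str, float], rounds: int = 5000, seed: int = 7) -> dict[str, int]:
    """Simulate a rollout with hypothetical true rates; returns how often each arm was shown."""
    env = random.Random(seed + 1)
    bandit = ThompsonBandit(list(true_rates), seed=seed)
    shown = {a: 0 for a in true_rates}
    for _ in range(rounds):
        arm = bandit.choose()
        shown[arm] += 1
        bandit.update(arm, env.random() < true_rates[arm])
    return shown


print(simulate({"static": 0.03, "sentiment_responsive": 0.06}))
```

Unlike a fixed 50/50 A/B split, traffic shifts toward the stronger branch as evidence accumulates; keep a small static-video holdout if you also need a clean uplift estimate for reporting.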

Brand Safety in the Age of AI

Brand safety is maintained by using licensed digital twins of real influencers, like Gunika, Annie, and Aryan, rather than unauthorized deepfakes. This keeps likeness rights and consent in order while the enterprise benefits from the “influencer effect” in its automated campaigns.

7. FAQs and the Future of Sentiment-Responsive Video

What is the difference between sentiment analysis and emotion detection in video?

Sentiment analysis typically focuses on the polarity of text (positive, negative, neutral). Emotion detection is multimodal: it combines voice prosody, text semantics, and (with consent) facial analysis to identify specific states like “frustration,” “curiosity,” or “delight,” allowing for much more granular content adaptation.

How does Emotion AI handle India's diverse regional languages?

Modern platforms use emotional TTS, such as YourVoic's, so that prosody (the rhythm and intonation of speech) is culturally accurate. Whether it’s Hinglish, Marathi, or Bengali, the AI can modulate its tone to match local emotional nuances, making emotion-aware avatar videos highly effective across the subcontinent.

Is real-time video adaptation possible with low internet speeds?

Yes. By using “scene branching” and edge caching, the system doesn’t generate a whole new video on the fly. Instead, it swaps pre-rendered segments or adjusts the audio track (TTS) in real-time. Studio by TrueFan AI's 175+ language support and AI avatars are designed to work within these latency constraints, ensuring a smooth user experience even on 4G networks.

How do I measure the ROI of an “empathetic” video?

ROI is measured through the customer emotion video analytics dashboard. Key indicators include a reduction in “drop-off” rates at points where users previously showed hesitation, an increase in Average Order Value (AOV) for “delighted” cohorts, and a 2–3x improvement in CTR compared to non-responsive video assets.

Are these AI avatars legal under India's DPDP Act?

Yes, provided the platform uses a “consent-first” model. This involves using licensed avatars (digital twins of real people who have given explicit permission) and ensuring that any user data used for personalization is encrypted and handled according to the DPDP Act's requirements, including its rules on cross-border data transfer.

Can Emotion AI detect sarcasm or cultural nuances in Indian speech?

2026 models handle “code-mixing” (e.g., mixing Hindi and English) far better than earlier generations. By analyzing both the text semantics and the voice prosody, the AI can distinguish between a frustrated “Great!” and a genuinely happy “Great!” with over 90% accuracy.

What is the first step for an enterprise to start with Emotion AI?

The first step is to audit your existing customer journey data to identify where “emotional friction” occurs. Once identified, you can deploy a pilot using sentiment-responsive personalized video to address those specific friction points, such as high abandonment during a complex sign-up process.

Final Strategic Takeaway: As we move through 2026, the brands that win will not be those with the loudest message, but those with the most empathetic one. By integrating emotion AI video marketing into your enterprise stack, you are investing in a future where every digital interaction feels as personal and understood as a face-to-face conversation.

Frequently Asked Questions

What is Emotion AI video marketing?

Emotion AI video marketing uses multimodal signals (voice, text, facial cues with consent) to infer user mood and dynamically adapt video scripts, voice, and CTAs in real time for higher engagement.

How do empathetic avatars boost engagement?

Empathetic, licensed digital-twin avatars mirror user sentiment with calibrated prosody and tone, making interactions feel human and driving 2–3x uplift in click-through and conversions.

Can this scale across regional languages?

Yes. Platforms like Studio by TrueFan AI support 175+ languages with emotion-aware TTS and avatars, preserving cultural nuance across India’s vernacular markets.

How is ROI measured for Emotion AI videos?

Use an emotion analytics dashboard tracking emotion recognition confidence, emotion lift, WTR by mood band, and revenue per view to connect sentiment shifts to business outcomes.

Is it DPDP compliant and privacy-safe?

A consent-first model with watermarking, purpose limitation, and data encryption ensures DPDP compliance and brand safety while using emotion detection responsibly.