Emotion AI Video Marketing India 2026: How Enterprise Brands Achieve 2–3x Engagement with Sentiment-Responsive AI Avatars

Estimated reading time: ~12 minutes

Key Takeaways

- Sentiment-responsive avatars and voice control now drive 2–3x engagement for Indian enterprises.

- Multimodal affective computing fuses text, voice prosody, and visual cues with real-time orchestration to adapt tone and CTAs.

- Scalable personalization uses an emotional variant taxonomy for D2C and B2B, lifting CTR by 20–40%.

- DPDP-compliant consent, brand safety filters, and watermarking underpin enterprise-grade governance.

- A 90-day playbook enables design, integration, pilot, and scale with analytics-led iteration.

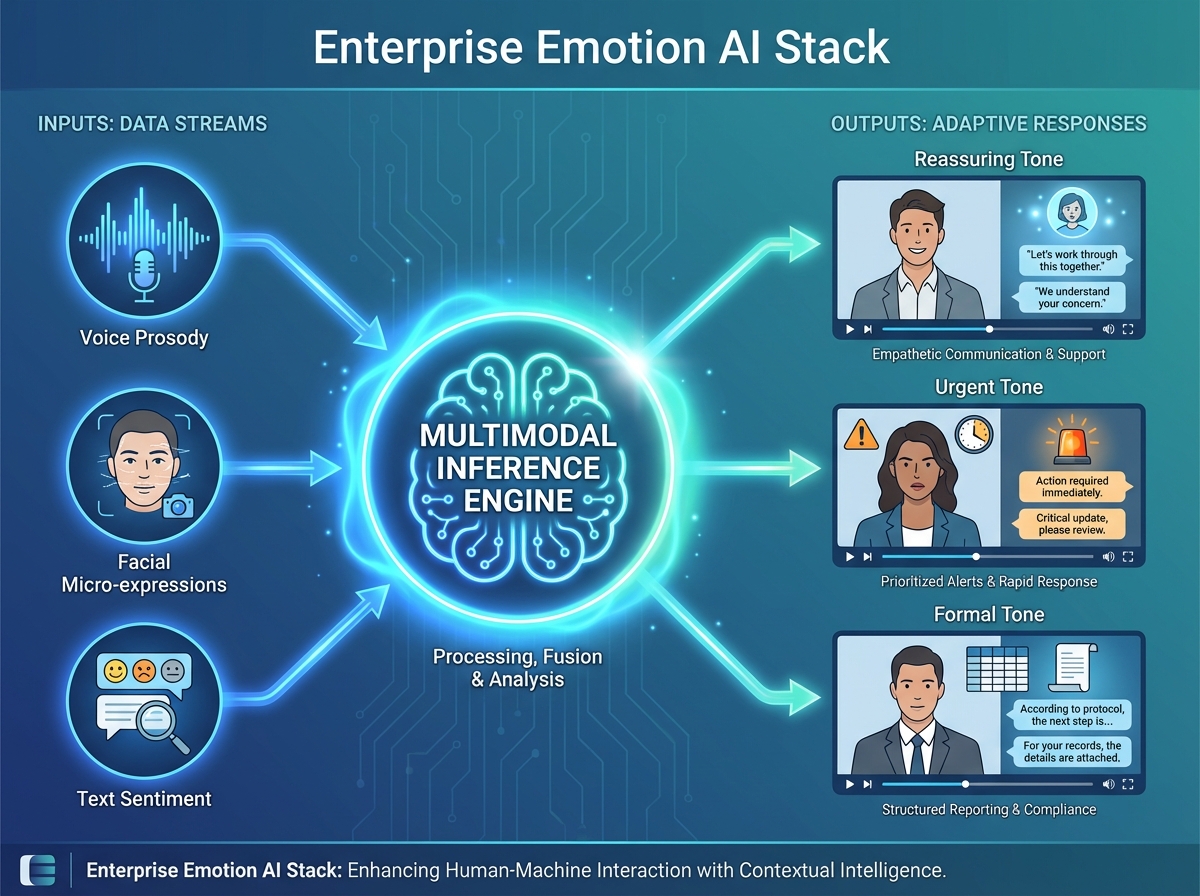

In the rapidly evolving digital landscape, emotion AI video marketing has emerged in India in 2026 as the definitive frontier for enterprise growth, moving beyond static personalization into the realm of real-time empathy. By 2026, the practice of using multimodal affective computing (analyzing voice prosody, text sentiment, and facial micro-expressions) has become the gold standard for Indian D2C and B2B brands looking to drive 2–3x engagement lifts. This shift allows enterprises to automatically adapt video scripts, tones, and CTAs to match a viewer's emotional state, ensuring that every digital interaction feels human, relevant, and trustworthy.

The "why now" is driven by a convergence of technological maturity and shifting consumer expectations. According to Kantar’s 2026 Marketing Trends, empathy and agentic AI are now the primary imperatives for brand growth. In India, where digital marketing has undergone a massive shift toward AI-integrated video content, brands are leveraging these tools to combat creative fatigue and media inflation. Research from Social Beat and MarketYfy projects that, by 2026, over 70% of Indian enterprises will have integrated some form of sentiment-responsive personalized video content into their WhatsApp and email marketing funnels.

1. What "Emotion AI Video Marketing India 2026" Means for Enterprise Growth

To understand the impact of emotion AI video marketing in India in 2026, one must first distinguish between simple sentiment analysis and true emotional intelligence. While sentiment analysis typically focuses on the valence of language (positive, negative, or neutral), emotion AI utilizes multidimensional affect states, such as joy, trust, confusion, or frustration, inferred from multimodal signals. For an enterprise in India, this means a video can detect a user’s hesitation during a checkout process on WhatsApp and instantly switch to a "calm confidence" tone to provide reassurance.

Several key drivers are pushing this adoption in the Indian market:

- Creative Fatigue and ROAS Optimization: With digital ad costs rising, static creative no longer delivers the required Return on Ad Spend (ROAS). Dynamic, mood-aware video content is essential to maintain high engagement levels.

- Vernacular Nuance: India’s linguistic diversity requires more than just translation. Emotion AI allows for the adjustment of prosody (the rhythm and intonation of speech) across 175+ languages, ensuring that a message in Tamil carries the same emotional weight as one in Hindi or Bengali.

- Agentic AI Adaptation: Modern AI agents can now adapt creative elements in-session. If a viewer shows signs of confusion (detected through engagement proxies or direct feedback), the AI can swap the current video segment for a simplified explainer variant.

- The "Calm Confidence" Trend: Data from Spinta Digital indicates a 38% shift in audience preference toward a "calm confidence" tone in social media interactions, moving away from the high-energy, "hype-driven" marketing of previous years.

Platforms like Studio by TrueFan AI enable enterprises to deploy these sophisticated emotional layers at scale, ensuring that brand messaging remains authentic while being technologically advanced.

2. The Enterprise Stack for Sentiment-Responsive Video Campaigns

Building a robust system for sentiment analysis video campaigns requires a sophisticated stack that integrates data, models, and real-time orchestration. This isn't just about generating a video; it's about creating a responsive ecosystem.

Inputs and Data Foundations

The foundation of any emotion AI strategy is the data it consumes. Enterprises in 2026 rely on:

- First-Party Signals: Data from CRMs and CDPs, including past purchase behavior and product interest.

- Behavioral Signals: Real-time metrics such as watch time, pauses, rewinds, and comment sentiment.

- Channel Signals: Events triggered within WhatsApp Business or email clicks that indicate a user's current intent.

Crucially, all data collection must be compliant with India’s Digital Personal Data Protection (DPDP) Act, ensuring that consent is captured at every touchpoint.

Multimodal Models and Inference

To detect emotion accurately, the stack employs multiple AI models:

- Text Analysis: Transformer-based classifiers analyze the sentiment of user comments or chat inputs.

- Voice Prosody: Analysis of pitch, energy, and tempo to detect arousal and valence. For instance, Uniphore’s acquisition of Emotion Research Lab has paved the way for voice and video AI to work in tandem to identify customer frustration or satisfaction.

- Visual Cues: Where explicit consent is provided, facial action units and micro-expressions are analyzed. In cases where camera access is unavailable, the system falls back to engagement proxies.
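The way the three model outputs combine can be illustrated with a minimal late-fusion sketch. Everything here is an assumption for illustration: the weights, the emotion labels, and the `fuse_emotions` helper are hypothetical, and a production stack would likely learn the fusion rather than hard-code it. A modality with no consent or no data is passed as `None` and skipped; substituting engagement proxies for the missing channel is left out for brevity.

```python
from typing import Dict, Optional

# Hypothetical per-modality weights; a real system would tune these on labeled data.
MODALITY_WEIGHTS = {"text": 0.4, "voice": 0.4, "visual": 0.2}

Scores = Dict[str, float]  # emotion label -> score in [0, 1]


def fuse_emotions(signals: Dict[str, Optional[Scores]]) -> Scores:
    """Weighted average of emotion scores over the modalities that are present."""
    available = {m: s for m, s in signals.items() if s}
    total_weight = sum(MODALITY_WEIGHTS[m] for m in available)
    if total_weight == 0:
        return {}
    fused: Scores = {}
    for modality, scores in available.items():
        # Renormalize so the present modalities always sum to weight 1.
        share = MODALITY_WEIGHTS[modality] / total_weight
        for emotion, score in scores.items():
            fused[emotion] = fused.get(emotion, 0.0) + share * score
    return fused


# Camera consent was not given, so the visual channel is None and is skipped.
fused = fuse_emotions({
    "text": {"confusion": 0.6, "trust": 0.4},
    "voice": {"confusion": 0.8, "trust": 0.2},
    "visual": None,
})
top_emotion = max(fused, key=fused.get)
```

With only text and voice available, each contributes half of the fused score, so "confusion" comes out on top in this example.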

Decisioning and Orchestration

The "brain" of the operation is the real-time router. This component maps the detected emotion to a specific creative policy. If the system detects "confusion," it might route the user to a video variant with a slower tempo and a simplified CTA. To maintain a seamless experience, latency targets are strictly managed: ≤300ms for routing to pre-rendered variants and ≤2s for low-friction in-session swaps.
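A rough sketch of such a router is shown below. The policy table, the CTA strings, and the 0.6 confidence threshold are hypothetical placeholders, not values from any specific platform; the low-confidence fallback to a neutral tone is one conservative design choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CreativePolicy:
    tone: str
    tempo: float  # speech tempo multiplier (1.0 = normal speed)
    cta: str


# Hypothetical mapping from detected emotion to creative policy.
POLICIES = {
    "confusion": CreativePolicy("calm confidence", 0.9, "Watch a simple walkthrough"),
    "frustration": CreativePolicy("reassuring", 0.9, "Talk to support"),
    "joy": CreativePolicy("upbeat", 1.0, "Refer a friend"),
}
NEUTRAL = CreativePolicy("neutral-professional", 1.0, "Learn more")
MIN_CONFIDENCE = 0.6  # assumed threshold; tune per campaign


def route(emotion: str, confidence: float) -> CreativePolicy:
    """Pick a creative policy, falling back to neutral when unsure."""
    if confidence < MIN_CONFIDENCE:
        return NEUTRAL
    return POLICIES.get(emotion, NEUTRAL)


policy = route("confusion", confidence=0.82)
```

Because the lookup is a plain dictionary access over pre-rendered variants, the routing step itself adds negligible time against a ≤300ms budget; the latency risk sits in inference and delivery.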

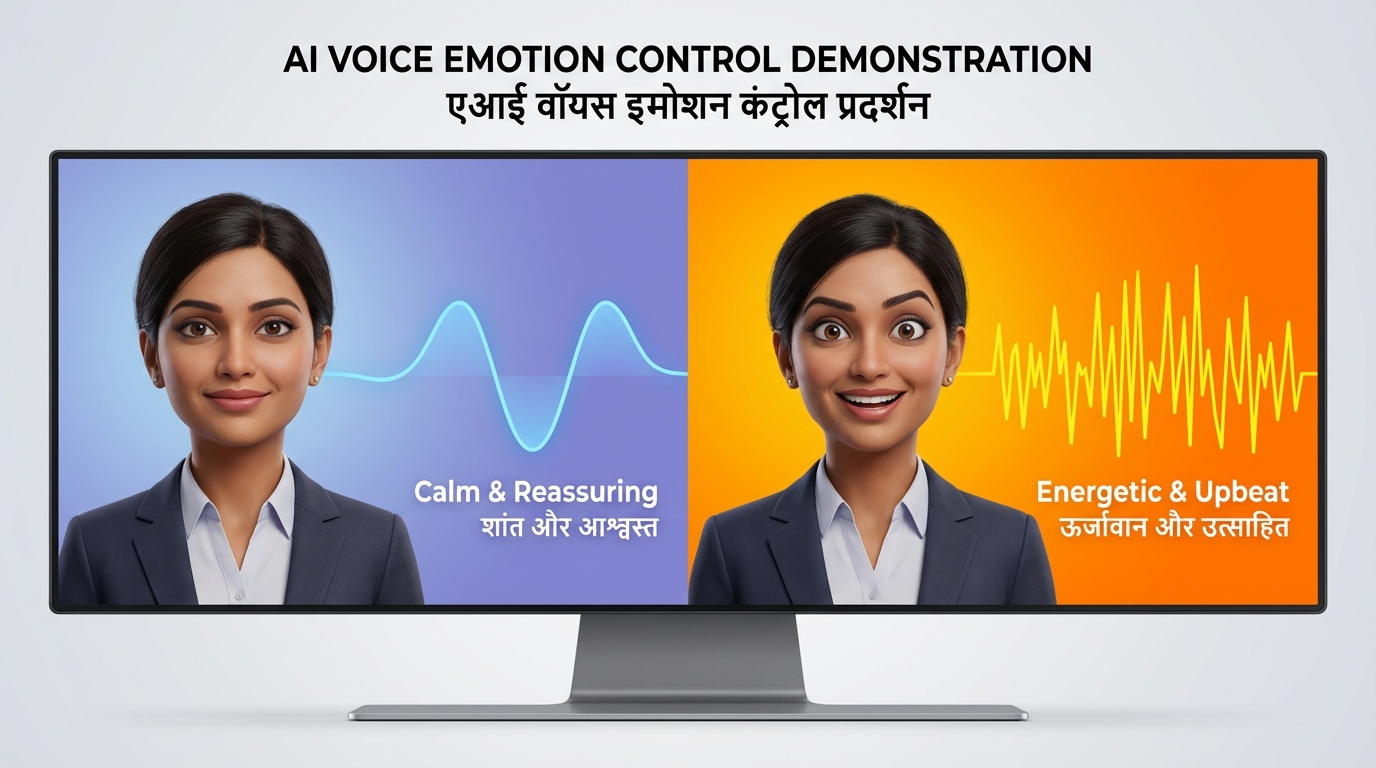

3. AI Voice Emotion Control and India-Ready Avatar Videos

A critical component of emotion AI video marketing in India is the ability to control the "vibe" of the AI avatar. This goes beyond lip-syncing; it involves avatars with fine-grained AI voice emotion control that can modulate their tone to match the viewer's context.

Emotional Range and Voice Cloning

Enterprises can now train AI on a brand spokesperson’s voice and define controllable parameters. For a luxury brand in India, this might mean a "formal, warm" tone in Hindi with a 0.7 energy level and a slightly slower 0.9x tempo. This level of emotional range in voice-cloning personalization ensures that the AI doesn't sound robotic but carries the specific cultural weight required for the Indian market. This includes handling "Hinglish" code-mixing and regional idioms with the correct prosodic emphasis.
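As an illustration, the controllable parameters from the luxury-brand example could be captured in a small validated profile. The `VoiceProfile` class, its field names, and the allowed ranges are assumptions made for this sketch and do not reflect any particular vendor's API.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class VoiceProfile:
    language: str  # language code, e.g. "hi" for Hindi
    tone: str
    energy: float  # assumed scale: 0.0 (flat) to 1.0 (most energetic)
    tempo: float   # multiplier relative to normal speaking speed

    def __post_init__(self):
        # Reject values outside the assumed ranges before they reach rendering.
        if not 0.0 <= self.energy <= 1.0:
            raise ValueError("energy must be within [0, 1]")
        if not 0.5 <= self.tempo <= 2.0:
            raise ValueError("tempo must be within [0.5, 2.0]")


luxury_hindi = VoiceProfile(language="hi", tone="formal, warm", energy=0.7, tempo=0.9)
payload = asdict(luxury_hindi)  # plain dict, ready to serialize as JSON
```

Validating at construction time keeps out-of-range values from silently producing an off-brand voice.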

Emotion-Aware Avatar Videos in India

Authenticity is the currency of 2026. Brands are moving away from generic CGI and toward photorealistic virtual humans. Studio by TrueFan AI, with support for 175+ languages, provides a library of AI avatars based on licensed Indian influencers and actors, ensuring that the digital twins used in campaigns are both relatable and legally compliant.

Key features of these India-ready avatars include:

- Production-Grade Lip-Sync: Ensuring that the visual movements perfectly match the emotional weight of the audio.

- Content Safety Filters: Built-in blocks for political, hateful, or explicit content to protect brand reputation.

- Watermarking and Traceability: Essential for transparency and for the auditability expected under ISO 27001 and SOC 2 programs.

As noted by Meltwater’s 2026 Predictions, the shift toward "narrative intelligence" means that avatars must not only speak but also "feel" the story they are telling.

4. Sentiment-Driven Video Personalization at Scale (D2C and B2B)

Scaling sentiment-driven video personalization requires a systematic approach to variant generation. Instead of creating one video for everyone, brands create a "matrix" of content that can be assembled on the fly.

Variant Taxonomy and Rules

Brands typically define a taxonomy of emotional narratives:

- Reassurance: Calm, confident tone for high-value checkouts or service issues.

- Urgency: Brisk, upbeat tone for limited-time offers.

- Authority: Formal and credible for B2B whitepapers or executive summaries.

- Delight: Warmth and smiles for loyalty rewards and birthdays.
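The "matrix" idea can be sketched as a Cartesian product of the taxonomy with languages and CTAs. The four tones come from the list above; the language subset, CTA names, and `variant_id` format are hypothetical and only serve to show how quickly the number of variants grows.

```python
from itertools import product

TONES = ["reassurance", "urgency", "authority", "delight"]
LANGUAGES = ["hi", "ta", "bn"]    # illustrative subset of supported languages
CTAS = ["buy_now", "book_demo"]   # hypothetical CTA identifiers


def variant_id(tone: str, language: str, cta: str) -> str:
    """Stable key that a router could use to look up a pre-rendered variant."""
    return f"{tone}:{language}:{cta}"


variants = [variant_id(t, l, c) for t, l, c in product(TONES, LANGUAGES, CTAS)]
variant_count = len(variants)  # 4 tones x 3 languages x 2 CTAs = 24
```

Even this small matrix yields 24 variants, which is why pre-rendering is usually limited to the most common combinations.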

D2C Empathy AI Marketing Videos

In the D2C sector, empathy AI marketing videos are revolutionizing cart recovery. If a user abandons a cart after looking at the EMI options, the system can trigger a WhatsApp video. Instead of a generic "You forgot something," the avatar, speaking in the user's preferred regional language, uses a reassuring tone to explain the no-cost EMI process. This "confusion-to-clarity" journey has been shown to result in a 10–25% conversion lift.

B2B Account-Based Marketing (ABM)

For B2B, the tone is adjusted based on the persona. A CFO might receive a video focused on risk clarity with a formal tone, while a Marketing Director receives a version focused on growth urgency with a more upbeat delivery. Solutions like Studio by TrueFan AI demonstrate ROI through these precise, persona-aligned interactions that significantly increase meeting booking rates.

According to Cloud9 Digital's 2026 Report, Indian enterprises using sentiment-responsive video saw a 40% increase in lead quality, as the emotional alignment filtered for higher-intent prospects.

5. Real-Time Optimization and Measurement Dashboards

The "detect → adapt → measure → iterate" cycle is the heartbeat of real-time sentiment video optimization. To manage this, enterprises utilize a customer emotion video analytics dashboard that provides a granular view of how emotional states correlate with business outcomes.

Triggering Logic and Optimization

The optimization framework uses contextual multi-armed bandits to assign probabilities to different tone/CTA variants.

- Negative Cues: If a user drops off within the first 3 seconds or uses "frustrated" keywords in a chat, the system automatically switches to a clarity-first variant.

- Positive Cues: If the system detects high engagement or positive sentiment, it may trigger an immediate upsell or "refer-a-friend" CTA.

- Neutral/Low Arousal: The system might introduce humor or social proof to re-engage the viewer.
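One simple way to realize a contextual bandit is to keep an independent Thompson-sampling bandit per detected emotional context. The sketch below takes that approach with Beta posteriors over click-through; the class name, context labels, and variant names are hypothetical, and richer formulations (for example, models that share information across contexts) are out of scope here.

```python
import random
from collections import defaultdict


class ContextualThompsonBandit:
    """Per-context Thompson sampling over tone/CTA variants (Bernoulli reward)."""

    def __init__(self, variants, seed=None):
        self.variants = list(variants)
        self.rng = random.Random(seed)
        # (context, variant) -> [alpha, beta], starting from a uniform Beta(1, 1) prior.
        self.posterior = defaultdict(lambda: [1, 1])

    def choose(self, context):
        # Sample a plausible CTR for each variant and serve the best sample.
        samples = {
            v: self.rng.betavariate(*self.posterior[(context, v)])
            for v in self.variants
        }
        return max(samples, key=samples.get)

    def update(self, context, variant, clicked):
        # A click increments alpha; a non-click increments beta.
        self.posterior[(context, variant)][0 if clicked else 1] += 1


bandit = ContextualThompsonBandit(["clarity_first", "upsell"], seed=7)
for _ in range(200):
    bandit.update("confused", "clarity_first", clicked=True)
    bandit.update("confused", "upsell", clicked=False)
choice = bandit.choose("confused")
```

After this (deliberately one-sided) feedback, confused viewers are routed to the clarity-first variant almost every time, while a new context with no data would still be explored evenly.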

Measurement Benchmarks for 2026

Success in 2026 is measured by "Emotion-Lift-to-CTR Elasticity." Target benchmarks for high-performing Indian campaigns include:

- 2–3x Engagement: Compared to static, non-responsive video controls.

- 20–40% CTR Improvement: On mood-matched CTAs (e.g., using a "reassuring" CTA for a "confused" user).

- Zero-Tolerance Safety: 100% compliance with brand safety and profanity filters.
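These benchmarks reduce to simple arithmetic against a static control group. The sketch below assumes engagement lift is a ratio of rates and CTR improvement is a relative percentage change; the function names and the sample rates are illustrative, not measured results.

```python
def engagement_lift(variant_rate: float, control_rate: float) -> float:
    """Engagement multiple of the responsive variant over the static control."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return variant_rate / control_rate


def ctr_improvement_pct(variant_ctr: float, control_ctr: float) -> float:
    """Relative CTR change versus control, in percent."""
    if control_ctr <= 0:
        raise ValueError("control_ctr must be positive")
    return (variant_ctr - control_ctr) / control_ctr * 100.0


def meets_benchmarks(lift: float, ctr_gain_pct: float, safety_pass_rate: float) -> bool:
    # Lower bounds of the targets above: 2x engagement, 20% CTR gain, 100% safety.
    return lift >= 2.0 and ctr_gain_pct >= 20.0 and safety_pass_rate == 1.0


# Made-up example rates, for illustration only.
lift = engagement_lift(variant_rate=0.20, control_rate=0.08)
ctr_gain = ctr_improvement_pct(variant_ctr=0.026, control_ctr=0.020)
on_target = meets_benchmarks(lift, ctr_gain, safety_pass_rate=1.0)
```

In this example the variant shows roughly a 2.5x engagement lift and a 30% CTR improvement, so it clears all three thresholds.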

The dashboard doesn't just show views; it shows the "sentiment trajectory" of a customer journey. Did the customer start "confused" and end "satisfied"? This trajectory is a leading indicator of customer lifetime value (LTV).
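A sentiment trajectory can be summarized by counting how sessions start and end. The sketch below uses made-up session data and hypothetical state labels; it assumes each session is already an ordered list of detected states.

```python
from collections import Counter

# Made-up sessions: ordered emotional states detected during each video interaction.
sessions = {
    "s1": ["confused", "neutral", "satisfied"],
    "s2": ["confused", "confused"],
    "s3": ["confused", "satisfied"],
    "s4": ["neutral", "satisfied"],
}


def trajectory_counts(sessions):
    """Count (first state, last state) pairs across non-empty sessions."""
    return Counter(
        (states[0], states[-1]) for states in sessions.values() if states
    )


counts = trajectory_counts(sessions)
confused_starts = sum(n for (start, _), n in counts.items() if start == "confused")
recovery_rate = counts[("confused", "satisfied")] / confused_starts
```

Here two of the three sessions that began "confused" ended "satisfied", which is the kind of ratio a dashboard could track alongside CTR and conversion.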

6. Governance, Compliance (DPDP Act), and the 90-Day Playbook

As Indian enterprises scale their use of emotion AI video marketing, governance is no longer optional; it is a core competitive advantage. Navigating the DPDP Act requires a "privacy-by-design" approach.

DPDP Act-Aligned Practices

- Granular Consent: Users must provide explicit opt-ins for emotion and sentiment processing.

- Purpose Limitation: Data collected for sentiment analysis cannot be used for unrelated profiling without fresh consent.

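- Verifiable Parental Consent: Under the DPDP Act, processing a child's personal data requires verifiable consent from a parent or guardian, so any emotion AI that may reach minors needs stringent verification (see DLA Piper’s 2026 guidance).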
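Granular consent and purpose limitation can be enforced in code by filtering signals before they reach any model. This is a minimal sketch, not legal guidance: the `ConsentRecord` structure, the purpose names, and the signal-to-purpose mapping are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Set


@dataclass
class ConsentRecord:
    user_id: str
    granted_purposes: Set[str] = field(default_factory=set)


# Hypothetical mapping of each input signal to the purpose it needs consent for.
SIGNAL_PURPOSE = {
    "chat_text": "sentiment_personalization",
    "voice_prosody": "voice_emotion_analysis",
    "facial_cues": "facial_emotion_analysis",
}


def permitted_signals(consent: ConsentRecord, signals: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only signals whose purpose the user explicitly opted into.

    Signals with no known purpose are dropped (default deny).
    """
    return {
        name: value
        for name, value in signals.items()
        if SIGNAL_PURPOSE.get(name) in consent.granted_purposes
    }


consent = ConsentRecord("user-42", {"sentiment_personalization"})
usable = permitted_signals(consent, {
    "chat_text": "Is the EMI really no-cost?",
    "facial_cues": [0.1, 0.7],
    "device_motion": [0.0, 0.2],
})
```

Only the chat text survives in this example: facial analysis was never opted into, and the unmapped signal is rejected by default.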

The 90-Day Enterprise Playbook

For brands ready to implement these technologies, a structured rollout is essential:

- Days 0–15 (Design & Compliance): Define target journeys (e.g., onboarding or cart recovery). Draft DPDP-compliant privacy notices and define your creative taxonomy (tones, languages, and CTAs).

- Days 16–45 (Build & Integrate): Connect your CRM to the video generation API. Set up WhatsApp Business API flows and pre-render the top "K" variants for your most common emotional triggers.

- Days 46–75 (Pilot Live): Launch to 10–20% of your traffic. Monitor the customer emotion video analytics dashboard to iterate on hooks and tones weekly.

- Days 76–90 (Scale & Codify): Roll out to 80% of traffic. Finalize SLAs and train internal teams on managing the "emotion-aware" content calendar.
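The staged traffic split in the pilot and scale phases can be implemented with deterministic hash bucketing, so a user who sees the pilot stays included when the rollout widens. The salt string, the helper names, and the choice of 20% for the pilot (the top of the 10–20% range) are assumptions for this sketch.

```python
import hashlib

ROLLOUT_BY_PHASE = {"pilot": 20, "scale": 80}  # percent of traffic per phase


def bucket(user_id: str, salt: str = "emotion-video-pilot") -> int:
    """Map a user to a stable bucket in [0, 100) using SHA-256."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def in_rollout(user_id: str, percent: int) -> bool:
    """True if the user's bucket falls inside the current rollout percentage."""
    return bucket(user_id) < percent


users = [f"user-{i}" for i in range(10000)]
pilot_share = sum(in_rollout(u, ROLLOUT_BY_PHASE["pilot"]) for u in users) / len(users)
```

Because the bucket depends only on the user ID and salt, raising the percentage from 20 to 80 adds new users without reshuffling those already in the pilot.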

By following this structured approach, Indian enterprises can ensure they are not just adopting a trend, but building a sustainable, empathetic, and high-ROI marketing engine for the future.

Sources:

- Kantar: 2026 Marketing Trends

- Social Beat: Digital Marketing Trends 2026 in India

- MeitY: Digital Personal Data Protection (DPDP) Act

- Uniphore: Acquisition of Emotion Research Lab

- Spinta Digital: Social Media Marketing Trends 2026

- Cloud9 Digital: AI Marketing Stats 2026

Recommended Internal Links

- Real-time Interactive AI Avatars in India

- WhatsApp Video Commerce Integration Setup

- Sentiment Analysis Video Campaigns

- Sentiment Analysis Video Campaigns: Guide

- Sentiment Analysis Video Campaigns 2026

- AI Voice Cloning for Indian Accents

- Voice Cloning and Emotion Control in India

Frequently Asked Questions

Is emotion AI video marketing legal under India's DPDP Act?

Yes, provided there is clear notice and explicit consent. Brands must inform users that their interactions (voice, text, or video) are being analyzed to personalize the experience. Data minimization and storage limitation rules apply strictly to the emotional metadata generated during these sessions.

How does emotion AI handle the diversity of Indian languages?

Modern emotion AI uses prosody analysis, which focuses on the "how" of speech (pitch, tempo, energy) rather than just the "what." This allows the AI to detect frustration or joy in a Tamil speaker with accuracy comparable to that for a Hindi speaker, even if the specific vocabulary differs.

What is the typical ROI for sentiment-responsive video campaigns?

Enterprises typically see a 2–3x increase in engagement rates and a 20–40% improvement in CTR. By aligning the video's tone with the user's current emotional state, brands reduce friction and build trust faster, leading to higher conversion rates in both D2C and B2B sectors.

Can I use my own brand influencers as AI avatars?

Absolutely. Studio by TrueFan AI, which supports 175+ languages, can train custom "digital twins" of your brand spokespeople or influencers. This ensures that while the delivery is automated and emotion-aware, the face and voice remain consistent with your established brand identity.

How do you prevent the AI from misinterpreting emotions?

We use a "human-in-the-loop" QA process for initial model training and set conservative thresholds for real-time adaptation. If the AI's confidence in an emotional state is low, the system defaults to a "neutral-professional" tone to ensure brand safety and avoid awkward or inappropriate interactions.

What is a customer emotion video analytics dashboard?

It is a specialized reporting tool that maps viewer emotional states (detected during video interaction) against traditional KPIs like CTR and conversion. It allows marketers to see which emotional "journeys" (e.g., moving from 'confused' to 'confident') are driving the most revenue.