Multimodal AI Video Creation in 2026: A Unified AI Video Platform for Enterprise Creative Teams in India

Estimated reading time: 8 minutes

Key Takeaways

- Multimodal AI is displacing simple text-to-video tools by combining text, images, and audio for precise brand control.

- Agentic workflows now orchestrate entire production timelines, allowing Indian enterprises to scale regional content efficiently.

- Identity Locking (ID-Lock) technology keeps characters consistent across different scenes and lighting conditions.

- Compliance with Indian IT Rules 2026 is mandatory, requiring invisible watermarking and licensed digital twin consent.

In the rapidly evolving landscape of digital media, multimodal AI video creation has emerged as the standard for enterprise creative teams in 2026. Moving beyond text-to-video tools, modern production workflows integrate text, high-fidelity images, and synchronized audio to deliver brand-safe, hyper-localized content at scale. As India’s AI video market heads toward a projected $1.2 billion by the end of 2026, the shift from single-prompt generation to multi-input orchestration is no longer a luxury; it is a competitive necessity.

1. The Evolution of Content: Beyond Text-to-Video AI Tools

Generative AI in video production has moved through distinct eras. It began with basic text-to-video (T2V) models that, while impressive, often lacked the granular control required for professional brand work. In 2026, we have entered the era of multimodal AI video creation, where the "black box" of generation has been replaced by a transparent, multi-layered controller stack.

Why Multimodality is the 2026 Baseline

Single-modality (text-only) tools often struggle with "hallucinations": unintended visual artifacts or style drift that can compromise brand identity. Multimodal systems reduce this by letting creators "condition" the AI on multiple inputs simultaneously. For instance, a creator can provide a text script for the narrative, a styleboard image to lock the color palette, and a specific audio track to drive the rhythm of the cuts.

Platforms like Studio by Truefan AI enable creative teams to bridge the gap between abstract prompts and brand-consistent reality, ensuring that every frame aligns with the enterprise’s visual DNA.

The Shift to Agentic Workflows

By 2026, the industry has moved toward "agentic" workflows. These are AI agents that don't just generate a clip but orchestrate the entire production timeline—selecting the best takes, aligning them to a beat, and ensuring temporal consistency across scenes. According to recent industry analysis, nearly 70% of Indian enterprises are expected to adopt agentic AI video workflows by the end of this year to manage the sheer volume of content required for regional markets.

Source: YourStory: Google’s AI Film Tools and Agentic Shift

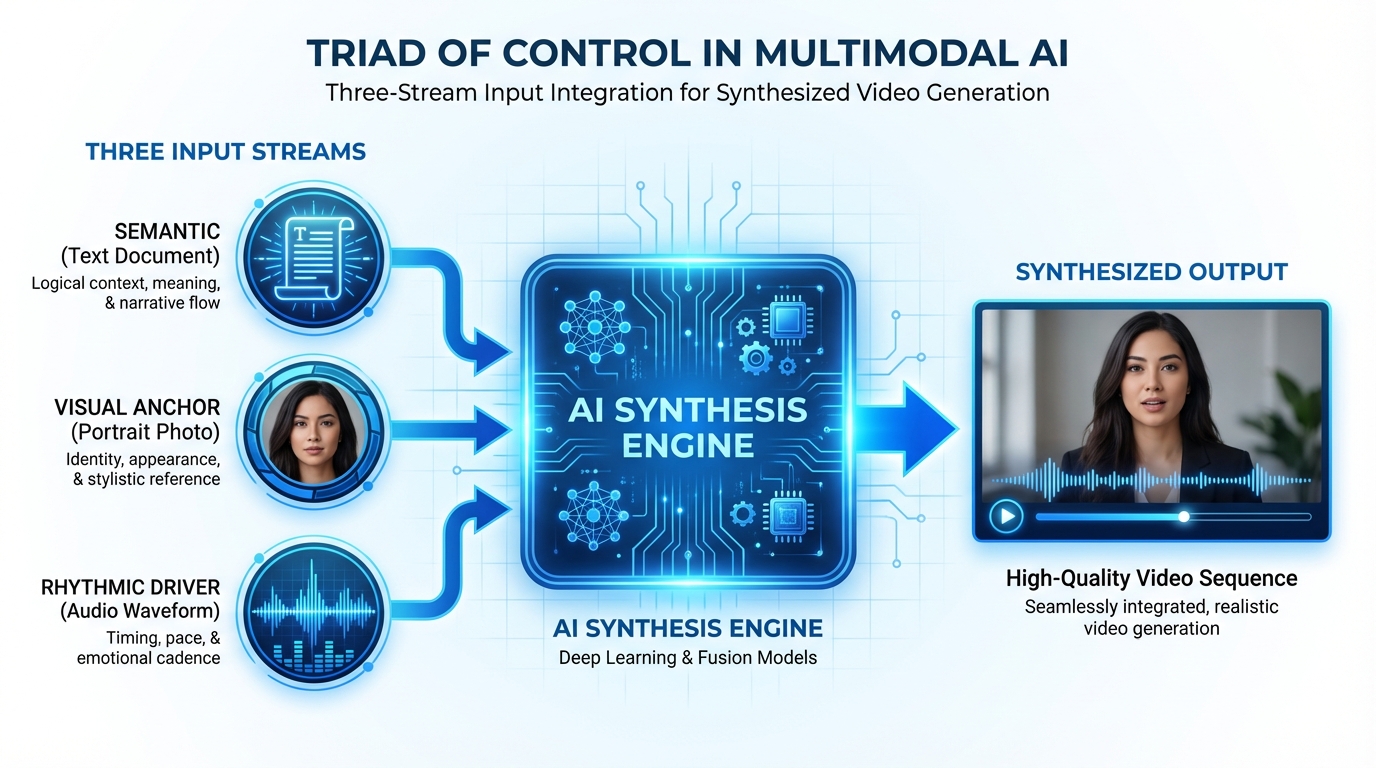

2. The Triad of Control: Text, Image, and Audio Integration

To understand how a modern text-, image-, and audio-to-video generator works, look at the three inputs that drive the synthesis engine.

Text: The Semantic Controller

Text remains the primary driver of intent. In 2026, prompts have evolved into structured "scene directives." Instead of "a man drinking coffee," enterprise prompts look like: "30-second product demo; macro-to-hero shot; empathetic tone; warm 3200K lighting; focus on product label 'TrueBrew'." This level of semantic detail ensures the AI understands the narrative arc and emotional resonance required for the Indian consumer.
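A scene directive is easier to keep consistent across a campaign when it is built from fields rather than typed freehand. The sketch below is a hypothetical schema (the `SceneDirective` class and its field names are illustrative, not any platform's API) that renders the fields in a fixed order.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SceneDirective:
    """Hypothetical schema for a structured scene directive (not a real platform API)."""
    duration_s: int
    kind: str          # e.g. "product demo"
    shot: str          # e.g. "macro-to-hero shot"
    tone: str          # e.g. "empathetic"
    lighting: str      # e.g. "warm 3200K lighting"
    focus: str         # what the camera must keep legible
    extras: List[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        # Emit fields in a fixed order, separated by semicolons, so every
        # scene in a campaign is described with the same structure.
        parts = [
            f"{self.duration_s}-second {self.kind}",
            self.shot,
            f"{self.tone} tone",
            self.lighting,
            f"focus on {self.focus}",
            *self.extras,
        ]
        return "; ".join(parts)


directive = SceneDirective(
    duration_s=30,
    kind="product demo",
    shot="macro-to-hero shot",
    tone="empathetic",
    lighting="warm 3200K lighting",
    focus="product label 'TrueBrew'",
)
print(directive.to_prompt())
```

Run as written, this prints the same "TrueBrew" directive quoted in the paragraph above; optional `extras` let a team append campaign-specific clauses without changing the order of the core fields.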

Image: The Visual Anchor

Image-guided video generation is the key to brand fidelity. When a team uploads a reference image (a product packshot, a brand ambassador’s digital twin, or a specific architectural style), the AI locks these visual elements across the entire video. This greatly reduces the "flicker" and character inconsistency that plagued earlier models.

Audio: The Rhythmic Driver

Audio-driven video generation has transformed lip-sync and pacing. In 2026, the audio isn't just an overlay; it is the master clock. The AI analyzes phonemes (units of sound) and maps them to visemes (the matching mouth shapes) with frame-level precision. Music transients (beats) can also trigger camera movements and scene transitions, creating a "rhythm-locked" edit that feels professionally produced.
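The two audio-driven steps described above, phoneme-to-viseme mapping and beat-locked cuts, can be sketched in a few lines. This is a simplified illustration under stated assumptions: the viseme table is a hypothetical, heavily reduced inventory, the frame rate of 25 fps is assumed, and the phoneme timings and beat times are taken as given from upstream analyzers that are not shown.

```python
from typing import Dict, List, Tuple

# Hypothetical, heavily simplified phoneme-to-viseme table. Production systems
# use larger inventories; these viseme labels are illustrative only.
PHONEME_TO_VISEME: Dict[str, str] = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "aa": "open", "ae": "open",
    "iy": "wide", "ih": "wide",
    "uw": "round", "ow": "round",
}

FPS = 25  # assumed output frame rate: one frame every 40 ms


def viseme_keyframes(phonemes: List[Tuple[str, float, float]]) -> List[Tuple[int, str]]:
    """Turn timed phonemes (label, start_s, end_s) into (frame_index, viseme) keyframes."""
    keyframes = []
    for label, start_s, _end_s in phonemes:
        viseme = PHONEME_TO_VISEME.get(label, "neutral")  # unknown sounds -> rest pose
        keyframes.append((round(start_s * FPS), viseme))
    return keyframes


def snap_cuts_to_beats(cut_times: List[float], beat_times: List[float]) -> List[float]:
    """Move each proposed cut to the nearest detected beat (beats come from an upstream detector)."""
    return [min(beat_times, key=lambda beat: abs(beat - t)) for t in cut_times]


print(viseme_keyframes([("m", 0.00, 0.08), ("aa", 0.08, 0.20), ("p", 0.20, 0.28)]))
# [(0, 'closed'), (2, 'open'), (5, 'closed')]
print(snap_cuts_to_beats([1.1, 2.4], [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]))
# [1.0, 2.5]
```

Because mouth shapes can only change once per rendered frame, the useful precision of the alignment is the frame interval (40 ms at the assumed 25 fps).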

Enterprise Use Case: The Multilingual CEO Address

Imagine a CEO delivering a quarterly update. With a multimodal workflow, the team provides:

1. Text: The script in English.

2. Image: A high-res photo of the CEO for identity locking.

3. Audio: A voice clone of the CEO.

The system then generates 12 versions in different Indian regional languages, each with closely matched lip-sync and culturally adapted backgrounds, in under 10 minutes.

Source: Analytics India Magazine: 6 Text-to-Video Generative AI Models

3. Architecture of Multi-Input Video Generation Tools

The technical backbone of a unified AI video platform in 2026 relies on a "Controller Stack" that manages the weights of different inputs.

The Controller Stack

- Semantic Controller: Parses the text for "action tokens" and "safety constraints."

- Visual Controller: Uses reference image embeddings to enforce subject continuity. If the reference image shows a specific Indian ethnic garment, the AI keeps its fabric texture and drape consistent throughout the motion.

- Audio Controller: Handles the viseme-phoneme alignment and beat detection.
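The three-controller split can be pictured as a routing step that turns raw inputs into one conditioning bundle. The sketch below is a hypothetical illustration: the `Conditioning` class, the blocklist, and the clause-splitting rule are assumptions, and the visual and audio controllers are reduced to pass-throughs.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical corporate blocklist standing in for "safety constraints".
BLOCKED_TERMS = ("competitor logo", "alcohol")


@dataclass
class Conditioning:
    """What the three controllers hand to the (assumed) synthesis engine."""
    action_tokens: List[str]
    safety_flags: List[str]
    reference_images: List[str]
    audio_track: Optional[str]


def semantic_controller(directive: str) -> Tuple[List[str], List[str]]:
    """Split a ';'-separated directive into action tokens and flagged clauses."""
    clauses = [c.strip() for c in directive.split(";") if c.strip()]
    flagged = [c for c in clauses if any(term in c.lower() for term in BLOCKED_TERMS)]
    actions = [c for c in clauses if c not in flagged]
    return actions, flagged


def build_conditioning(directive: str, images: List[str], audio: Optional[str] = None) -> Conditioning:
    actions, flags = semantic_controller(directive)
    # Visual and audio controllers are pass-throughs here; a real stack would
    # compute image embeddings and viseme/beat timings at this point.
    return Conditioning(actions, flags, list(images), audio)


cond = build_conditioning(
    "30-second product demo; macro-to-hero shot; show Competitor Logo on shelf",
    ["packshot.png"],
    "master_vo.wav",
)
print(cond.action_tokens)  # ['30-second product demo', 'macro-to-hero shot']
print(cond.safety_flags)   # ['show Competitor Logo on shelf']
```

Flagged clauses are kept rather than silently dropped, so a reviewer can see why part of the brief did not reach generation.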

Temporal Consistency and Identity Locking

One of the biggest hurdles in AI video has been maintaining the same face or object across different shots. Many 2026 models address this with "Identity Locking" (ID-Lock) mechanisms: from a reference image, the AI builds a 3D latent representation of the subject, so it can be placed in different environments and lighting conditions while remaining consistently recognizable.
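How ID-Lock works internally is not described here, but a team can check its outcome. A common approach is to compare a per-frame identity embedding with the reference embedding by cosine similarity. The sketch assumes those embeddings come from some face-recognition model (not shown); the 0.85 threshold and the toy 3-dimensional vectors are hypothetical.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def drifting_frames(reference: Sequence[float], frames: List[Sequence[float]], threshold: float = 0.85) -> List[int]:
    """Indices of frames whose identity embedding falls below the similarity threshold."""
    return [i for i, emb in enumerate(frames) if cosine_similarity(reference, emb) < threshold]


reference = [1.0, 0.0, 0.0]
frames = [[0.98, 0.05, 0.0], [0.9, 0.1, 0.1], [0.2, 0.9, 0.1]]
print(drifting_frames(reference, frames))  # [2]
```

Real embeddings have hundreds of dimensions, and the right threshold depends on the model; it should be calibrated on footage the team already trusts.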

QA Hooks and Automated Moderation

For enterprises, safety is paramount. Modern integrated AI content creation tools include automated QA hooks. These systems scan every generated frame for:

- Lip-sync scores: Ensuring the mouth movements match the audio.

- Flicker detection: Flagging visual jitter between consecutive frames so it can be corrected.

- Brand safety: Automatically flagging any content that violates pre-set corporate guidelines or Indian regulatory standards.

Source: MediaNama: India’s Deepfake Regulation and Compliance
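The flicker check in the QA list above can be approximated by comparing consecutive frames and flagging changes far larger than the clip's typical change. This is a minimal sketch, assuming frames arrive as equal-length lists of grayscale pixel values; the 3x ratio and the 1.0 floor are hypothetical tuning choices.

```python
from statistics import median
from typing import List


def mean_abs_diff(a: List[int], b: List[int]) -> float:
    """Average per-pixel brightness change between two equal-sized grayscale frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def flicker_frames(frames: List[List[int]], ratio: float = 3.0) -> List[int]:
    """Flag frame i when its change from frame i-1 is far above the clip's typical change.

    Note: intended hard cuts will also be flagged; a real pipeline would
    exclude known cut points before scoring.
    """
    if len(frames) < 2:
        return []
    diffs = [mean_abs_diff(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    baseline = max(median(diffs), 1.0)  # floor avoids flagging noise on near-static clips
    return [i + 1 for i, d in enumerate(diffs) if d > ratio * baseline]


clip = [[10] * 4, [11] * 4, [12] * 4, [60] * 4, [13] * 4, [14] * 4]
print(flicker_frames(clip))  # [3, 4]: the bright flash at frame 3 and the drop back at frame 4
```

Using the median rather than the mean as the baseline keeps a single large jump from raising the threshold and hiding itself.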

4. India-First Requirements: Advanced AI Video Synthesis for India

Creating video for the Indian market presents unique challenges that global, "one-size-fits-all" tools often fail to address. Advanced AI video synthesis for India requires a deep understanding of localization, compliance, and distribution.

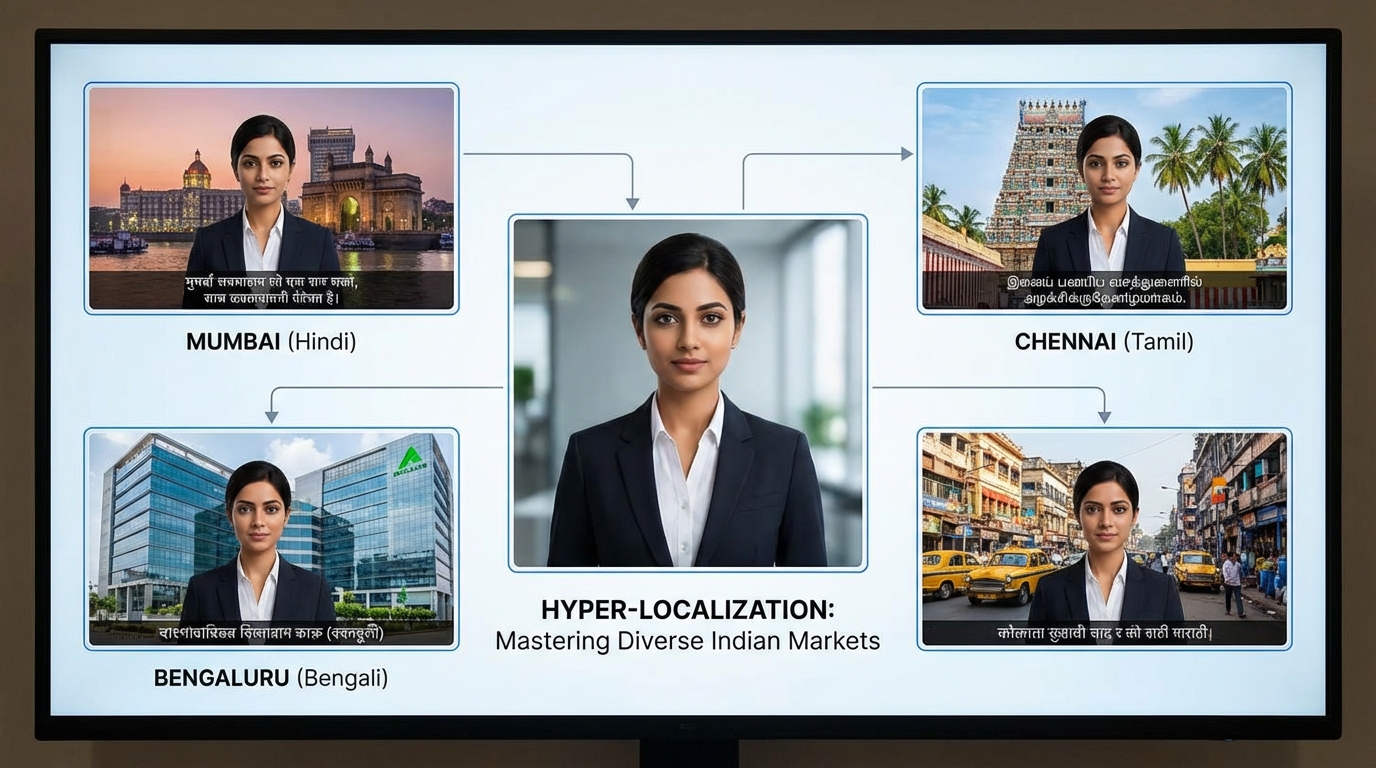

Hyper-Localization Beyond Translation

In 2026, localization isn't just about changing the language; it’s about "transcreation." This involves:

- Dialect Nuance: Moving beyond standard Hindi to support dialects like Bhojpuri, Marwari, or Hyderabadi Urdu.

- Cultural Context: Swapping background elements (e.g., a Mumbai skyline for a West India campaign vs. a Charminar backdrop for South India) based on the viewer's metadata.

- Regional Avatars: Using avatars that reflect the diverse ethnicities of India.

Studio by Truefan AI's 175+ language support and AI avatars ensure that a single campaign can resonate from Mumbai to Mizoram without losing cultural nuance.
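The metadata-driven swaps described in the transcreation list can be sketched as plain lookups with neutral fallbacks. Everything here is hypothetical: the asset and voice IDs are illustrative names, and the language keys are ISO 639 codes (Hindi `hi`, Bhojpuri `bho`, Marwari `mwr`) chosen to echo the dialects mentioned above.

```python
from typing import Dict

# Hypothetical asset IDs; regions and languages follow the examples in the text.
BACKGROUND_BY_REGION: Dict[str, str] = {"west": "mumbai_skyline", "south": "charminar"}
VOICE_BY_LANGUAGE: Dict[str, str] = {"hi": "hindi_standard", "bho": "bhojpuri", "mwr": "marwari"}


def localize(viewer: Dict[str, str]) -> Dict[str, str]:
    """Pick background and voice from viewer metadata, with neutral fallbacks."""
    region = viewer.get("region", "").lower()
    language = viewer.get("language", "hi").lower()
    return {
        "background": BACKGROUND_BY_REGION.get(region, "neutral_studio"),
        "voice": VOICE_BY_LANGUAGE.get(language, "hindi_standard"),
    }


print(localize({"region": "South", "language": "hi"}))
print(localize({"region": "West", "language": "mwr"}))
```

The explicit fallbacks matter in practice: a viewer with missing or unexpected metadata still gets a coherent video instead of a failed render.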

Compliance with Indian IT Rules 2026

The Indian government has introduced stringent amendments to the IT Rules, requiring all AI-generated media to be clearly labeled. Platforms must now provide:

- Invisible Watermarking: Machine-readable markers embedded in the file (for example, in its metadata) that identify it as AI-generated.

- Consent Logs: Proof that the digital twin or avatar used has been fully licensed.

- Rapid Takedown APIs: The ability to remove or modify content within hours of a regulatory request.
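The labeling and consent requirements listed above imply that each rendered file needs a traceable record. The sketch below builds one as JSON under a hypothetical schema (field names, the consent ID format, and the generator name are assumptions). It is not an invisible watermark, and it is not a production provenance format; standards such as C2PA define signed manifests for that purpose. The SHA-256 digest simply ties the record to the exact bytes, which helps match a takedown request to the right file.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_ai_label(video_bytes: bytes, consent_id: str, generator: str) -> str:
    """Return a JSON provenance record for one rendered file (hypothetical schema).

    The SHA-256 digest ties the record to these exact bytes, which helps match
    a takedown request to the right file; it is not itself a watermark.
    """
    record = {
        "ai_generated": True,
        "sha256": hashlib.sha256(video_bytes).hexdigest(),
        "consent_id": consent_id,   # reference into the avatar-consent log
        "generator": generator,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(record, sort_keys=True)


label = json.loads(build_ai_label(b"example video bytes", "consent-0042", "studio-render-v1"))
print(label["ai_generated"], label["sha256"][:12])
```

Any re-encode changes the bytes and therefore the digest, so a record like this should be regenerated for every delivered variant.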

Low-Latency and WhatsApp Integration

India is a mobile-first, WhatsApp-first economy. A unified AI video platform must be able to render videos in under 60 seconds and push them directly through WhatsApp Business APIs. Research shows that personalized AI video messages delivered via WhatsApp see a 4.5x higher conversion rate compared to standard text-based marketing in 2026.

Source: YourStory: India’s AI Boom and Enterprise Adoption

5. The Multimodal Video Production Workflow

To achieve efficiency, enterprise teams must adopt a structured multimodal video production workflow. This moves the process from "trial and error" to a predictable manufacturing line.

Phase 1: The Multi-Input Brief

Instead of a traditional storyboard, teams now create a "Modality Brief."

- Text: The script and motion prompts.

- Image: The "Style Lock" images (product, talent, environment).

- Audio: The "Master VO" or music track.

Phase 2: Synthesis and Scene Weighting

In the platform, creators set a "weight" for each input. For a product-heavy scene, the image weight might be 0.9 (on a 0-to-1 scale) so the product renders accurately. For a dialogue-heavy scene, the audio weight takes priority to keep the lip-sync tight.
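Per-scene weighting can be illustrated with a small helper that normalizes the weights and reports which modality dominates. Normalizing so the weights sum to 1 is an assumed convention, not a documented platform behavior, and the scene names and numbers are hypothetical, modeled on the product-heavy and dialogue-heavy cases in the paragraph above.

```python
from typing import Dict


def normalize_weights(weights: Dict[str, float]) -> Dict[str, float]:
    """Rescale per-modality weights so they sum to 1 (an assumed convention)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one modality weight must be positive")
    return {modality: w / total for modality, w in weights.items()}


def dominant_modality(weights: Dict[str, float]) -> str:
    norm = normalize_weights(weights)
    return max(norm, key=norm.get)


# Hypothetical per-scene settings for a product-heavy and a dialogue-heavy scene.
SCENES = {
    "product_closeup": {"text": 0.3, "image": 0.9, "audio": 0.2},
    "ceo_dialogue": {"text": 0.3, "image": 0.4, "audio": 0.9},
}

for scene, weights in SCENES.items():
    print(scene, dominant_modality(weights), normalize_weights(weights))
```

Normalizing makes scenes comparable: a 0.9 image weight means more when the other inputs are low than when all three are set high.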

Phase 3: Automated QA and Human-in-the-Loop

The AI generates the first draft. An automated "Compliance Agent" checks for brand violations and technical glitches. A human editor then performs a "final polish"—perhaps adjusting a camera angle or a subtitle placement—before hitting "Scale."

Phase 4: Regional Scaling

Once the master video is approved, the system automatically generates 20+ regional variants. This process, which used to take weeks of filming and editing, is now completed in under an hour. This efficiency is the core of integrated AI content creation.

Source: Analytics India Magazine: Practitioner Experimentation with AI Tools
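The regional scaling step in Phase 4 amounts to fanning one approved master out into one render job per target language. The sketch below is illustrative only: the `VariantJob` type, the master ID, and the background asset names are hypothetical, and the language codes are ISO 639-1 codes for Hindi, Tamil, Telugu, Bengali, and Marathi.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class VariantJob:
    master_id: str
    language: str
    background: str


def fan_out(master_id: str, languages: List[str], background_for: Dict[str, str]) -> List[VariantJob]:
    """Create one render job per target language from an approved master.

    Languages are de-duplicated while preserving order, so a repeated entry
    in a campaign sheet does not trigger a duplicate render.
    """
    unique = list(dict.fromkeys(languages))
    return [VariantJob(master_id, lang, background_for.get(lang, "master_background")) for lang in unique]


jobs = fan_out("launch-master-01", ["hi", "ta", "te", "bn", "mr", "ta"], {"ta": "chennai_marina"})
print(len(jobs))  # 5
```

Every job points back to the same `master_id`, which keeps the approved master as the single source of truth for audit trails.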

6. Choosing a Unified AI Video Platform & ROI

For the CFO and CTO, the decision to invest in a unified AI video platform comes down to measurable ROI and risk mitigation.

Enterprise Selection Criteria

- Security Standards: ISO 27001 and SOC 2 certifications should be treated as baseline requirements for handling enterprise data.

- API-First Architecture: The platform must integrate with your existing CRM (Salesforce/HubSpot) and DAM (Adobe Experience Manager).

- Scalability: Can the platform handle 10,000 unique video renders in a single hour for a flash sale?

- Governance: Role-based access control (RBAC) and full audit trails for every video generated.
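The scalability question above can be sized with Little's law: the average number of jobs in progress equals the arrival rate times the time each job spends in the system. This sketch assumes steady arrivals and reuses render times from the FAQ below (under 3 minutes for a 60-second HD video, about 30 seconds for rapid-render flows). It ignores queueing headroom, retries, and failed renders, so real capacity planning needs a margin on top.

```python
import math


def concurrent_renders_needed(renders_per_hour: int, seconds_per_render: float) -> int:
    """Little's law (L = arrival rate x time in system), rounded up to whole render slots."""
    # Multiply before dividing so round numbers stay exact in floating point.
    return math.ceil(renders_per_hour * seconds_per_render / 3600)


print(concurrent_renders_needed(10_000, 180))  # 500 slots at ~3 minutes per render
print(concurrent_renders_needed(10_000, 30))   # 84 slots for 30-second "rapid-render" jobs
```

The result shows why render time is a cost lever: cutting it from 180 to 30 seconds reduces the required parallel capacity roughly sixfold for the same hourly volume.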

Solutions like Studio by Truefan AI demonstrate ROI through a 60% reduction in "time-to-first-edit" while maintaining 4K output standards.

The ROI Framework

- Production Cost Savings: Replacing physical shoots with AI synthesis can reduce costs by up to 90%.

- Speed to Market: Reducing the production cycle from 14 days to 14 minutes allows brands to react to trends in real-time.

- Performance Lift: Personalized, localized videos consistently outperform generic content in CTR (Click-Through Rate) and VTR (View-Through Rate).

Source: YourStory: Meta’s AI Ad Automation by 2026
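The ROI framework above can be turned into a quick calculation. The inputs are hypothetical: a 1,000,000 shoot budget reduced by 90% (the upper bound cited above) and a 300,000 platform cost over the same period. The speed-up uses the 14-day and 14-minute cycle times from the list. This illustrates the arithmetic, not a forecast.

```python
def simple_roi(baseline_cost: float, ai_cost: float, platform_cost: float) -> float:
    """ROI = (production savings - platform investment) / platform investment."""
    savings = baseline_cost - ai_cost
    return (savings - platform_cost) / platform_cost


def cycle_speedup(old_minutes: float, new_minutes: float) -> float:
    """How many times faster the new production cycle is."""
    return old_minutes / new_minutes


# Hypothetical inputs: a 1,000,000 shoot budget cut by 90% (the article's upper bound)
# and a 300,000 platform cost for the same period.
print(simple_roi(1_000_000, 100_000, 300_000))   # 2.0, i.e. 200% ROI
print(cycle_speedup(14 * 24 * 60, 14))           # 1440.0x faster
```

Performance lift (CTR, VTR) is left out because it depends on campaign data; it would add to the savings term once measured in the pilot.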

7. Implementation & Compliance: The 30-Day Playbook

Transitioning to a multimodal AI video creation workflow doesn't happen overnight. It requires a strategic 30-day pilot.

Days 1-10: Asset Ingestion and Security

- Upload brand guidelines and reference image libraries.

- Set up SSO (Single Sign-On) and define user permissions.

- Configure the "Safety Filter" to align with corporate policy.

Days 11-20: The "Hero" Pilot

- Select one high-impact campaign (e.g., a new product launch).

- Generate the master video using text, image, and audio inputs.

- Run the automated QA and gather internal feedback.

Days 21-30: Regional Expansion and Distribution

- Generate regional variants in 5+ Indian languages.

- Integrate with the WhatsApp Business API for distribution.

- Measure the initial engagement metrics and calculate the ROI.

Conclusion

In 2026, "Consent-First" is the only way forward for enterprise video production. By adopting a unified AI video platform, brands in India can navigate the complexities of hyper-localization and regulatory compliance while achieving unprecedented creative scale. Whether it is through identity locking or agentic workflows, the integration of text, image, and audio ensures that every piece of content is not just generated, but crafted to meet the highest brand standards. As the digital landscape continues to shift, those who master multimodal synthesis today will lead the market tomorrow.

Frequently Asked Questions

Q1: How does multimodal AI video creation differ from standard text-to-video?

Standard text-to-video relies solely on a text prompt, which often leads to unpredictable results. Multimodal creation uses text, images, and audio as simultaneous inputs, providing the "guardrails" needed for professional brand consistency and precise control over the output.

Q2: Is it possible to maintain the same character across different scenes in 2026?

Yes. Through "Identity Locking" and image-guided video generation, you can use a single reference photo to keep the character’s face, hair, and style consistent across multiple shots, even if the lighting or environment changes.

Q3: How do I ensure my AI videos are compliant with Indian IT Rules?

Choosing a platform like Studio by Truefan AI ensures you have built-in watermarking and consent-based avatar usage. These platforms are designed to meet the 2026 regulatory requirements for transparency and traceability in AI-generated media.

Q4: Can these tools handle Indian regional languages and dialects?

Modern AI video synthesis tools built for India now support over 175 languages, including deep support for Indian regional dialects. They don't just translate; they use localized voice models that capture the specific accent and cultural nuances of each region.

Q5: What is the average render time for a high-quality enterprise video?

While it varies by complexity, a unified AI video platform in 2026 can typically render a 60-second HD video in under 3 minutes. Some specialized "rapid-render" flows can produce social-ready content in as little as 30 seconds.

Q6: Can I integrate my own company spokesperson as an AI avatar?

Absolutely. Most enterprise platforms offer "Custom Avatar Training." By providing a few minutes of high-quality footage of your spokesperson, the AI creates a digital twin that can then "speak" any script in any language, 24/7.