Real-time interactive AI avatars in India (2026): An enterprise implementation guide for two-way customer conversations, Zoom, and live CX

Key Takeaways

- Real-time interactive AI avatars combine streaming ASR, LLM + RAG, neural TTS, live animation, and WebRTC to enable human-like, two-way conversations.

- Latency and barge-in are critical for natural interactions; a sub-300 ms audio round-trip and immediate interruptibility are must-haves.

- Indian enterprises must build compliance with the Digital Personal Data Protection (DPDP) Act, 2023 into their deployments through explicit consent and data-residency controls, and should watermark AI-generated video.

- A structured 90-day rollout ties investment to KPIs like AHT, FCR, deflection rate, and CSAT.

- Platforms like Studio by TrueFan AI, HeyGen, and Tavus help scale live avatar CX across languages and channels including Zoom.

The landscape of customer experience is undergoing a seismic shift. In India's digital-first economy, real-time interactive AI avatars have emerged in 2026 as the gold standard for enterprise engagement. No longer confined to static video messages or clunky chatbots, these interactive digital humans now conduct face-to-face AI conversations with the nuance, speed, and empathy of a human agent, combined with the elastic scalability of cloud computing.

Executive Summary

- The Definition: Real-time interactive AI avatars are photorealistic digital presenters capable of two-way, low-latency video and audio communication, featuring interruptibility and dynamic emotional responses.

- The India Context: Driven by 95% urban 5G penetration and the Digital Personal Data Protection (DPDP) Act, 2023, Indian enterprises are transitioning from “AI-assisted” to “AI-led” customer service.

- The Promise: This guide provides a vendor-agnostic blueprint for implementing live AI avatar customer service, covering everything from WebRTC architecture to Zoom integration and DPDP compliance.

For Customer Service Leaders and Enterprise Digital Transformation teams, the goal is clear: provide live AI avatar customer service that integrates seamlessly with existing CRM/CCaaS stacks while respecting India’s data protection rules and sector-specific data residency requirements. This post outlines how to bridge the gap between experimental POCs and production-grade, two-way AI avatar conversations.

1. Defining the 2026 Standard: Architecture of Two-Way AI Avatar Conversations

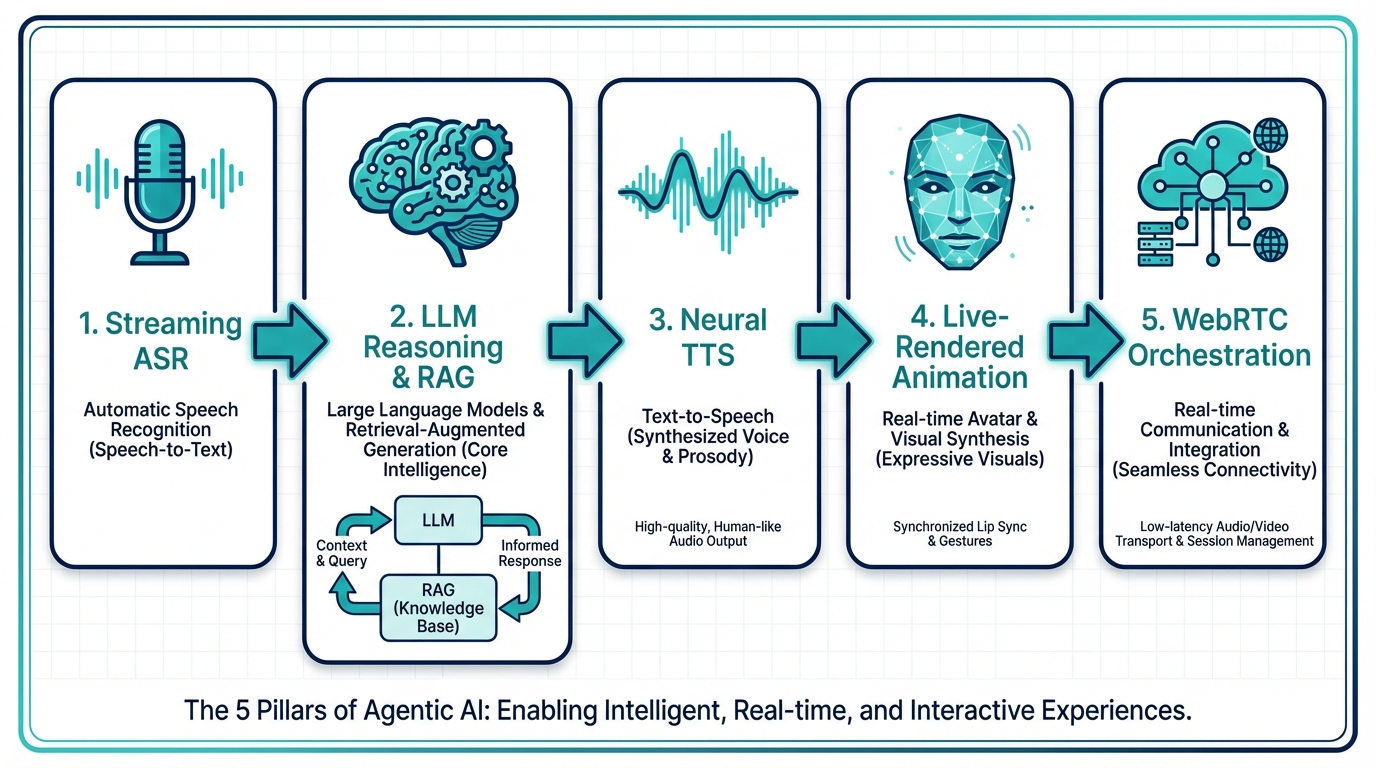

To understand why real-time interactive AI avatars are revolutionary for Indian enterprises in 2026, we must look at the underlying “Agentic AI” stack. Unlike traditional video, which is pre-rendered and then served, real-time avatars are “streamed” in a continuous loop of perception and action.

The Technical Blueprint

A production-grade interactive digital humans platform relies on five core pillars:

- Streaming ASR (Automatic Speech Recognition): Converts the user’s spoken audio into text in real time. In the Indian context, this requires sophisticated models capable of “Hinglish” code-switching and regional language recognition (Tamil, Telugu, Bengali, etc.).

- LLM Reasoning & RAG: The “brain” of the avatar. Using Retrieval-Augmented Generation (RAG), the AI fetches verified snippets from your enterprise knowledge base to ensure accuracy.

- Neural TTS (Text-to-Speech): Converts the LLM’s response back into audio. By 2026, these voices include prosody control, allowing the avatar to sound concerned, excited, or professional based on the customer’s sentiment.

- Live-Rendered Animation (Visemes): The visual engine generates mouth shapes (visemes) and micro-expressions that sync perfectly with the audio.

- WebRTC Orchestration: The protocol that ensures the video and audio reach the user with sub-second latency.
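The five pillars form one streaming pipeline. The Python sketch below uses hypothetical stub functions in place of vendor ASR, RAG, LLM, TTS, and animation services; the point it illustrates is that each stage consumes the previous stage's output incrementally, so the avatar can start speaking the first complete sentence while the LLM is still producing the rest.

```python
import re
from typing import Dict, Iterator, List

# Hypothetical stand-ins for vendor services; a real deployment calls streaming APIs.

def stream_asr(audio_chunks: List[str]) -> str:
    """Stub ASR: joins chunks (already text here) into the final transcript."""
    return " ".join(audio_chunks)

def retrieve(query: str, kb: Dict[str, str]) -> List[str]:
    """Stub RAG retrieval: returns snippets whose key word appears in the query."""
    return [text for key, text in kb.items() if key in query.lower()]

def stream_llm(snippets: List[str]) -> Iterator[str]:
    """Stub LLM: streams a grounded answer word by word."""
    answer = " ".join(snippets) if snippets else "Let me connect you to a specialist."
    for word in answer.split(" "):
        yield word + " "

def sentences(tokens: Iterator[str]) -> Iterator[str]:
    """Buffers streamed tokens and releases each sentence as soon as it ends."""
    buffer = ""
    for token in tokens:
        buffer += token
        if re.search(r"[.?!]\s$", buffer):
            yield buffer.strip()
            buffer = ""
    if buffer.strip():
        yield buffer.strip()

def synthesize(sentence: str) -> Dict[str, str]:
    """Stub TTS + animation: a real engine returns audio plus viseme timings."""
    return {"audio": f"<speech:{sentence}>", "visemes": f"<visemes:{len(sentence)} chars>"}

def run_turn(audio_chunks: List[str], kb: Dict[str, str]) -> Iterator[Dict[str, str]]:
    """One conversational turn; each yielded bundle would be sent out over WebRTC."""
    transcript = stream_asr(audio_chunks)
    snippets = retrieve(transcript, kb)
    for sentence in sentences(stream_llm(snippets)):
        yield synthesize(sentence)
```

Because `run_turn` is a generator, the first audio/viseme bundle is available as soon as the first sentence is complete, which is what keeps time-to-first-audio low.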

Latency: The Make-or-Break Metric

In 2026, the “uncanny valley” is bridged not just by visuals, but by timing.

- Audio Round-trip: Must be <300 ms for natural turn-taking.

- Visual Sync: Mouth movements must begin within 1 second of the user finishing their sentence.

- Barge-in Capability: Using Voice Activity Detection (VAD), the avatar must stop speaking immediately if the user interrupts, just like a human would.
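Two of these requirements can be checked mechanically. The sketch below sums hypothetical per-stage latencies against the 300 ms audio target above, and implements barge-in with a simple energy-threshold VAD. Production systems typically use model-based VADs; the threshold and frame count here are hypothetical tuning values.

```python
from dataclasses import dataclass, field
from typing import Dict, List

AUDIO_ROUND_TRIP_BUDGET_MS = 300  # target stated in the text

def within_budget(stage_ms: Dict[str, float], budget_ms: float = AUDIO_ROUND_TRIP_BUDGET_MS) -> bool:
    """True if the summed per-stage latencies fit inside the round-trip budget."""
    return sum(stage_ms.values()) <= budget_ms

@dataclass
class BargeInDetector:
    """Energy-based VAD sketch: flags an interruption after N consecutive loud frames."""
    energy_threshold: float = 0.02  # hypothetical RMS threshold; tune per channel
    frames_required: int = 3        # e.g. 3 x 20 ms frames = 60 ms of user speech
    _loud_run: int = field(default=0, init=False, repr=False)

    def feed(self, frame: List[float], avatar_speaking: bool) -> bool:
        """Returns True when avatar playback should be cut immediately."""
        rms = (sum(s * s for s in frame) / len(frame)) ** 0.5 if frame else 0.0
        self._loud_run = self._loud_run + 1 if rms >= self.energy_threshold else 0
        return avatar_speaking and self._loud_run >= self.frames_required
```

Requiring a short run of loud frames, rather than a single one, keeps a cough or a desk knock from cutting the avatar off mid-sentence while still reacting within tens of milliseconds.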

Platforms like Studio by TrueFan AI enable enterprises to orchestrate these complex video layers, ensuring that the pre-session and post-session interactions are as polished as the live conversation itself.

Source: Inside D-ID’s real-time AI avatar technology.

2. The 2026 Platform Landscape: HeyGen, Tavus, and Selection Criteria

Choosing the right interactive digital humans platform requires a balance between visual fidelity, API maturity, and regional compliance. While the market is crowded, a few distinct options stand out for different use cases.

HeyGen LiveAvatar

HeyGen has moved beyond its origins in marketing videos to offer LiveAvatar for real-time sessions, backed by implementation documentation for developers. Its strengths lie in its large library of avatars and its “Streaming API,” which supports bidirectional communication over WebRTC. For Indian enterprises, HeyGen offers a balance of ease of use and high-quality visual output.

Tavus Conversational Video Interface (CVI)

Tavus builds its real-time offering around the Conversational Video Interface (CVI), designed specifically for developers who want to embed a “face” into their web applications. It excels at high-fidelity lip-sync and offers deep hooks for React and other modern web frameworks, making it a favorite for product onboarding and technical support.

BHuman Alternatives Comparison

While BHuman is a pioneer in personalized video, it primarily focuses on asynchronous video that looks personalized. For live AI avatar customer service, enterprises need synchronous interaction.

| Feature | BHuman (Async) | HeyGen/Tavus (Real-time) | Studio by TrueFan AI (Orchestration) |
|---|---|---|---|
| Interaction Type | One-way (Video Message) | Two-way (Live Conversation) | Hybrid (Automation + Live) |
| Latency Focus | N/A (Pre-rendered) | Ultra-low (<500 ms) | Optimized Delivery |
| Primary Use Case | Cold Outreach / Marketing | Customer Support / Sales | Enterprise CX / Scaling |
| Language Support | High | High | 175+ Languages |

When evaluating these tools, Indian enterprises must prioritize dynamic avatar responses: the ability of the avatar to adjust its cultural cues and language based on where the user is located in India.

Source: AI Avatar Development Companies in India - Softlabs Group.
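One way to make the “balance” in this section concrete is a weighted scoring matrix. The criteria come from this section (visual fidelity, API maturity, regional compliance, Indic language coverage); the weights and 1-5 scores are hypothetical placeholders for your own evaluation and are not ratings of any named vendor.

```python
from typing import Dict, List, Tuple

# Hypothetical weights reflecting the criteria in this section; they must sum to 1.0.
WEIGHTS: Dict[str, float] = {
    "visual_fidelity": 0.25,
    "api_maturity": 0.25,
    "regional_compliance": 0.30,
    "indic_language_coverage": 0.20,
}

def weighted_score(scores: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combines 1-5 criterion scores into a single weighted score on the same scale."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(weights[c] * scores[c] for c in weights), 2)

def rank_vendors(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Returns (vendor, score) pairs, best first."""
    ranked = [(name, weighted_score(scores)) for name, scores in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)

if __name__ == "__main__":
    # Placeholder scores for anonymized candidates, not ratings of real vendors.
    print(rank_vendors({
        "Vendor A": {"visual_fidelity": 4, "api_maturity": 5,
                     "regional_compliance": 3, "indic_language_coverage": 4},
        "Vendor B": {"visual_fidelity": 5, "api_maturity": 3,
                     "regional_compliance": 5, "indic_language_coverage": 3},
    }))
```

Weighting regional compliance highest reflects the DPDP and residency concerns discussed later; adjust the weights to your own risk profile before scoring.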

3. Implementation Deep Dive: API Integration and Zoom Meetings

Integrating real-time interactive AI avatars into your existing workflow is no longer a “black box” project. Modern APIs have standardized the integration pattern, which makes a predictable 90-day roadmap achievable.

Conversational Avatar API Integration Patterns

The integration typically follows a session-based lifecycle:

- Session Initiation: Your backend calls the Avatar API to create a session, passing a “System Prompt” that defines the avatar’s persona (e.g., “You are a helpful banking assistant for HDFC”).

- Signaling: A WebRTC handshake is performed between the user's browser and the avatar server.

- Context Injection: Using function calling, the avatar can “pull” data from your CRM (like Salesforce or Zendesk). For example, “I see you are calling about your recent order #12345.”

- Event Handling: Your application listens for events like `on_user_interruption` or `on_human_handoff_required` (exact event names vary by vendor).
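The lifecycle above can be sketched in vendor-neutral Python. Everything here is hypothetical: the `AvatarSession` class, the request fields, the `lookup_order` tool, and the in-memory CRM stand in for whatever your vendor's SDK and your CRM actually expose. Signaling (the WebRTC offer/answer exchange) is omitted because vendor SDKs normally handle it.

```python
from typing import Callable, Dict, List

class AvatarSession:
    """Hypothetical session wrapper: persona prompt, tools, and event handlers."""

    def __init__(self, system_prompt: str, tools: Dict[str, Callable[..., str]]):
        # Session initiation: the persona prompt and tool names go in the create request.
        self.create_request = {"system_prompt": system_prompt, "tools": sorted(tools)}
        self.tools = tools
        self.handlers: Dict[str, List[Callable[[dict], None]]] = {}

    def on(self, event: str, handler: Callable[[dict], None]) -> None:
        """Event handling: registers a callback for a named session event."""
        self.handlers.setdefault(event, []).append(handler)

    def call_tool(self, name: str, **kwargs: str) -> str:
        """Context injection: routes the LLM's function call to your backend."""
        if name not in self.tools:
            raise KeyError(f"tool not registered: {name}")
        return self.tools[name](**kwargs)

    def emit(self, event: str, payload: dict) -> None:
        """Dispatches an event; in production these arrive from the vendor SDK."""
        for handler in self.handlers.get(event, []):
            handler(payload)

# Hypothetical CRM lookup exposed to the LLM via function calling.
FAKE_CRM = {"12345": "shipped"}

def lookup_order(order_id: str) -> str:
    status = FAKE_CRM.get(order_id, "not found")
    return f"Order #{order_id} status: {status}"

if __name__ == "__main__":
    session = AvatarSession("You are a helpful banking assistant.", {"lookup_order": lookup_order})
    session.on("on_human_handoff_required", lambda e: print("Escalating:", e["reason"]))
    print(session.call_tool("lookup_order", order_id="12345"))  # Order #12345 status: shipped
    session.emit("on_human_handoff_required", {"reason": "customer asked for an agent"})
```

Keeping tools in an explicit registry means the avatar can only reach CRM functions you have deliberately exposed.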

AI Avatar Zoom Meetings Integration

One of the most requested features in 2026 is the ability for an AI avatar to join a Zoom or Microsoft Teams meeting as a participant.

- The Bot Approach: A virtual “meeting bot” joins the call, capturing the audio/video feed and projecting the avatar’s face as its camera output.

- Use Cases: Multilingual Q&A sessions, automated HR onboarding, and 24/7 technical training.

- Operational Note: Ensure your Zoom integration surfaces the “consent to record” prompt to participants and does not capture audio or video until consent is given, to remain compliant with Indian privacy law.
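A meeting bot's media capture can be gated on consent with a small state machine. This sketch does not use the Zoom or Teams SDKs. The states and the single meeting-level consent flag are hypothetical simplifications; in a real bot, the consent signal would come from the platform's own recording-consent flow.

```python
from enum import Enum, auto

class BotState(Enum):
    JOINING = auto()
    AWAITING_CONSENT = auto()
    ACTIVE = auto()  # capturing audio and projecting the avatar as camera output
    LEFT = auto()

class MeetingBot:
    """Hypothetical meeting bot that never captures media before consent."""

    def __init__(self) -> None:
        self.state = BotState.JOINING

    def joined(self) -> None:
        if self.state is BotState.JOINING:
            self.state = BotState.AWAITING_CONSENT

    def consent_result(self, granted: bool) -> None:
        # Consent signals that arrive in any other state are ignored.
        if self.state is not BotState.AWAITING_CONSENT:
            return
        # Declined consent means the bot leaves rather than capturing without permission.
        self.state = BotState.ACTIVE if granted else BotState.LEFT

    def can_capture_media(self) -> bool:
        return self.state is BotState.ACTIVE
```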

Studio by TrueFan AI's 175+ language support and AI avatars provide the necessary linguistic breadth to ensure these Zoom meetings are accessible to every stakeholder in India, regardless of their primary language.

Source: HeyGen API LiveAvatar Pricing & Subscriptions.

4. Compliance-by-Design: Navigating India’s DPDP Act in 2026

In 2026, data privacy is not an afterthought—it is a license to operate. The Digital Personal Data Protection (DPDP) Act has fundamentally changed how Indian enterprises handle biometric and video data.

Key Compliance Mandates for AI Avatars

- Explicit Consent: Before a session begins, users must be presented with a clear, plain-language modal explaining that they are interacting with an AI and how their voice/video data will be used.

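- Data Residency: The DPDP Act does not impose blanket data localization, but it allows the government to restrict transfers to specific countries, and sectoral regulators (for example, the RBI for payment data) require certain data to stay in India. Many enterprises therefore prefer vendors that can process and store data in India regions such as AWS Mumbai or Azure Central India.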

- The Right to Erasure: Users must have a simple way to request the deletion of their interaction logs and any voice prints captured during the session.

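- Watermarking & Disclosure: The DPDP Act itself does not mandate watermarking, but to prevent deepfake misuse, and in line with Indian government guidance on labeling synthetic media, all real-time AI video should carry a digital watermark or a persistent “AI Generated” overlay.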
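These mandates can be turned into a pre-deployment check plus an erasure routine. The config keys and storage layout below are hypothetical. The region identifiers are real cloud region names (`ap-south-1` is AWS Mumbai, `ap-south-2` is AWS Hyderabad, `centralindia` and `southindia` are Azure regions), but which regions your policy accepts is your decision.

```python
from typing import Dict, List

# Example India-hosted regions: AWS Mumbai/Hyderabad, Azure Central/South India.
INDIA_REGIONS = {"ap-south-1", "ap-south-2", "centralindia", "southindia"}

def preflight_issues(config: Dict[str, object]) -> List[str]:
    """Returns a list of problems; an empty list means these hypothetical checks passed."""
    issues = []
    if config.get("processing_region") not in INDIA_REGIONS:
        issues.append("processing region is outside India")
    if config.get("storage_region") not in INDIA_REGIONS:
        issues.append("storage region is outside India")
    if not config.get("ai_disclosure_overlay"):
        issues.append("persistent 'AI Generated' overlay/watermark is disabled")
    if not config.get("erasure_endpoint"):
        issues.append("no erasure endpoint for logs and voice prints")
    return issues

def erase_user_data(store: Dict[str, Dict[str, list]], user_id: str) -> int:
    """Deletes a user's interaction logs and voice prints; returns records removed."""
    removed = 0
    for collection in ("interaction_logs", "voice_prints"):
        records = store.get(collection, {}).pop(user_id, [])
        removed += len(records)
    return removed
```

Running `preflight_issues` in CI blocks a release that would silently move processing out of India or switch off the disclosure overlay.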

Implementing the “Consent UX”

A compliant implementation should include:

- Pre-session: A checkbox for “I consent to AI-driven video processing.”

- In-session: A visible watermark indicating the avatar is a digital twin.

- Post-session: An automated email summary of the data collected and a link to the privacy dashboard.
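The three touchpoints can be enforced in code rather than left to the UI alone. In this sketch, with hypothetical class and field names, a session cannot start without an active, purpose-specific consent record, and the post-session summary is built only from data categories actually collected.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional

@dataclass
class ConsentRecord:
    user_id: str
    purpose: str                          # e.g. "AI-driven video processing"
    granted_at: datetime
    withdrawn_at: Optional[datetime] = None

    def is_active(self) -> bool:
        return self.withdrawn_at is None

@dataclass
class GatedSession:
    consent: Optional[ConsentRecord]
    watermark_text: str = "AI Generated"  # in-session: rendered for the whole session
    collected: List[str] = field(default_factory=list)

    def start(self) -> None:
        """Pre-session: refuses to start without active consent."""
        if self.consent is None or not self.consent.is_active():
            raise PermissionError("consent required before AI video processing")

    def record(self, data_category: str) -> None:
        """Tracks each category of data captured, once."""
        if data_category not in self.collected:
            self.collected.append(data_category)

    def summary(self) -> str:
        """Post-session: plain-language text for the summary email."""
        items = ", ".join(self.collected) or "none"
        return f"Data collected: {items}. Manage or erase it from your privacy dashboard."
```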

By adhering to these standards, solutions like Studio by TrueFan AI demonstrate ROI through reduced legal risk and increased customer trust, which are critical for long-term adoption in the Indian market.

Source: Official Digital Personal Data Protection Act 2023 - MeitY.

5. Measuring Success: ROI, KPIs, and the 90-Day Roadmap

Deploying real-time interactive AI avatars in India in 2026 is a significant investment. To justify the spend, enterprises must move beyond “cool factor” metrics and focus on hard business outcomes.

The ROI Framework

- AHT (Average Handle Time): AI avatars can resolve queries faster by instantly accessing documentation that a human agent might take minutes to find.

- FCR (First Contact Resolution): With RAG grounding, avatars answer from verified knowledge-base content rather than from the model's memory alone, which raises accuracy and reduces follow-up calls.

- Deflection Rate: How many live human sessions were avoided? In 2026, top-tier Indian banks are seeing 40-60% deflection for Tier-1 support queries.

- CSAT (Customer Satisfaction): Many users prefer the “judgment-free” interaction of an AI avatar for sensitive issues like debt collection or health inquiries.
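The four KPIs can be computed directly from session records. The record fields are hypothetical, and the definitions are one reasonable convention (deflection counted as sessions that never needed a human agent, CSAT as the share of respondents scoring 4 or 5); align them with how your contact centre already reports.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Session:
    handle_seconds: float
    resolved_first_contact: bool
    handed_to_human: bool
    csat: Optional[int] = None  # 1-5 survey score, if the customer answered

def kpis(sessions: List[Session]) -> dict:
    n = len(sessions)
    if n == 0:
        raise ValueError("no sessions")
    rated = [s.csat for s in sessions if s.csat is not None]
    return {
        "aht_seconds": sum(s.handle_seconds for s in sessions) / n,
        "fcr_rate": sum(s.resolved_first_contact for s in sessions) / n,
        # Deflection: share of avatar sessions that never needed a human agent.
        "deflection_rate": sum(not s.handed_to_human for s in sessions) / n,
        # CSAT: % of survey respondents scoring 4 or 5; None if nobody answered.
        "csat_pct": 100 * sum(score >= 4 for score in rated) / len(rated) if rated else None,
    }
```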

The 90-Day Rollout Roadmap

- Weeks 1-2 (Discovery): Identify a high-volume, low-complexity use case (e.g., KYC status checks). Conduct a DPDP gap analysis.

- Weeks 3-6 (POC): Build a web-embedded avatar using Tavus CVI or the HeyGen API. Integrate with a sandbox CRM.

- Weeks 7-9 (Tuning): Optimize for “Hinglish” and regional accents. Conduct “red-team” testing to ensure the avatar doesn't hallucinate or provide biased advice.

- Weeks 10-12 (Scale): Go live with a 10% traffic split. Monitor latency and CSAT.

Source: 2026 AI Business Predictions - PwC.
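For the 10% traffic split in Weeks 10-12, a deterministic hash of a stable customer identifier keeps each customer in the same arm across visits, which makes latency and CSAT comparisons cleaner than random per-request routing. A sketch, assuming a stable `customer_id` exists; the salt is a hypothetical value, and changing it reshuffles assignments.

```python
import hashlib

def in_avatar_arm(customer_id: str, percent: int = 10, salt: str = "avatar-pilot-v1") -> bool:
    """Deterministically routes roughly `percent`% of customers to the avatar arm."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{customer_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in 0..99
    return bucket < percent
```

Raising `percent` later only adds customers to the avatar arm; nobody already in it moves back, which keeps the experience consistent during scale-up.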

6. The Future of Face-to-Face AI Conversations in India

As we look toward the end of 2026, the distinction between “human” and “digital” service will continue to blur. The rise of real-time video engagement tools means that every Indian brand, from e-commerce giants in Bengaluru to traditional retail houses in Mumbai, can offer a “personal concierge” to every customer.

The key to success lies in face-to-face AI conversations that feel authentic. This requires more than just good graphics; it requires a deep understanding of the Indian consumer's linguistic diversity and cultural nuances. Whether it's an avatar speaking Marathi to a farmer in Vidarbha or a “Gen-Z” avatar helping a student in Delhi, the technology is finally ready to meet the scale of India.

Conclusion

Final Thoughts for CX Leaders

For Indian enterprises in 2026, the transition to real-time interactive AI avatars is not just a technological upgrade; it is a strategic imperative. By combining the emotional intelligence of human-like visuals with the analytical power of modern LLMs, these enterprises can finally deliver personalized service at a scale previously thought impossible.

Ready to pilot your first live AI avatar?

- Action 1: Audit your top 5 customer support intents for “avatar readiness.”

- Action 2: Review the HeyGen LiveAvatar implementation guide for technical feasibility.

- Action 3: Ensure your data governance team is briefed on how the DPDP Act, 2023 applies to voice and video (biometric) data.

The future of CX in India is vocal, visual, and—above all—interactive.

Data & Research Sources:

- PwC AI Predictions 2026: pwc.com/us/en/tech-effect/ai-analytics/ai-predictions.html

- Digital Personal Data Protection Act 2023 Summary: prsindia.org/files/bills_acts/bills_parliament/2023/Summary_Digital_Personal_Data_Protection_Bill_2023.pdf

- India 5G & AI Market Trends 2026: analyticsindiamag.com

- TrueFan AI Product Intelligence: Studio by TrueFan AI

- Tavus CVI Documentation: tavus.io

Frequently Asked Questions

How do real-time avatars handle poor internet connectivity in rural India?

Most modern platforms use adaptive bitrate streaming via WebRTC. If the connection drops below a certain threshold, the system can automatically downgrade the video quality while prioritizing the audio stream to ensure the conversation continues.
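The “protect audio first” policy can be expressed as a simple tier selection. Real WebRTC stacks implement this through their own congestion control and simulcast layers; the audio reserve and video tiers below are hypothetical placeholders that only illustrate the priority order.

```python
from typing import List, Tuple

AUDIO_RESERVE_KBPS = 48  # hypothetical voice budget, reserved before any video

# (label, required video kbps), best first; hypothetical tiers.
VIDEO_TIERS: List[Tuple[str, int]] = [("720p", 1500), ("480p", 800), ("360p", 400), ("180p", 150)]

def choose_video_tier(available_kbps: float) -> str:
    """Picks the best video tier that fits after reserving audio, else 'audio-only'."""
    for label, kbps in VIDEO_TIERS:
        if available_kbps - AUDIO_RESERVE_KBPS >= kbps:
            return label
    return "audio-only"
```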

Is it possible to create a digital twin of our own CEO for these interactions?

Yes. Platforms like Studio by TrueFan AI specialize in creating digital twins of real people (influencers, executives, or actors). This ensures that your AI avatar maintains your brand’s unique visual identity and voice.

How does the AI handle “Hinglish” (mixing Hindi and English)?

In 2026, ASR models are specifically trained on Indian “code-switching” datasets. The LLM understands the intent regardless of the language mix and can respond in the same hybrid style to make the customer feel more comfortable.

What is the cost difference between async video and real-time interactive avatars?

Real-time avatars are generally more expensive due to the “always-on” GPU rendering required for live sessions. While async video (like BHuman) is billed per video generated, real-time sessions are typically billed per minute of interaction.

Can these avatars be integrated with WhatsApp?

Absolutely. While WhatsApp doesn't support live WebRTC video inside the chat interface yet, the common pattern is to send a “Magic Link” via WhatsApp that opens a mobile-optimized web session where the live avatar resides.
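A magic link should be signed and short-lived so it cannot be forged or stay valid indefinitely. This sketch uses Python's standard `hmac` module; the secret, base URL, query parameter names, and 15-minute lifetime are hypothetical, and the secret would normally come from your key-management system.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

SECRET = b"replace-with-a-managed-secret"   # hypothetical; load from a secrets manager
BASE_URL = "https://cx.example.com/avatar"  # hypothetical mobile session page
TTL_SECONDS = 15 * 60                       # hypothetical 15-minute link lifetime

def _sign(session_id: str, expires: int) -> str:
    message = f"{session_id}:{expires}".encode("utf-8")
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()

def make_magic_link(session_id: str, now: Optional[float] = None) -> str:
    """Builds a signed, expiring link to send over WhatsApp."""
    expires = int((time.time() if now is None else now) + TTL_SECONDS)
    query = urlencode({"sid": session_id, "exp": expires, "sig": _sign(session_id, expires)})
    return f"{BASE_URL}?{query}"

def verify_magic_link(url: str, now: Optional[float] = None) -> bool:
    """Rejects links that are expired, malformed, or tampered with."""
    params = parse_qs(urlparse(url).query)
    try:
        session_id, expires, sig = params["sid"][0], int(params["exp"][0]), params["sig"][0]
    except (KeyError, ValueError):
        return False
    current = time.time() if now is None else now
    return current <= expires and hmac.compare_digest(sig, _sign(session_id, expires))
```

`hmac.compare_digest` is used instead of `==` so signature checks do not leak timing information.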

How do we ensure the AI doesn't give financial or legal advice it shouldn't?

This is handled through guardrails layered on a RAG (Retrieval-Augmented Generation) architecture: the AI is instructed to answer only from a verified knowledge base. If a user asks a question outside that scope, the avatar is programmed to say, “I'm sorry, I'm not authorized to discuss that. Would you like to speak to a human specialist?”
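The out-of-scope fallback can be implemented as a gate in front of the LLM. In this sketch, the word-overlap scorer is a deliberately crude stand-in for embedding similarity, and the 0.3 threshold and blocked-topic list are hypothetical values you would tune during red-team testing.

```python
import re
from typing import Dict, Optional, Set

REFUSAL = ("I'm sorry, I'm not authorized to discuss that. "
           "Would you like to speak to a human specialist?")
BLOCKED_TOPICS = {"invest", "lawsuit", "tax advice"}  # hypothetical advice categories
MIN_SCORE = 0.3                                       # hypothetical relevance threshold

def words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def overlap_score(question: str, snippet: str) -> float:
    """Crude relevance: share of question words that also appear in the snippet."""
    q = words(question)
    return len(q & words(snippet)) / len(q) if q else 0.0

def grounded_context(question: str, kb: Dict[str, str]) -> Optional[str]:
    """Returns the best-matching KB snippet, or None if the question is out of scope."""
    if any(topic in question.lower() for topic in BLOCKED_TOPICS):
        return None
    best = max(kb.values(), key=lambda s: overlap_score(question, s), default=None)
    if best is None or overlap_score(question, best) < MIN_SCORE:
        return None
    return best

def answer(question: str, kb: Dict[str, str]) -> str:
    context = grounded_context(question, kb)
    # A real system would pass `context` to the LLM; this stub returns it verbatim.
    return context if context is not None else REFUSAL
```

Checking the blocklist before retrieval means a regulated topic is declined even when the knowledge base happens to contain loosely related text.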