Edge AI Video Processing in India: A 2026 Enterprise Guide to Private, Latency-Free AI Video

Key Takeaways

- The DPDP Act and sub-100 ms response-time requirements make edge AI video processing a strategic necessity in India

- On-premise architectures cut cloud egress and bandwidth by 60–65% while improving privacy

- Latency-free processing at the edge enables real-time interventions for safety and operations

- Compliance-by-design supports DPDP and CERT-In with local redaction, logging, and auditability

- Studio by TrueFan AI integrates with edge stacks for secure, localized, multilingual video generation

The paradigm of enterprise video intelligence in India has shifted. As we enter 2026, reliance on cloud-only video pipelines has become a liability for Indian enterprises facing the dual pressures of the Digital Personal Data Protection (DPDP) Act and the need for sub-100 ms response times. Edge AI video processing in India has emerged not just as a technical preference but as a strategic necessity for privacy-first, latency-free operations.

Executive Summary

- Definition: Edge AI video processing involves running AI inference and generative workflows on or near the data source—such as branch servers, micro-data centers, or NPUs (Neural Processing Units)—to keep raw video data local and secure.

- The 2026 Shift: With the DPDP Act reaching full enforcement in March 2026, enterprises are moving away from centralized cloud processing toward decentralized video AI topologies to ensure data residency and minimize breach risks.

- Strategic Advantage: By adopting on-premise AI video infrastructure, organizations report a 60–65% reduction in cloud egress costs and avoid the cloud round-trip, which typically adds 300 ms or more of latency.

1. The 2026 Landscape: Why Edge AI is Dominating India’s Enterprise Sector

The Indian enterprise landscape in 2026 is defined by a “privacy-first” mandate. The era of uploading raw CCTV or sensitive operational footage to public clouds for analysis is ending. Several key drivers have accelerated the adoption of edge AI video processing in India:

The DPDP Enforcement Milestone

As of March 2026, the Digital Personal Data Protection Act is fully operational. Indian enterprises are now legally bound by strict data minimization and purpose limitation principles. Processing PII (Personally Identifiable Information) at the edge, where it can be redacted or anonymized before any data leaves the premises, is among the most practical ways to meet these obligations without crippling operational efficiency.

Hardware Sovereignty and Local Innovation

2026 has seen a surge in local hardware capabilities. The July 2025 funding of ₹107 crore for Kerala-based semiconductor startup Netrasemi signals India’s intent to lead in edge AI chipsets. Furthermore, the landmark collaboration between Qualcomm and CP PLUS has ushered in a new generation of AI-enabled video intelligence specifically designed for the Indian security sector. This partnership allows for sophisticated AI models to run directly on NVRs and edge boxes, making latency-free video processing accessible to even mid-market enterprises.

Market Statistics for 2026

- Market Growth: India’s edge AI market is projected to grow at a 32% CAGR through 2026, driven by smart city initiatives and BFSI security upgrades.

- Compliance Burden: Multinational companies operating in India report a 300% increase in data governance complexity since 2023, necessitating automated edge-based compliance tools.

- Cost Efficiency: Enterprises deploying edge computing video production report an average of 60-65% savings on upstream bandwidth and cloud storage costs.

- Breach Response: Under the 2026 DPDP rules, the 72-hour breach reporting window has made on-premise data isolation a critical fail-safe for CISOs.

Source: Qualcomm Press Note (Dec 2025); Netrasemi Funding Report (July 2025); Deloitte 2026 Compute Trends

2. Core Benefits: Privacy, Latency, and the ROI of Local Processing

The transition to edge computing video production is driven by three non-negotiable enterprise requirements: privacy, performance, and price.

Privacy-First AI Video Creation

In a cloud-centric model, every frame of video—including faces of employees, customers, and sensitive documents—is transmitted over the internet. Privacy-first AI video creation at the edge flips this script. By running detection and redaction models locally, enterprises can ensure that only “feature vectors” or anonymized metadata (e.g., “Person detected at 14:00” rather than the actual image of the person) are sent to the central dashboard. This aligns perfectly with the DPDP’s “Data Minimization” mandate.
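
As a minimal sketch of this metadata-only export, the snippet below drops the image data before anything is serialized for the central dashboard. The `Detection` layout and the `to_export_event` helper are hypothetical names introduced for illustration; a real detector will expose its own result type.

```python
import json
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Detection:
    """Output of a local detector. The field layout is hypothetical."""
    label: str           # e.g. "Person"
    confidence: float
    camera_id: str
    seen_at: datetime
    face_crop: bytes     # raw pixels; must stay on the edge node


def to_export_event(det: Detection) -> dict:
    """Keep only non-image fields for the central dashboard."""
    return {
        "event": f"{det.label} detected at {det.seen_at:%H:%M}",
        "confidence": round(det.confidence, 2),
        "camera_id": det.camera_id,
    }


det = Detection("Person", 0.934, "cam-07", datetime(2026, 1, 5, 14, 0), b"\x89raw")
payload = json.dumps(to_export_event(det))  # safe to transmit: no pixel data
```

Building the export payload from an explicit allow-list of fields, rather than deleting fields from the raw record, means a newly added sensitive field is excluded by default.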

Latency-Free Video Processing

For mission-critical applications like assembly-line QA or real-time incident triage in smart cities, every millisecond counts. Cloud processing typically introduces 300 ms to 2 seconds of latency. In contrast, latency-free video processing at the edge delivers “glass-to-glass” latency of under 50 ms. This enables immediate automated interventions, such as halting a robotic arm if a safety violation is detected.
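
To make the comparison concrete, a glass-to-glass figure can be treated as the sum of per-stage delays. The sketch below uses placeholder stage timings chosen only for illustration (they are assumptions, not measurements); substitute values profiled on your own hardware and network.

```python
def glass_to_glass_ms(stages: dict[str, float]) -> float:
    """Total latency as the sum of per-stage delays in milliseconds."""
    return sum(stages.values())


# Illustrative placeholder timings, not benchmarks.
edge_stages = {"capture": 8.0, "decode": 5.0, "inference": 20.0, "actuation": 10.0}

# The cloud path repeats the same work and adds network hops and queueing.
cloud_stages = {**edge_stages, "uplink": 120.0, "queueing": 60.0, "downlink": 120.0}

edge_total = glass_to_glass_ms(edge_stages)
cloud_total = glass_to_glass_ms(cloud_stages)
```

With these placeholder inputs the edge path sums to 43 ms and the cloud path to 343 ms; the point of the breakdown is that the network stages, not inference, dominate the cloud figure.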

Bandwidth and Egress Optimization

Streaming multiple 4K video feeds to the cloud is prohibitively expensive in terms of bandwidth. Edge AI allows for “intelligent streaming,” where the system only uploads high-resolution footage when an event of interest is triggered. Platforms like Studio by TrueFan AI enable enterprises to generate high-quality communication assets locally, reducing the need for massive data transfers during the content creation phase.
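
A rough way to size the effect of “intelligent streaming” is to compare an always-on high-resolution upload with a low-bitrate preview plus short event clips. The sketch below assumes a continuously uploaded preview stream, which the article does not specify, and every bitrate and event count is an illustrative placeholder; the result depends entirely on those inputs.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def upload_gb(bitrate_mbps: float, seconds: float) -> float:
    """Data volume in decimal gigabytes for a stream at the given bitrate."""
    megabits = bitrate_mbps * seconds
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def daily_savings(full_mbps: float, preview_mbps: float,
                  events_per_day: int, clip_seconds: float) -> float:
    """Fraction of daily upload volume avoided by event-triggered streaming."""
    always_on = upload_gb(full_mbps, SECONDS_PER_DAY)
    triggered = (upload_gb(preview_mbps, SECONDS_PER_DAY)
                 + upload_gb(full_mbps, events_per_day * clip_seconds))
    return 1 - triggered / always_on


# Placeholder inputs: 15 Mbps 4K feed, 5 Mbps preview, 40 events of 30 s each.
savings = daily_savings(full_mbps=15, preview_mbps=5, events_per_day=40, clip_seconds=30)
```

With these particular placeholders the function returns roughly 0.65 per camera; a lower preview bitrate or fewer events pushes the figure higher, and frequent events push it lower.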

3. Architecture Blueprints for On-Premise AI Video Infrastructure

Building a robust on-premise AI video infrastructure requires a multi-layered approach that balances compute power with security.

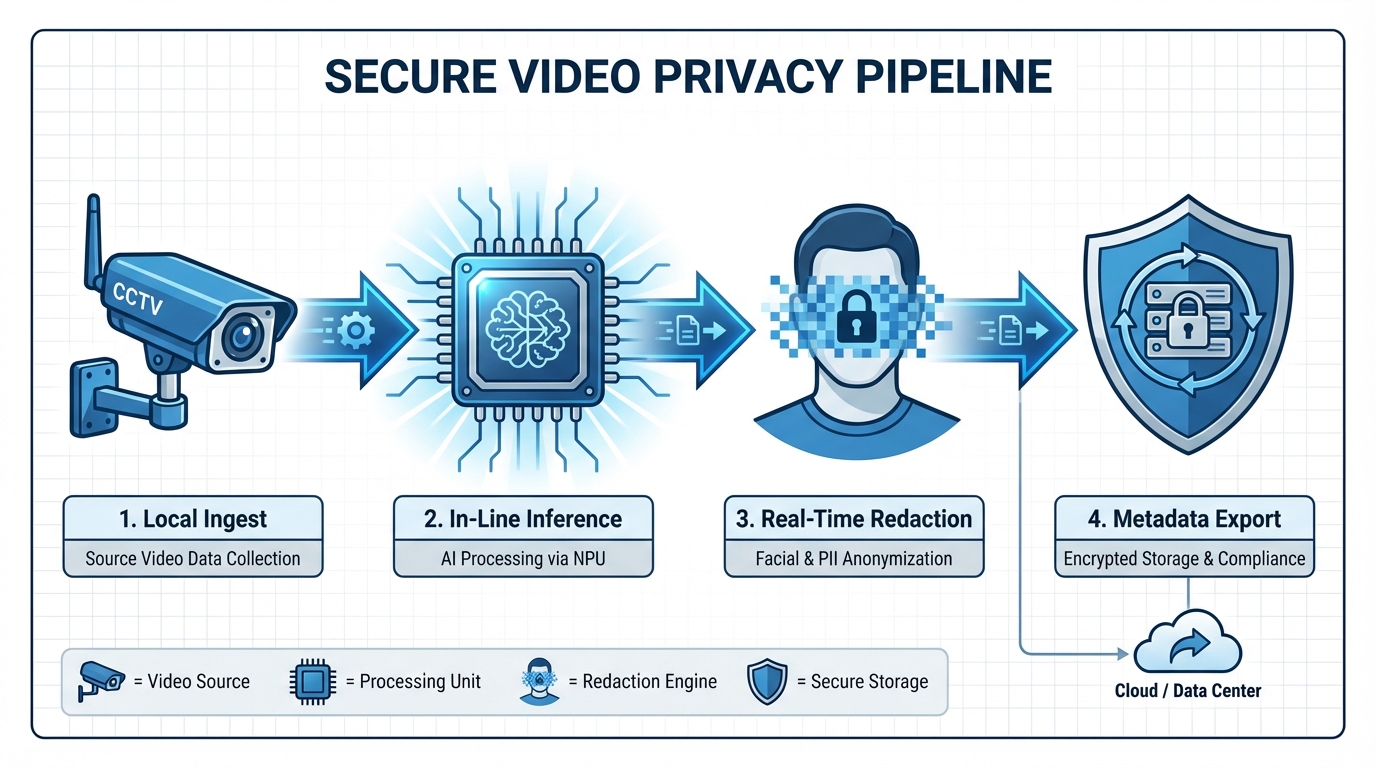

The Secure Video Privacy Pipeline

A modern edge architecture follows a strict “Ingest-Process-Redact-Export” workflow:

- Local Ingest: High-resolution streams (RTSP/ONVIF) are captured by local edge nodes (NVIDIA Jetson, Qualcomm Edge Boxes, or Intel NPUs).

- In-Line Inference: AI models perform real-time detection, classification, and tracking.

- Real-Time Redaction: Faces, license plates, and sensitive areas are blurred or masked at the source.

- Metadata Export: Only encrypted event logs and redacted clips are sent to the central VMS (Video Management System).
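
The four stages above can be sketched in a few lines of standard-library Python. This is a simplified illustration, not a production pipeline: frames are small grayscale grids instead of decoded RTSP video, the detector is passed in as a stand-in callable, and redaction is a solid mask over each detected box. All names here are hypothetical.

```python
from typing import Callable, Iterable, Iterator

Frame = list[list[int]]            # grayscale pixels, row-major
Box = tuple[int, int, int, int]    # (top, left, bottom, right), end-exclusive


def redact(frame: Frame, boxes: Iterable[Box], fill: int = 0) -> Frame:
    """Return a copy of the frame with each box masked by a solid fill."""
    out = [row[:] for row in frame]
    height = len(out)
    width = len(out[0]) if out else 0
    for top, left, bottom, right in boxes:
        for y in range(max(top, 0), min(bottom, height)):
            for x in range(max(left, 0), min(right, width)):
                out[y][x] = fill
    return out


def run_pipeline(frames: Iterable[Frame],
                 detect: Callable[[Frame], list[Box]]) -> Iterator[dict]:
    """Yield export records; raw frames never leave this function."""
    for index, frame in enumerate(frames):       # 1. local ingest
        boxes = detect(frame)                     # 2. in-line inference
        if not boxes:
            continue                              # nothing of interest to export
        clip = redact(frame, boxes)               # 3. redaction at the source
        yield {"frame_index": index,              # 4. export of redacted data only
               "regions": len(boxes),
               "clip": clip}
```

Because `redact` works on a copy, the original frame stays available locally (for example, for a governed break-glass review) while only the masked version is exported.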

Secure Video Generation Edge AI

Beyond analytics, 2026 has seen the rise of secure video generation edge AI. This involves using Trusted Execution Environments (TEEs) to run generative models locally. For instance, a bank can generate personalized customer service videos within its own private network, ensuring that customer data never touches a third-party AI server.

Offline AI Avatar Rendering

For remote sites like mines, oil rigs, or rural bank branches with intermittent connectivity, offline AI avatar rendering is a game-changer. By caching model weights and avatar assets locally, these sites can generate updated safety briefings or SOP (Standard Operating Procedure) videos in real time without an active internet connection. Studio by TrueFan AI’s support for 175+ languages and its AI avatars are particularly effective here, allowing localized, multilingual training content to be rendered on-site so that critical information is always accessible in the local dialect.

Source: UnifiedAIHub: Edge AI in 2026; TrendMinds: Top Edge AI Use Cases 2026

4. Compliance-by-Design: Navigating DPDP and CERT-In

In India, technical architecture and legal compliance are now inseparable. An edge AI video processing strategy for India must account for two primary regulatory frameworks.

The Digital Personal Data Protection (DPDP) Act, 2023

The DPDP Act imposes heavy penalties (up to ₹250 crore) for non-compliance. Edge AI facilitates compliance through:

- Consent Management: Local nodes can cross-reference video data with a local consent database before processing.

- Right to Erasure: On-premise storage allows for granular control over data deletion, ensuring that “Right to be Forgotten” requests are honored across all local backups.

- Data Fiduciary Obligations: By keeping data on-prem, enterprises maintain “exclusive control,” simplifying the audit trail required for Significant Data Fiduciaries.
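
The consent check and erasure steps listed above might look like the following sketch. It assumes each recording has already been linked to a subject identifier, which is a non-trivial step the article does not describe; `ConsentRegistry` and `erase_subject` are hypothetical names, and in-memory dictionaries stand in for a real consent database and storage backends.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Local consent store: subject ID -> purposes the subject agreed to."""
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, subject_id: str, purpose: str) -> None:
        self.grants.setdefault(subject_id, set()).add(purpose)

    def withdraw(self, subject_id: str) -> None:
        self.grants.pop(subject_id, None)

    def allows(self, subject_id: str, purpose: str) -> bool:
        """True only if consent exists for this exact purpose."""
        return purpose in self.grants.get(subject_id, set())


def erase_subject(subject_id: str, stores: list[dict[str, list[str]]]) -> int:
    """Delete a subject's clips from every store, backups included.

    Returns the number of clip references removed.
    """
    removed = 0
    for store in stores:
        removed += len(store.pop(subject_id, []))
    return removed
```

Checking consent per purpose, rather than as a single yes/no flag, mirrors the purpose-limitation principle mentioned earlier: consent for safety monitoring does not imply consent for marketing use.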

CERT-In Logging Directives

The April 2022 CERT-In directives require enterprises to maintain 180 days of logs within Indian jurisdiction. Edge AI systems must implement:

- Synchronized Time-Stamping: Using NTP/PTP to ensure all edge nodes are perfectly synced for forensic accuracy.

- Tamper-Proof Logging: Utilizing hash-chaining or local immutable ledgers so that any after-the-fact alteration of video logs is detectable.
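
Hash-chaining can be illustrated with Python's standard `hashlib` and `json` modules. In this minimal sketch each entry stores the SHA-256 hash of the previous entry, so editing or removing any earlier record breaks verification. The entry layout is an assumption made for illustration, not a format prescribed by CERT-In.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Deterministic SHA-256 over the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(chain: list[dict], payload: dict, timestamp: str) -> dict:
    """Append a log entry linked to the hash of the previous one."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {"timestamp": timestamp, "payload": payload, "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    chain.append(entry)
    return entry


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash and check each link to its predecessor."""
    prev_hash = GENESIS
    for entry in chain:
        body = {key: entry[key] for key in ("timestamp", "payload", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this makes tampering evident rather than impossible: someone with write access could rebuild the whole chain, so the latest hash is usually also anchored somewhere the edge node cannot rewrite.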

Source: PRS India: DPDP Act Summary; CERT-In Directions (April 2022)

5. Sector-Specific Deployment Playbooks

The application of decentralized video AI patterns in India varies significantly across industries.

BFSI: The “Private Branch” Model

Banks are deploying micro-data centers in regional hubs to process ATM and branch footage.

- Use Case: Real-time detection of “shoulder surfing” or suspicious loitering.

- Privacy Control: All customer faces are automatically blurred in the central monitoring feed, accessible only via a “break-glass” protocol during a confirmed security incident.
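
One way to picture the “break-glass” control is as a gate that serves the blurred feed by default and releases the unblurred one only for an authorized role with a confirmed incident reference, recording every attempt. The role name, incident ID format, and `BreakGlassGate` class below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BreakGlassGate:
    """Decides which feed a viewer receives and audits every request."""
    authorized_roles: frozenset = frozenset({"security_lead"})
    audit_log: list = field(default_factory=list)

    def select_feed(self, user: str, role: str, incident_id: Optional[str]) -> str:
        # Both conditions are required: the right role AND a confirmed incident.
        granted = role in self.authorized_roles and incident_id is not None
        self.audit_log.append({
            "user": user,
            "role": role,
            "incident_id": incident_id,
            "granted": granted,
        })
        return "unblurred" if granted else "blurred"
```

Denied requests are logged as well as granted ones, since repeated failed attempts are themselves a signal worth reviewing.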

Smart Cities: The “Ward-Level” Topology

Rather than relying on a single massive command center, Indian smart cities in 2026 are adopting a decentralized video AI approach.

- Use Case: Traffic flow optimization and flood detection at the ward level.

- Benefit: Reduces the risk of a single point of failure and prevents the city’s backbone network from being choked by thousands of raw video streams.

Manufacturing: The “Offline SOP” Playbook

Factories often face network “dead zones.” Edge computing video production allows for:

- High-FPS Defect Detection: Processing 120+ frames per second on the assembly line to catch micro-fractures.

- Localized Training: Using offline AI avatar rendering to provide instant, localized feedback to workers when they deviate from a safety protocol.
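
The 120 FPS figure translates directly into a per-frame time budget, which is a quick check on whether a given model can keep up on the line. The helper names below are hypothetical, and the per-frame processing time must come from profiling on the target hardware.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame without falling behind."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def keeps_up(fps: float, per_frame_ms: float) -> bool:
    """True if measured per-frame processing fits within the frame budget."""
    return per_frame_ms <= frame_budget_ms(fps)
```

At 120 FPS the budget is about 8.3 ms per frame, covering decode, inference, and any post-processing; a pipeline that needs 10 ms per frame would have to drop frames or run stages in parallel.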

Source: DigitalDefynd: AI in India Use-Cases; ET Edge Insights: The Great Operational Shift 2026

6. 2026 Readiness: On-Device Video Generation and Decentralization

As we look toward the latter half of 2026, two trends are redefining the “Edge.”

On-Device Video Generation 2026

The latest generation of smartphones and ruggedized field tablets now features NPUs capable of on-device video generation. This allows field agents, such as insurance adjusters or safety inspectors, to generate professional, narrated video reports directly on their devices. These reports use local AI avatars to summarize findings, ensuring that sensitive site data never leaves the mobile device’s encrypted storage.

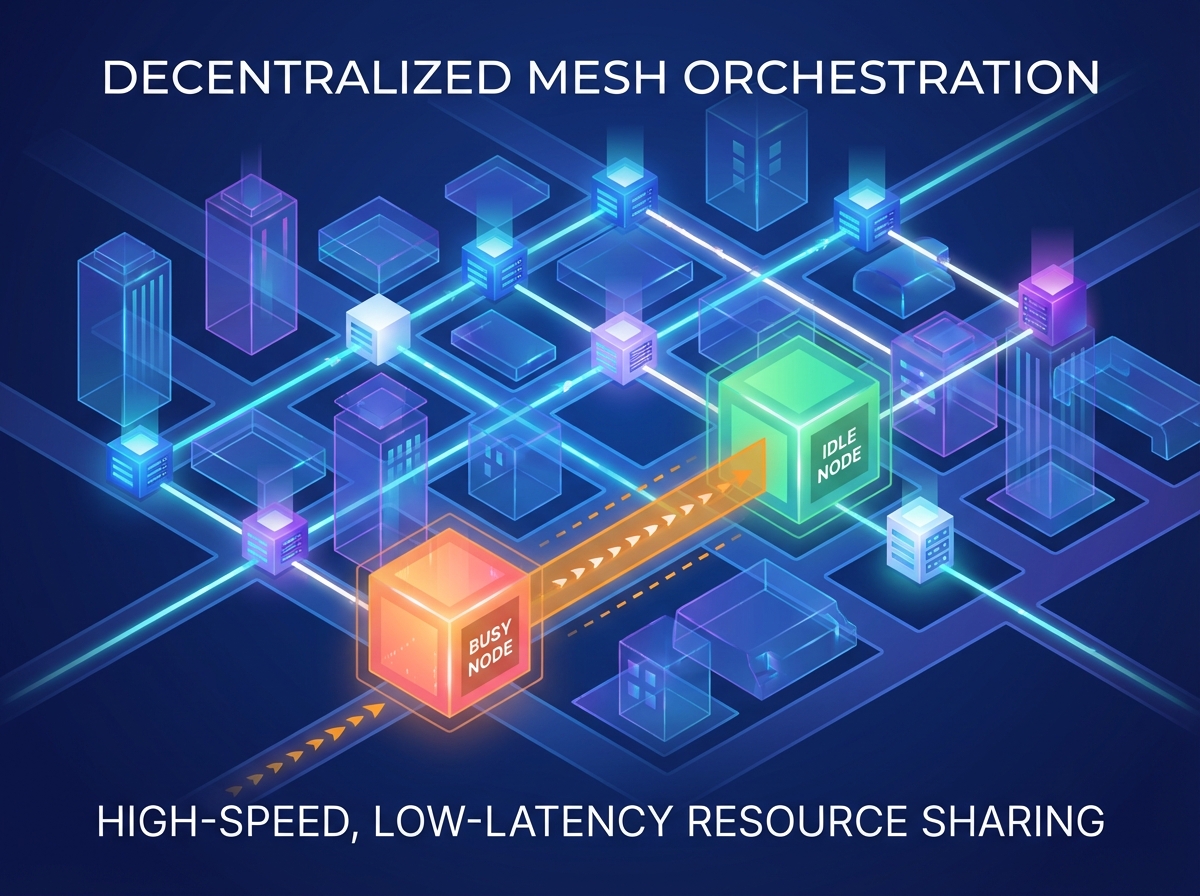

Decentralized Mesh Orchestration

The next evolution is the “Edge Mesh,” where local nodes share compute resources. If one edge server is overloaded with video processing tasks, it can offload inference to a nearby idle node within the same private network. This ensures latency-free video processing even during peak activity periods, such as during a city-wide festival or a factory shift change.
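
A very simple version of this offloading decision is sketched below: stay local while the local node has headroom, otherwise hand the task to the least-loaded peer under the same threshold. The 0.8 threshold and the utilization numbers are illustrative assumptions, and a real mesh would also weigh network distance and model availability.

```python
def choose_node(local: str, loads: dict[str, float], max_load: float = 0.8) -> str:
    """Pick the node that should run the next inference task.

    `loads` maps node name -> current utilization in the range 0.0 to 1.0.
    """
    if loads[local] < max_load:
        return local  # local headroom avoids an extra network hop
    candidates = {name: load for name, load in loads.items()
                  if name != local and load < max_load}
    if not candidates:
        return local  # every peer is busy too; queue locally
    return min(candidates, key=candidates.get)
```

For example, `choose_node("edge-a", {"edge-a": 0.95, "edge-b": 0.40, "edge-c": 0.20})` selects `"edge-c"`, the idlest peer in the private network.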

7. Enterprise Governance with Studio by TrueFan AI

For enterprises, the challenge isn’t just generating video; it’s doing so within a framework of absolute security and brand safety. Solutions like Studio by TrueFan AI demonstrate ROI through their ability to bridge the gap between high-end generative AI and strict enterprise governance.

Compliance and Safety Standards

Studio by TrueFan AI is built for the regulated enterprise. With ISO 27001 and SOC 2 certifications, the platform ensures that the “walled garden” approach to AI video generation is maintained. This includes:

- Content Moderation: Real-time filters that block political endorsements, hate speech, and explicit content before a single frame is rendered.

- Traceability: Every video generated is watermarked and logged, providing a clear audit trail for compliance officers.

Integration with Edge Workflows

While the core engine is cloud-agnostic, the platform’s robust APIs allow it to integrate seamlessly with on-premise AI video infrastructure. For example, a retail chain can use edge analytics to identify a “low stock” situation and automatically trigger a request to the Studio by TrueFan AI API to generate a localized promotional video for that specific branch, which is then rendered and displayed on in-store screens.
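
The edge-side half of that flow can be sketched without assuming anything about the vendor's API. In the snippet below, `submit` is a stand-in callable for whatever client actually sends the generation request; the request fields and the one-hour cooldown are hypothetical and are not taken from Studio by TrueFan AI documentation.

```python
import time
from typing import Callable


class LowStockTrigger:
    """Turns edge shelf-analytics events into rate-limited video requests."""

    def __init__(self, submit: Callable[[dict], None],
                 cooldown_s: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._submit = submit          # stand-in for the real API client
        self._cooldown_s = cooldown_s
        self._clock = clock
        self._last_sent: dict[tuple[str, str], float] = {}

    def on_shelf_event(self, branch_id: str, sku: str, units_visible: int,
                       threshold: int, language: str) -> bool:
        """Return True if a generation request was sent for this event."""
        if units_visible >= threshold:
            return False               # stock is fine; nothing to do
        key = (branch_id, sku)
        now = self._clock()
        last = self._last_sent.get(key)
        if last is not None and now - last < self._cooldown_s:
            return False               # already requested recently
        # Hypothetical request shape; only non-personal data is included.
        self._submit({"branch_id": branch_id, "sku": sku, "language": language})
        self._last_sent[key] = now
        return True
```

The cooldown matters because edge analytics tend to fire on every frame in which the condition holds; without it, a single empty shelf could generate hundreds of identical requests.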

Localization at Scale

Privacy-compliant video generation requires more than just security; it requires relevance. With support for over 175 languages and a library of pre-licensed, photorealistic avatars (including real influencers like Gunika and Aryan), enterprises can scale their communication without the risks associated with unauthorized deepfakes. Platforms like Studio by TrueFan AI enable this level of scale while ensuring that all “digital twins” are used with full consent and legal backing.

Action Plan: Implementing Your Edge AI Strategy

To transition to a privacy-first AI video creation model, follow this 6-week enterprise pilot:

- Week 1 (Audit): Conduct a site survey to identify latency bottlenecks and perform a Data Protection Impact Assessment (DPIA) for your video workflows.

- Weeks 2-3 (Infrastructure): Deploy edge nodes (K8s-managed) and set up your local AI video processing tools. Integrate your VMS with local redaction pipelines.

- Week 4 (Integration): Connect your edge stack with Studio by TrueFan AI via APIs to enable governed, localized content generation.

- Week 5 (Testing): Run “disconnected mode” drills to ensure offline AI avatar rendering and local failovers work as expected.

- Week 6 (Review): Evaluate KPIs—specifically looking for a >90% reduction in glass-to-glass latency and a >60% reduction in cloud bandwidth costs.
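
The Week 6 review reduces to two percentage calculations against the targets above. The sketch below assumes you have before and after measurements from the pilot; the function names are hypothetical.

```python
def reduction_pct(before: float, after: float) -> float:
    """Percentage reduction from a baseline measurement."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before * 100


def pilot_passes(latency_before_ms: float, latency_after_ms: float,
                 bandwidth_before: float, bandwidth_after: float) -> bool:
    """Check both pilot KPIs: >90% latency cut and >60% bandwidth cut."""
    return (reduction_pct(latency_before_ms, latency_after_ms) > 90
            and reduction_pct(bandwidth_before, bandwidth_after) > 60)
```

Note how demanding the latency target is: a drop from 450 ms to 40 ms clears it (about 91%), while a drop from 300 ms to 45 ms does not (85%), so the baseline you measure in Week 1 largely determines whether the KPI is reachable.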

By moving intelligence to the edge, Indian enterprises can finally achieve the holy grail of video operations: total privacy, zero latency, and radical cost efficiency. The tools are ready; the regulations are clear. The only question is how fast your organization can adapt.

Conclusion

Edge AI video processing is reshaping India’s enterprise landscape in 2026. By keeping sensitive data on-premise, achieving sub-50 ms responses, and aligning with DPDP and CERT-In, organizations unlock secure, real-time video intelligence at scale. With robust architectures, sector-specific playbooks, and platforms like Studio by TrueFan AI, enterprises can confidently modernize their video operations while reducing costs and strengthening compliance.

Frequently Asked Questions

How does edge AI help with DPDP compliance specifically?

Edge AI enables “Data Minimization.” By processing video locally, you can redact PII (faces, plates) before data is stored or transmitted. This ensures you only collect the data necessary for the specific “purpose” (e.g., security), as mandated by the Act.

What is the difference between Edge AI and On-Premise AI?

While often used interchangeably, “Edge” refers to processing at the extreme periphery (cameras, sensors), while “On-Premise” can include local servers or micro-data centers. A robust on-premise AI video infrastructure usually incorporates both.

Can I use AI avatars for offline training in remote locations?

Yes, solutions like Studio by TrueFan AI can be integrated into edge environments to allow for offline AI avatar rendering. By caching the necessary model components on a local server, you can generate and play back high-quality, lip-synced training videos even without an internet connection.

What are the hardware requirements for latency-free video processing?

In 2026, the standard is moving toward NPUs (Neural Processing Units) and specialized AI accelerators like NVIDIA Jetson Orin or Qualcomm’s latest edge platforms. These provide the TOPS (Tera Operations Per Second) required for real-time inference.

How does decentralized video AI reduce costs for Indian enterprises?

By processing data locally, you eliminate the “Cloud Tax”—the high cost of bandwidth for uploading 24/7 video and the egress fees for retrieving it. Enterprises typically see a 60%+ reduction in these operational expenses.

Is on-device video generation ready for mainstream use in 2026?

Absolutely. With the integration of high-performance NPUs in enterprise-grade mobile devices, generating short, narrated AI videos for field reports or internal comms is now a sub-60-second process that happens entirely on the device.