AI Video Content Moderation Tools in 2026: Enterprise Brand Safety, Compliance Automation, and Reputation Protection in India

Estimated reading time: 8 minutes

Key Takeaways

- Modern tools utilize multimodal detection (audio + text-on-screen + visuals) to enforce policy with automated audit trails.

- Compliance with India's DPDP Act, 2023 (and its 2025 Rules) and ASCI influencer rules is mandatory to avoid heavy financial and reputational penalties.

- Enterprises are seeing a 40% reduction in incident response time and a 60% decrease in manual QA costs through automation.

- API-first integration allows for pre-flight and in-flight scanning, ensuring 100% brand suitability across thousands of video variants.

AI video content moderation tools are now table stakes for brand safety. In 2026, enterprise legal, compliance, and brand teams need API-first guardrails to filter controversial content, enforce brand guidelines, and ensure consistent, compliant video at scale, especially under India's DPDP Act and ASCI influencer rules. With the global content moderation services market valued at more than $12.48 billion in 2025, the shift from manual review to automated, multimodal AI detection is no longer optional; it is a survival mechanism for the digital-first enterprise.

Section 1: Why Brand Safety in AI Video Matters in 2026 (India Context)

In the current landscape, the distinction between brand safety and brand suitability has become the frontline of digital strategy. While brand safety focuses on avoiding "unsafe" categories like illegal acts or explicit content, brand suitability is a nuanced alignment with a brand’s specific tolerance levels across regional sensitivities, political contexts, and news cycles.

In India, the stakes have never been higher. According to the ASCI Half-Yearly Complaints Report (2025–26), digital media accounted for a staggering 97% of all ad violations. Furthermore, 76% of top influencers were found to be in breach of disclosure norms. This "risk spillover" means that even if your brand is safe, the creators or AI-generated content you deploy might not be.

The regulatory environment is also tightening:

- DPDP Act (2023) & Rules (2025): These emphasize auditable consent for any personal data—including faces and voices—used in video campaigns.

- ASCI Influencer Guidelines (2025): Mandatory superimposed disclosures on video are now required, moving beyond simple caption hashtags.

- The Rise of CTV: Connected TV in India is projected to reach ₹2,000 Cr in ad spend, offering a more curated, "safer" environment that combines TV-like controls with digital precision.

Source: MeitY DPDP Act PDF

Source: ASCI Influencer Guidelines 2025

Source: exchange4media on CTV Brand Safety

Section 2: Enterprise Video Compliance Automation: End-to-End Architecture

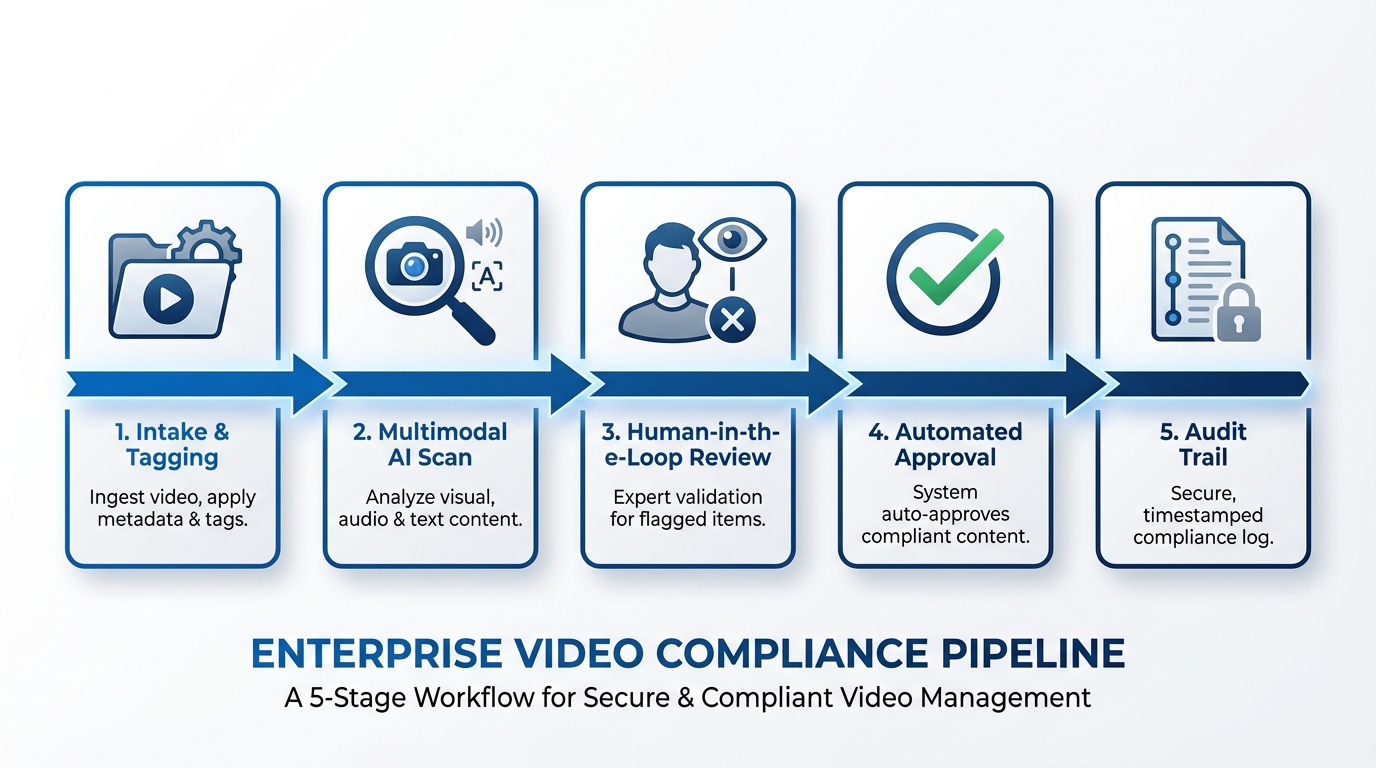

Enterprise video compliance automation is a policy-driven pipeline that programmatically scans creative assets before they ever reach a consumer's screen. For a Tier-1 enterprise, this architecture typically follows a five-stage flow:

- Intake & Metadata Tagging: Every asset is ingested with context—target geography (e.g., Maharashtra vs. Tamil Nadu), product category (e.g., FinTech vs. FMCG), and platform (e.g., Instagram vs. CTV).

- Multimodal AI Content Filtering: The system runs simultaneous scans on audio (speech-to-text), visual (object/scene detection), and OCR (text-on-screen).

- Human-in-the-Loop (HITL): Any asset with a "confidence score" below 85%, or any asset flagged for "nuanced cultural risk," is routed to a human reviewer queue.

- Automated Approval & Watermarking: Compliant videos receive a digital signature or watermark, ensuring only "safe" versions are pushed to ad servers.

- Audit Trail Generation: A time-stamped record of who approved what, based on which policy version, is stored for regulatory readiness.

This systematic approach ensures that video content policy enforcement is not just reactive but built into the creative lifecycle itself.
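
The routing rule in the Human-in-the-Loop stage can be sketched in a few lines. This is a minimal illustration, not a vendor implementation: the 85% threshold comes from the flow above, while the `ScanResult` fields and the `route` function are hypothetical names chosen for the example.

```python
from dataclasses import dataclass

# Threshold taken from the Human-in-the-Loop stage described above (85%).
HITL_CONFIDENCE_THRESHOLD = 0.85


@dataclass
class ScanResult:
    """Hypothetical summary of one multimodal scan."""
    asset_id: str
    confidence: float         # model confidence in its verdict, 0.0 to 1.0
    violation_found: bool     # True if any modality reported a policy violation
    cultural_risk_flag: bool  # True if flagged for nuanced cultural risk


def route(result: ScanResult) -> str:
    """Return 'human_review', 'rejected', or 'approved' for one scanned asset."""
    # Low confidence or a cultural-risk flag always goes to a person first.
    if result.confidence < HITL_CONFIDENCE_THRESHOLD or result.cultural_risk_flag:
        return "human_review"
    if result.violation_found:
        return "rejected"
    return "approved"
```

Checking the human-review condition first means a confident but culturally sensitive asset still reaches a reviewer rather than being auto-approved.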

Section 3: Brand Guideline Enforcement AI and Brand Consistency

Maintaining brand consistency in AI videos across 10+ Indian languages and thousands of personalized variants is impossible for manual teams. This is where brand guideline enforcement AI steps in to act as a digital brand custodian.

Key guardrails implemented by these systems include:

- Visual Integrity: Computer vision ensures logo placement, size, and color hex codes adhere to the brand book. It also detects unauthorized third-party IP or background clutter that might dilute the brand message.

- Audio & Lexicon Rules: Automated Speech Recognition (ASR) flags prohibited claims (e.g., "guaranteed returns" in finance) or off-brand tone.

- Regulatory Overlays: The AI automatically checks if the required legal disclaimers are present, legible, and on-screen for the mandatory duration as per Indian law.

- Drift Detection: The system flags if a localized variant in Kannada has drifted in meaning or tone from the original English master creative.
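
As a rough illustration of the Audio & Lexicon guardrail, the sketch below checks an ASR transcript against a prohibited-phrase list. Only "guaranteed returns" comes from the example above; the other phrases and the function name are assumptions, and a production system would need per-language lexicons rather than simple English matching.

```python
import re

# Hypothetical lexicon. "guaranteed returns" is the example used above;
# the remaining entries are placeholders for illustration.
PROHIBITED_PHRASES = ["guaranteed returns", "risk-free", "100% cure"]


def find_prohibited_claims(transcript: str, phrases=PROHIBITED_PHRASES) -> list[str]:
    """Return the prohibited phrases found in a transcript (case-insensitive)."""
    hits = []
    for phrase in phrases:
        # Lookarounds stop matches inside longer words without relying on \b,
        # which misbehaves next to characters such as '%'.
        pattern = r"(?<!\w)" + re.escape(phrase) + r"(?!\w)"
        if re.search(pattern, transcript, flags=re.IGNORECASE):
            hits.append(phrase)
    return hits
```

For example, `find_prohibited_claims("Invest now for Guaranteed Returns!")` flags the phrase, while "Returns are not guaranteed." passes because the word order differs.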

Section 4: Video Moderation API Enterprise: The Integration Blueprint

To achieve speed, enterprises must move away from manual uploads to an enterprise video moderation API model. This allows for low-latency, programmatic scanning at two critical points:

- Pre-flight (CI/CD Gating): Before a video is finalized in the production tool, the API scans the render. If a violation is found, the render is blocked, saving compute costs and preventing "bad" content from entering the DAM (Digital Asset Management) system.

- In-flight (Real-time): For user-generated content (UGC) or live-streamed events, the API provides real-time webhooks that can trigger immediate takedowns or blur filters if controversial content is detected.
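
The pre-flight half of this pattern might look like the gate below: a function that turns scan results into a process exit code so a CI/CD step can block the render. It is a sketch under assumptions. `preflight_gate` and `fake_scan` are hypothetical names, and the stand-in scanner replaces what would be a real call to the moderation API.

```python
from typing import Callable


def preflight_gate(render_path: str, scan: Callable[[str], list[str]]) -> int:
    """Return an exit code: 0 lets the pipeline continue, 1 blocks the render."""
    violations = scan(render_path)
    for violation in violations:
        print(f"BLOCKED {render_path}: {violation}")
    return 1 if violations else 0


def fake_scan(path: str) -> list[str]:
    """Stand-in scanner for illustration; a real one would call the moderation API."""
    return ["missing disclosure overlay"] if "draft" in path else []
```

In a pipeline, the step would end with something like `sys.exit(preflight_gate(path, real_scan))`, so a non-zero code stops the asset before it reaches the DAM.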

Non-functional Requirements for 2026:

- Latency: P95 response times must be <1 second for metadata checks and <5 seconds for full multimodal scans.

- Observability: Every API call must generate an evidence snapshot—a frame-by-frame breakdown of why a piece of content was flagged.
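
To make the latency requirement testable, a team could compute P95 from recorded response times and compare it with the budgets above. The sketch uses the nearest-rank percentile definition, which is one of several common conventions; the function names are illustrative.

```python
def p95(latencies_s: list[float]) -> float:
    """Nearest-rank 95th percentile of a non-empty list of latencies in seconds."""
    ordered = sorted(latencies_s)
    # Integer ceiling of 0.95 * n, giving the 1-based nearest rank.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def meets_budget(latencies_s: list[float], budget_s: float) -> bool:
    """True if P95 latency is strictly below the budget (e.g. 1 s or 5 s)."""
    return p95(latencies_s) < budget_s
```

With 19 calls at 0.2 s and one outlier at 9 s, P95 is still 0.2 s, which shows why a P95 target tolerates rare slow calls but not a systematically slow service.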

Section 5: Controversial Content Detection Video: Multimodal Methods

Detecting "risk" in 2026 requires more than just keyword filtering. Video platforms for controversial content detection now use a unified multimodal stack:

- Audio Analysis: Beyond ASR, AI now detects "sentiment" and "toxicity" in tone. It can identify coded language or slang used to bypass traditional filters.

- OCR & Text-on-Screen: This is critical for detecting ASCI-mandated disclosures like "#ad" or "#partnership." If the text is too small or lacks contrast against the background, the AI flags it as a compliance risk.

- Visual Scene Classification: AI identifies "unsafe gestures," regional political symbols, or culturally sensitive imagery (e.g., improper use of national symbols) that could trigger a backlash in specific Indian states.

- Deepfake & Synthetic Integrity: For AI-generated avatars, the system verifies the presence of a "synthetic content" disclosure and checks for "traceability watermarks" to prevent unauthorized tampering.
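
The "lacks contrast" check for on-screen disclosures can be approximated with the WCAG contrast-ratio formula. This is an assumption for illustration: ASCI does not prescribe this formula as far as the text above states, and the 4.5:1 threshold is borrowed from WCAG's AA level for normal text, not from any Indian regulation.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(text_rgb, background_rgb) -> float:
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    l1, l2 = relative_luminance(text_rgb), relative_luminance(background_rgb)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


# Assumed threshold, borrowed from WCAG AA for normal-size text.
MIN_CONTRAST = 4.5


def disclosure_legible(text_rgb, background_rgb, min_ratio=MIN_CONTRAST) -> bool:
    return contrast_ratio(text_rgb, background_rgb) >= min_ratio
```

In practice the text and background colours would be sampled from the OCR bounding box on several frames, since video backgrounds change over time.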

Section 6: Automated Brand Safety Checks Across the Campaign Lifecycle

Automated brand safety checks are no longer a "final step" before launch; they are a continuous loop:

- Pre-Bid: Integration with DSPs (Demand Side Platforms) to ensure the placement environment matches the video's suitability tier.

- Pre-Publish: The final "Compliance Gate" where the video content policy enforcement engine gives the green light.

- Post-Publish Monitoring: The AI monitors social sentiment and "watchdog" alerts. If a video starts receiving negative traction due to a cultural misunderstanding, the system can trigger an automated "pause" on all active ad sets.

Section 7: Content Policy Enforcement for India: DPDP + ASCI Alignment

The Indian regulatory landscape is unique due to the intersection of the DPDP Act 2023 and ASCI guidelines.

DPDP Compliance Checklist for Video:

- Lawful Basis: Do you have auditable consent for the "digital twin" or actor used in the video?

- Data Minimization: Are you processing only the biometric or personal data necessary for the render?

- Right to Erasure: If an influencer withdraws consent, can your system programmatically identify and "takedown" all videos featuring their likeness across all platforms?
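
The Right to Erasure item implies an index from each person to every video that uses their likeness. A minimal in-memory sketch follows; `ConsentRegistry` and its fields are hypothetical, and a real system would back this with a database and push takedowns to each platform.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Hypothetical registry linking consent status to video appearances."""
    # person_id -> True while consent is active
    consent: dict[str, bool] = field(default_factory=dict)
    # video_id -> set of person_ids whose face or voice appears in the video
    appearances: dict[str, set[str]] = field(default_factory=dict)

    def withdraw(self, person_id: str) -> list[str]:
        """Record the withdrawal and return the video IDs that must come down."""
        self.consent[person_id] = False
        return sorted(
            video_id
            for video_id, people in self.appearances.items()
            if person_id in people
        )
```

The returned list is what a takedown job would iterate over; keeping it sorted makes the audit log reproducible.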

ASCI Compliance Checklist:

- Disclosure Visibility: Is the disclosure superimposed on the video for at least 3 seconds or 1/4th of the video duration?

- Due Diligence: For health or finance claims, does the system have a linked "evidence file" to substantiate the claim if challenged?
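
The Disclosure Visibility item can be turned into a mechanical check. The sketch below reads the rule stated above as "whichever is longer: 3 seconds or one quarter of the video," which is an interpretation; the minimum and the fraction are parameters so they can be aligned with the current ASCI text. All function names are illustrative.

```python
def required_disclosure_seconds(video_s: float, min_s: float = 3.0,
                                fraction: float = 0.25) -> float:
    """Required on-screen time, capped at the length of the video itself."""
    return min(video_s, max(min_s, video_s * fraction))


def visible_seconds(intervals: list[tuple[float, float]]) -> float:
    """Total time covered by (start, end) intervals, counting overlaps once."""
    total = 0.0
    covered_until = float("-inf")
    for start, end in sorted(intervals):
        start = max(start, covered_until)
        if end > start:
            total += end - start
            covered_until = end
    return total


def disclosure_ok(video_s: float, intervals: list[tuple[float, float]]) -> bool:
    return visible_seconds(intervals) >= required_disclosure_seconds(video_s)
```

The intervals would come from the OCR stage, one per continuous stretch in which the disclosure text was detected.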

Source: MeitY DPDP Rules 2025

Source: Storyboard18 on ASCI Violations

Section 8: AI Video Quality Assurance India: Localization and QA

AI video quality assurance in India requires a deep understanding of the "Many Indias." A video that is perfectly acceptable in urban Mumbai might be culturally insensitive in rural Uttar Pradesh.

The QA Checklist for 2026:

- Multilingual Fidelity: Ensuring that the lip-sync and tone in a Marathi translation don't change the intent of the original message.

- Cultural Sensitivity Triggers: Scanning for regional triggers—religious symbols, local political colors, or specific gestures.

- Safe Areas for Disclosures: Ensuring that platform UI elements (like the "Like" button on Reels) don't obscure the ASCI-mandated disclosures.
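
The safe-area check reduces to rectangle overlap. The sketch below uses normalized coordinates (0 to 1, origin at top-left); the UI zones are made-up placeholders, since actual button positions vary by platform and app version.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x: float  # left edge, 0.0 to 1.0
    y: float  # top edge, 0.0 to 1.0
    w: float  # width
    h: float  # height


def overlaps(a: Rect, b: Rect) -> bool:
    """True if two axis-aligned rectangles share any area."""
    return (a.x < b.x + b.w and b.x < a.x + a.w and
            a.y < b.y + b.h and b.y < a.y + a.h)


# Hypothetical UI zones for a vertical short-video layout:
# a right-hand action rail and a bottom caption bar.
UI_ZONES = [Rect(0.85, 0.45, 0.15, 0.40), Rect(0.0, 0.85, 1.0, 0.15)]


def disclosure_in_safe_area(disclosure: Rect, ui_zones=UI_ZONES) -> bool:
    return not any(overlaps(disclosure, zone) for zone in ui_zones)
```

A disclosure placed near the top-left corner passes, while one that drifts into the right-hand rail is flagged before publishing.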

Section 9: Reputation Management Video AI India: Detection-to-Response

When a brand safety incident occurs, the AI-driven video reputation management playbook for India must be executed in minutes.

- Detection: AI monitors for "spikes" in negative sentiment or mentions of the brand alongside "violation" keywords.

- Triage: The system scores the severity. A "misplaced logo" is a Level 1 (Internal fix), while a "DPDP consent breach" is a Level 5 (Legal/PR escalation).

- Response: Automated triggers can pause campaigns, while the AI generates replacement creative that is pre-vetted for compliance to fill the ad slot.
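
The triage step can be expressed as a small lookup. Levels 1 and 5 come from the examples above; the intermediate level, the pause threshold, and the owner names are assumptions added only to make the sketch complete.

```python
# Levels 1 and 5 are the article's examples; "missing_disclosure" is hypothetical.
SEVERITY_LEVELS = {
    "misplaced_logo": 1,
    "missing_disclosure": 3,
    "dpdp_consent_breach": 5,
}

# Assumed policy: pause live campaigns at level 3 and above.
PAUSE_AT_LEVEL = 3


def triage(incident_type: str) -> dict:
    """Map an incident type to a severity level, an owner, and a pause decision."""
    # Unknown incident types default to the highest level so they are
    # never under-handled.
    level = SEVERITY_LEVELS.get(incident_type, 5)
    if level >= 5:
        owner = "legal_pr"
    elif level >= PAUSE_AT_LEVEL:
        owner = "compliance"
    else:
        owner = "internal"
    return {"level": level, "owner": owner, "pause_campaign": level >= PAUSE_AT_LEVEL}
```

Defaulting unknown types to Level 5 is a deliberately conservative choice; a team that sees too many false escalations could lower the default once its incident taxonomy matures.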

Section 10: Choosing Brand Safety AI Video Platforms 2025/2026

When evaluating brand safety AI video platforms for 2025/2026, enterprise leaders should look for these five "Must-Haves":

- Multimodal Depth: Does the platform scan audio, visual, and OCR simultaneously?

- India-Specific Policy Packs: Does it have pre-built templates for ASCI and DPDP compliance?

- Explainable AI (XAI): Does the platform tell you why a video was rejected, or is it a "black box"?

- Scale & Latency: Can it handle 10,000+ variants per hour with <5 second latency?

- Data Sovereignty: Does it offer India-based data residency to comply with DPDP localization requirements?

Section 11: Capability Mapping: How TrueFan AI Supports Enterprise Brand Safety

For enterprises looking to balance rapid content creation with rigorous safety, the TrueFan AI ecosystem provides a "walled garden" of compliance.

Platforms like Studio by Truefan AI enable marketers to generate high-quality video content without the risks associated with unauthorized deepfakes or unvetted AI models. By using a "consent-first" model, the platform ensures that every avatar used—whether it’s a digital twin of a real influencer or a custom brand spokesperson—is fully licensed and compliant with global and Indian standards.

Studio by Truefan AI's 175+ language support and AI avatars are backed by a robust moderation engine that performs real-time filtering of profanity, political endorsements, and explicit content. This ensures that brand guideline enforcement is active from the moment a script is typed into the browser.

Solutions like Studio by Truefan AI demonstrate ROI through their 100% content compliance record and the ability to generate "safe-by-design" variants in seconds. By integrating enterprise video moderation API capabilities and webhooks, TrueFan AI allows teams to automate AI content filtering for video campaigns across WhatsApp, social media, and e-commerce platforms.

Key Safety Features of TrueFan AI:

- ISO 27001 & SOC 2 Certified: Enterprise-grade security for data handling.

- Watermarked Outputs: Ensuring every AI-generated asset is traceable.

- ASCI-Ready: Tools to easily add disclosure overlays and localized disclaimers.

- DPDP Alignment: Consent-driven avatar library with strict data retention policies.

Section 12: Implementation Blueprint (90-Day Rollout)

Implementing enterprise video compliance automation requires a structured approach:

- Day 0–15 (Requirements): Define your "Brand Floor" (what you will never show) and "Suitability Tiers." Map out ASCI and DPDP requirements for your specific sector (e.g., Finance vs. Gaming).

- Day 16–45 (Integration): Connect your video generation tools (like TrueFan AI) to your moderation API. Set up webhooks for automated escalations.

- Day 46–75 (Calibration): Run a pilot campaign. Tune the AI's confidence thresholds to minimize false positives while ensuring no "unsafe" content slips through.

- Day 76–90 (Scaling): Roll out to all regional teams. Finalize executive dashboards for real-time compliance monitoring.
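
The calibration step can be illustrated with labeled pilot data. The sketch assumes the model emits a risk score (higher means riskier) and that reviewers have labeled each pilot asset; it picks the highest flagging threshold that still catches every unsafe asset, then reports how many safe assets would be flagged at that setting. Names and data shape are assumptions.

```python
def calibrate(samples: list[tuple[float, bool]]) -> tuple[float, int]:
    """Pick a flagging threshold from pilot data.

    samples: (risk_score, is_unsafe) pairs labeled by human reviewers.
    Returns (threshold, false_positives), where assets scoring at or above
    the threshold are flagged and no unsafe asset in the pilot is missed.
    """
    unsafe_scores = [score for score, is_unsafe in samples if is_unsafe]
    if not unsafe_scores:
        raise ValueError("pilot data contains no unsafe examples to calibrate on")
    # The lowest-scoring unsafe asset sets the highest threshold with zero misses.
    threshold = min(unsafe_scores)
    false_positives = sum(
        1 for score, is_unsafe in samples if not is_unsafe and score >= threshold
    )
    return threshold, false_positives
```

A threshold fitted this way is only as good as the pilot sample, so it is worth re-running the calibration whenever new incident types appear.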

Section 13: KPIs and Governance Scorecard

To measure the success of your video content policy enforcement strategy, track these metrics:

| KPI | Target (2026) | Why it Matters |
|---|---|---|
| Unsafe Rate | <0.01% | Measures the effectiveness of the "Compliance Gate." |
| Disclosure Compliance | 100% | Essential for avoiding ASCI penalties and "Shadowbans." |
| Time-to-Approve | <10 Minutes | Measures the efficiency of the automated brand safety checks. |
| Incident MTTR | <30 Minutes | How fast can you "kill" a live campaign if a risk is detected? |
| Consent Auditability | 100% | Critical for DPDP Act compliance and legal defense. |
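
A scorecard like this is easy to automate once the raw counts are available. The sketch below encodes the targets from the table and evaluates a set of measured values; the metric keys and function names are hypothetical.

```python
# Targets copied from the KPI table: (comparison, target value).
TARGETS = {
    "unsafe_rate_pct": ("<", 0.01),
    "disclosure_compliance_pct": (">=", 100.0),
    "time_to_approve_min": ("<", 10.0),
    "incident_mttr_min": ("<", 30.0),
    "consent_auditability_pct": (">=", 100.0),
}


def unsafe_rate_pct(unsafe_published: int, total_published: int) -> float:
    """Share of published videos later found unsafe, as a percentage."""
    return 100.0 * unsafe_published / total_published


def scorecard(metrics: dict[str, float]) -> dict[str, bool]:
    """Return True per KPI when the measured value meets its target."""
    results = {}
    for name, (op, target) in TARGETS.items():
        value = metrics[name]
        results[name] = value < target if op == "<" else value >= target
    return results
```

For instance, one unsafe video in 20,000 published gives an unsafe rate of 0.005%, which is inside the target, while two in 20,000 sits exactly at 0.01% and fails the strict "less than" test.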

Section 14: Frequently Asked Questions

What are AI video content moderation tools for enterprises?

These are API-first software systems that use multimodal AI (scanning audio, visuals, and text) to automatically detect policy violations, enforce brand guidelines, and ensure legal compliance in video content at scale.

How do brand safety AI video platforms in 2025/2026 differ from older versions?

Modern platforms offer deeper multimodal coverage, specific "Policy Packs" for regional laws like India's DPDP Act, and lower latency for real-time integration into creative workflows. They move beyond simple "keyword blocking" to "contextual suitability."

How to implement a video moderation API in enterprise stacks?

Integration typically involves "Pre-flight" checks in the CI/CD pipeline and "In-flight" monitoring via webhooks. This ensures that content is scanned both before it is saved to a DAM and while it is live on social platforms.

What does the DPDP Act mean for AI video in India?

The DPDP Act requires brands to have a "lawful basis" and auditable consent for any personal data (faces/voices) used in videos. It also mandates strict data minimization and gives users the "right to erasure," meaning brands must be able to delete specific AI-generated content upon request.

How should influencer/AI avatar videos comply with ASCI?

Videos must have superimposed disclosures (like "#ad") that are clearly visible and stay on screen for a significant duration. Studio by Truefan AI helps automate this by allowing creators to overlay these disclosures directly during the generation process, ensuring 100% compliance.

Section 15: Compliance and Quality Checklist (Pre-Publish)

Before any AI video goes live in 2026, ensure it passes this brand consistency checklist for AI videos:

- [ ] Multimodal Scan: Have the audio, visuals, and on-screen text (OCR) been checked for controversial content?

- [ ] ASCI Disclosure: Is the "#ad" or "#partnership" label superimposed and legible?

- [ ] DPDP Consent: Is there a linked record of consent for the avatar or voice used?

- [ ] Regional Sensitivity: Has the video been scanned for local political or religious triggers in the target Indian state?

- [ ] Watermarking: Does the video contain a traceability watermark for synthetic content?

- [ ] Language QA: Are the translations across all target Indian languages accurate and culturally appropriate?

Section 16: Visualizing the Pipeline

The Enterprise Video Compliance Automation Flow

- Creative Input: Script + Avatar Selection (e.g., via TrueFan AI).

- API Scan: Multimodal check for Brand Safety + Suitability.

- Policy Engine: Comparison against ASCI/DPDP/Brand Guidelines.

- Decision: Auto-Approve, Escalate to Human, or Auto-Reject.

- Output: Compliant, Watermarked Video + Audit Log.

Section 17: Strategic Conclusion

In 2026, the speed of content creation must be matched by the speed of compliance. By deploying AI video content moderation tools and integrating them into an enterprise video compliance automation framework, brands can innovate without fear. Whether you are using AI content filtering in video campaigns to protect your reputation or leveraging platforms like TrueFan AI to scale your message, the goal remains the same: building trust through consistent, safe, and compliant storytelling.

Ready to secure your brand's video future?

- Request an Enterprise Demo of Studio by Truefan AI

- Download our 2026 India Compliance Whitepaper

- Explore our Video Moderation API Documentation
