AI Dubbing Accuracy Test for Hindi, Tamil, and Telugu (India, 2026): The Complete OTT-Ready Benchmark for Regional Language Quality


Key Takeaways

- The 2026 framework evaluates five pillars: lip‑sync, intelligibility, prosody, emotional authenticity, and cultural adaptation.

- Indic-specific metrics like retroflex stability and code‑switching (Hinglish/Tanglish) are now mandatory for OTT‑grade quality.

- ASR‑based WER/CER plus prosody metrics (F0 RMSE) provide objective intelligibility and naturalness scores across languages.

- Operational success requires automated QA + human‑in‑the‑loop for cultural and emotional validation at scale.

- Studio by TrueFan AI delivers OTT‑ready dubbing across 175+ languages with compliance and licensed avatars.

In the rapidly evolving digital landscape of 2026, demand for localized content has reached an unprecedented peak. A rigorous AI dubbing accuracy test for Hindi, Tamil, and Telugu is no longer a luxury for production houses; it is a technical necessity. As Indian audiences move away from subtitles toward high-fidelity dubbed audio, the industry needs a standardized OTT dubbing quality benchmark for India, so that AI-generated voices don't just sound human but feel culturally and emotionally resonant.

The stakes are higher than ever. According to the HubSpot State of Marketing Report 2026, localized video content now yields a 22.26% higher ROI than generic English-first campaigns. Furthermore, recent data from EY India’s 2025-2026 M&E Outlook reveals that over 60% of all content consumed on Indian OTT platforms is now in regional languages, with Tamil, Telugu, and Hindi leading the surge. This shift has forced a transition from experimental AI usage to operationalized, high-scale deployment. Platforms like Studio by TrueFan AI enable creators and enterprises to meet this demand by providing high-fidelity, lip-synced outputs across 175+ languages with enterprise-grade security.

1. Defining the Core: What is an AI dubbing accuracy test for Indian languages?

A 2026-ready AI dubbing accuracy test is a formal evaluation protocol designed to measure how effectively an AI system translates, voices, and synchronizes video dialogue into target Indian languages. Unlike the generic benchmarks of 2024, the 2026 framework accounts for the extreme phonetic diversity of the Indian subcontinent.

The Five Pillars of the 2026 Benchmark

A comprehensive benchmark for vernacular AI dubbing in India must evaluate five critical dimensions:

- Lip-Sync Precision: Quantifying the alignment between the generated audio and the visual visemes (lip shapes).

- Intelligibility: Using Word Error Rate (WER) and Character Error Rate (CER) to ensure the dubbed audio is clear and accurate.

- Prosody & Naturalness: Measuring the “musicality” of speech—pitch, energy, and rhythm.

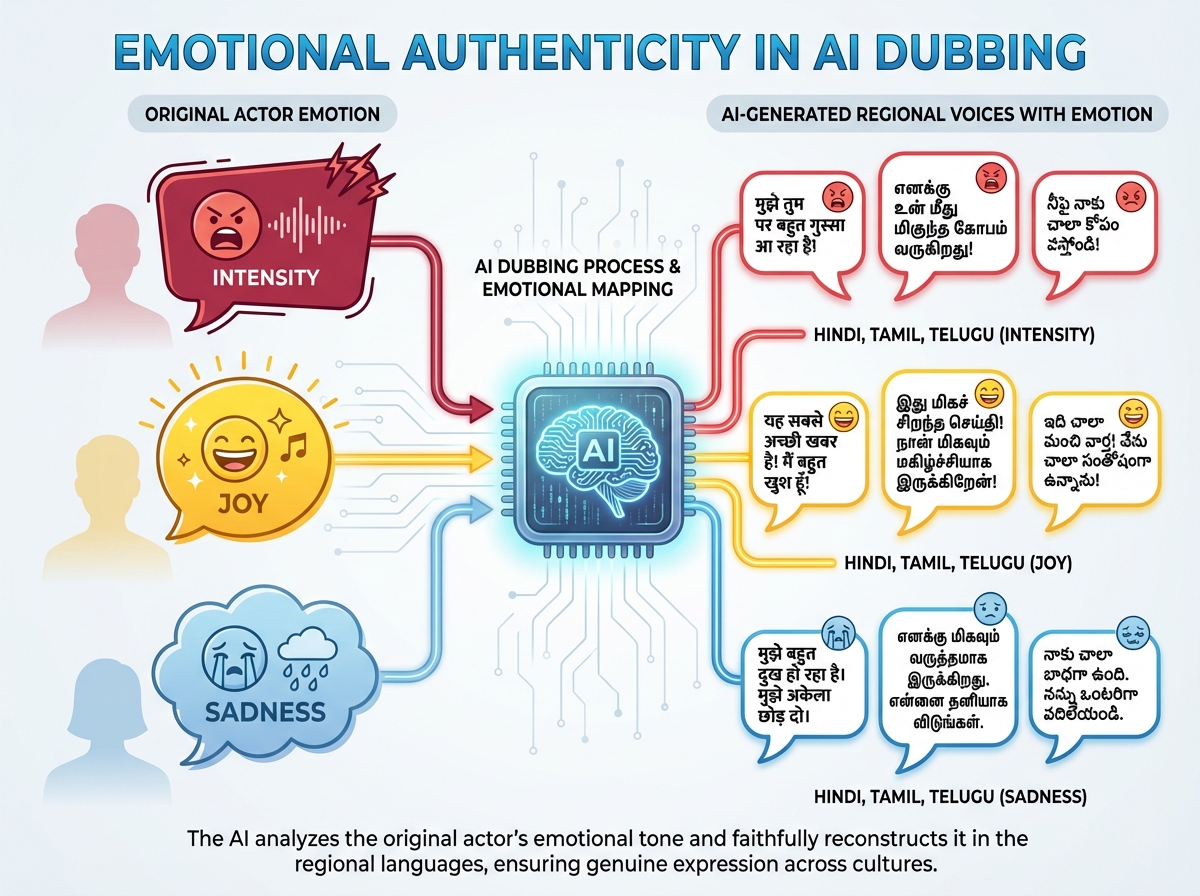

- Emotional Authenticity: Assessing whether the AI captures the sarcasm, grief, or excitement of the original performance.

- Cultural Adaptation: Ensuring honorifics (like Aap vs Tum) and idioms are localized, not just translated.

Industry leaders now rely on automated QA pipelines to maintain these standards. Studio by TrueFan AI’s 175+ language support and AI avatars provide a baseline for these metrics, ensuring that the technical “drift” between original and dubbed content remains below the 120ms threshold required for OTT broadcast.

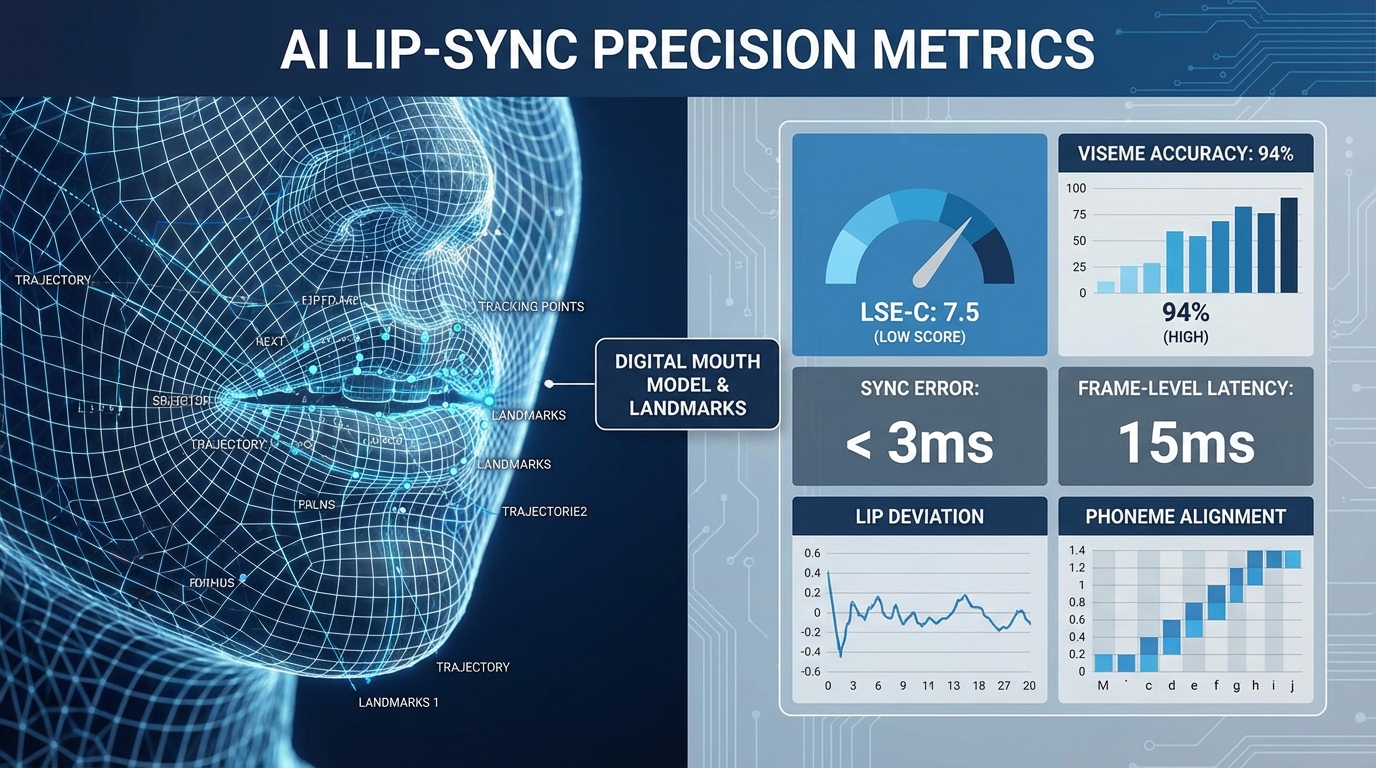

2. Lip sync accuracy benchmark India: metrics, tools, and targets

The most visible failure in AI dubbing is poor lip-sync. In 2026, lip-sync comparison tools go beyond judging simple “mouth movement.” We now measure LSE-C (Lip Sync Error - Confidence, where higher is better) and LSE-D (Lip Sync Error - Distance, where lower is better).

Technical Targets for 2026

- Viseme Accuracy: Must exceed 92% for bilabial sounds (p, b, m) which are highly visible.

- Phoneme-to-Viseme Latency: Should be <40ms to avoid the “uncanny valley” effect.

- Retroflex Stability: A common gap in many global tools is the failure to sync retroflex consonants (common in Hindi and Telugu). To earn a high Telugu lip-sync score, the AI must account for the specific tongue positioning that influences lip rounding.
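As a rough illustration of how a latency target like the 40 ms figure above could be checked, the sketch below estimates audio-visual offset by cross-correlating a mouth-opening signal with an audio energy envelope. This is a simple stand-in technique, not the SyncNet-based scoring behind LSE-C/LSE-D, and the names `best_lag`, `offset_ms`, and the 100 Hz toy signals are assumptions made for the example.

```python
def best_lag(mouth_open, audio_env, max_lag):
    """Lag (in samples) at which the audio envelope best matches mouth opening.

    A positive result means the audio trails the picture.
    """
    def score(lag):
        pairs = [
            (mouth_open[i], audio_env[i + lag])
            for i in range(len(mouth_open))
            if 0 <= i + lag < len(audio_env)
        ]
        if not pairs:
            return float("-inf")
        # Mean product over the overlapping samples for this lag.
        return sum(m * a for m, a in pairs) / len(pairs)

    return max(range(-max_lag, max_lag + 1), key=score)


def offset_ms(lag_samples, rate_hz):
    """Convert a lag in samples to milliseconds."""
    return 1000.0 * lag_samples / rate_hz


TARGET_MS = 40.0  # latency target quoted in the list above

# Hypothetical toy signals sampled at 100 Hz: audio peaks arrive two samples late.
mouth = [0.0] * 20
audio = [0.0] * 20
for peak in (4, 12):
    mouth[peak] = 1.0
    audio[peak + 2] = 1.0

lag = best_lag(mouth, audio, max_lag=5)
drift = offset_ms(lag, rate_hz=100)
within_target = abs(drift) < TARGET_MS
```

Both signals need to be sampled well above the video frame rate for this check to be meaningful: at 25 fps a single frame already spans 40 ms.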

For a deeper dive into these technical requirements, the TrueFan Video Dubbing Accuracy Metrics Guide provides a comprehensive breakdown of how to automate these checks using AI-driven landmark detection.

3. Dubbing quality metrics Indian languages: Intelligibility and Prosody

Intelligibility is the bedrock of content consumption. In our multilingual comparison, we audit the AI’s output with state-of-the-art ASR (Automatic Speech Recognition) models such as AI4Bharat’s IndicWhisper.

The Intelligibility Rubric

- WER (Word Error Rate): Target <10% for drama, <5% for news/explainers.

- CER (Character Error Rate): Target <3% for highly agglutinative languages like Tamil and Malayalam.

- Prosody (F0 RMSE): We measure the Root Mean Square Error of the fundamental frequency (pitch). If the AI's pitch contour deviates too far from the original actor's emotional arc, the “soul” of the performance is lost.
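The WER and CER targets above can be checked with a plain edit-distance calculation once an ASR transcript of the dubbed audio is available. The following is a minimal sketch: the function names, the transliterated sample sentence, and the `WER_TARGETS` dictionary layout are illustrative assumptions, and the ASR step itself is not shown.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (0 if equal)
            ))
        prev = curr
    return prev[-1]


def error_rate(ref_units, hyp_units):
    if not ref_units:
        raise ValueError("reference must not be empty")
    return edit_distance(ref_units, hyp_units) / len(ref_units)


def wer(reference, hypothesis):
    """Word Error Rate over whitespace-separated tokens."""
    return error_rate(reference.split(), hypothesis.split())


def cer(reference, hypothesis):
    """Character Error Rate over code points, ignoring spaces.

    For Indic scripts, counting grapheme clusters instead of code points
    may be more appropriate; that refinement is left out here.
    """
    return error_rate(list(reference.replace(" ", "")),
                      list(hypothesis.replace(" ", "")))


# Targets taken from the rubric above.
WER_TARGETS = {"drama": 0.10, "news": 0.05}
CER_TARGET = 0.03

reference = "mausam aaj bahut accha hai"    # script line (hypothetical)
hypothesis = "mausam aaj bahut acha hai"    # ASR transcript of the dub (hypothetical)

clip_wer = wer(reference, hypothesis)       # 1 wrong word out of 5
clip_cer = cer(reference, hypothesis)       # 1 missing character out of 22
passes_drama = clip_wer < WER_TARGETS["drama"]
```

In this toy case one misrecognized word in a five-word line already breaks the drama target, which is why short clips should be aggregated before a pass or fail decision is made.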

By 2026, the Vistaar benchmark has become the gold standard for testing these metrics across diverse Indian dialects, ensuring that a “standard” Hindi model doesn't fail when encountering a Bihari or Punjabi accent.
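The F0 RMSE metric from the rubric can be sketched as follows. The example assumes two frame-aligned pitch tracks in Hz with 0 marking unvoiced frames; pitch extraction and time alignment are out of scope. The semitone variant, which normalizes each track to the speaker's own median pitch, is an added assumption for cases where the dubbing voice sits in a different register from the original actor.

```python
import math
import statistics


def _rmse(pairs):
    if not pairs:
        raise ValueError("no frames where both tracks are voiced")
    return math.sqrt(sum((a - b) ** 2 for a, b in pairs) / len(pairs))


def f0_rmse_hz(ref_f0, dub_f0):
    """RMSE in Hz over frames that are voiced in both tracks."""
    if len(ref_f0) != len(dub_f0):
        raise ValueError("tracks must be frame-aligned")
    return _rmse([(r, d) for r, d in zip(ref_f0, dub_f0) if r > 0 and d > 0])


def to_semitones(f0):
    """Express voiced frames in semitones relative to the track's median pitch."""
    voiced = [v for v in f0 if v > 0]
    if not voiced:
        raise ValueError("track has no voiced frames")
    centre = statistics.median(voiced)
    return [12 * math.log2(v / centre) if v > 0 else None for v in f0]


def f0_rmse_semitones(ref_f0, dub_f0):
    """RMSE of the speaker-normalized contours, in semitones."""
    if len(ref_f0) != len(dub_f0):
        raise ValueError("tracks must be frame-aligned")
    ref_st, dub_st = to_semitones(ref_f0), to_semitones(dub_f0)
    return _rmse([(r, d) for r, d in zip(ref_st, dub_st)
                  if r is not None and d is not None])


# Hypothetical tracks: the dub is an octave lower but follows the same contour.
original = [0, 200, 220, 240, 0]
dubbed = [0, 100, 110, 120, 0]

raw_error = f0_rmse_hz(original, dubbed)             # large: different register
contour_error = f0_rmse_semitones(original, dubbed)  # zero: same shape
```

The contrast between the two numbers shows why the choice of scale matters: raw Hz penalizes a different voice, while the normalized contour isolates the emotional arc.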

4. Cultural adaptation and emotional accuracy: The Human-in-the-Loop

Translation is easy; localization is hard. A quality test for Hindi AI dubbing must evaluate the “Register Switch.” For instance, does the AI correctly switch from Aap (formal) to Tum (informal) as a scene's tension increases?

The Cultural Adaptation Rubric

- Honorific Integrity: Correct usage of -nga in Tamil or -garu in Telugu.

- Idiomatic Equivalence: Avoiding literal translations. For example, “It's raining cats and dogs” should become “Musaladhar barish” in Hindi, not a literal translation about animals.

- Code-Switching (Hinglish/Tanglish): According to YouTube’s 2025 Trend Report, 77% of Indian Gen Z consumers prefer content that reflects their natural code-switching habits.
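One rough, automatable proxy for the code-switching item above is the share of tokens written in each script within a dubbed line's transcript. The sketch below assumes English insertions are written in Latin script (fully romanized Hinglish would need a language-identification model instead); `token_script` and `script_shares` are hypothetical helper names, while the code point ranges are the standard Unicode blocks.

```python
# Unicode block ranges for the scripts covered in this benchmark.
SCRIPT_RANGES = {
    "devanagari": (0x0900, 0x097F),
    "tamil": (0x0B80, 0x0BFF),
    "telugu": (0x0C00, 0x0C7F),
}


def token_script(token):
    """Classify a token by the first character that belongs to a known script."""
    for ch in token:
        if ch.isascii() and ch.isalpha():
            return "latin"
        code_point = ord(ch)
        for name, (low, high) in SCRIPT_RANGES.items():
            if low <= code_point <= high:
                return name
    return None  # punctuation, digits, or an unlisted script


def script_shares(text):
    """Fraction of classified tokens per script."""
    counts = {}
    for token in text.split():
        script = token_script(token)
        if script is not None:
            counts[script] = counts.get(script, 0) + 1
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()} if total else {}


# Hypothetical Hinglish line: one English word among five Hindi tokens.
shares = script_shares("मैं आज office जा रहा हूँ")
```

Comparing these shares between the source script and the dubbed transcript gives a quick signal of whether the dub flattened natural code-switching into a single register; it does not replace a native reviewer.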

Emotional accuracy in Hindi AI dubbing is measured via Mean Opinion Scores (MOS). Human raters evaluate the “Valence” and “Arousal” of the AI voice. If an actor is crying on screen but the AI sounds like a GPS navigator, the dub fails the regional-language voice test immediately.
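A MOS is simply the mean of rater scores, but with small panels it helps to report a confidence interval alongside it. The sketch below assumes a 1-5 rating scale, a normal-approximation 95% interval, and made-up ratings for the two dimensions named above.

```python
import math
import statistics


def mos_with_ci(ratings, z=1.96):
    """Return (mean, lower, upper) using a normal-approximation interval."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("ratings must be on a 1-5 scale")
    mean = statistics.mean(ratings)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mean, mean - half_width, mean + half_width


# Hypothetical ratings from six native raters for one clip.
panel = {
    "valence": [4, 5, 4, 3, 5, 4],
    "arousal": [2, 3, 2, 2, 3, 2],
}
report = {dimension: mos_with_ci(scores) for dimension, scores in panel.items()}
```

A clip whose arousal interval sits far below the rating given to the original performance is the "GPS navigator" case described above.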

5. Regional Deep Dive: Hindi, Tamil, Telugu, Bengali, Malayalam

Each language presents unique challenges that a generic comparison of regional-language AI dubbing often misses.

Hindi: The Register Challenge

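Hindi requires a delicate balance between Shuddh (pure) Hindi and Hinglish. The benchmark also tests “schwa deletion” accuracy: the inherent vowel implied by Devanagari spelling is often dropped in speech (राम is pronounced “Ram,” not “Rama”), and a common pitfall is an AI voice that pronounces these silent vowels, especially at the end of words.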

Tamil: The Agglutination Test

Tamil video translation accuracy is often hampered by the language's agglutinative nature (stacking several suffixes onto a root to form a single long word). The 2026 test evaluates whether the AI can maintain lip-sync while pronouncing these long, complex word forms without “rushing” the audio.

Telugu: The Retroflex & Vowel Duration

The Telugu lip-sync score focuses heavily on vowel elongation. Telugu is a “vocalic” language in which almost every word ends in a vowel, so AI models must sustain the lip shape for the full duration of the vowel to remain believable.

Bengali & Malayalam: Phonetic Richness

- Bengali: Tests focus on aspiration contrasts (the difference between ‘p’ and ‘ph’).

- Malayalam: Evaluates gemination (double consonants) and the retroflex approximant ‘zh’ (ഴ), which is a high-difficulty marker for AI synthesis.

6. Platform Comparison: Best AI dubbing platform India test 2026

To provide a fair test of the best AI dubbing platforms in India, we evaluated the top contenders in the 2026 market across our five-language dataset.

| Platform | Hindi MOS (1-5) | Tamil WER (%) | Telugu Lip-Sync Score | Key Strength |
|---|---|---|---|---|
| Studio by TrueFan AI | 4.8 | 4.2% | 96% | Licensed Avatars & Enterprise Security |
| YouTube Aloud | 4.1 | 8.5% | 88% | Integration with YT Ecosystem |
| ElevenLabs Dubbing | 4.5 | 6.1% | 91% | Voice Cloning Realism |
| Sarvam Dub | 4.6 | 5.5% | 89% | Indic-native LLM Foundation |
| Dubverse | 4.2 | 7.2% | 85% | Ease of use for creators |

Solutions like Studio by TrueFan AI demonstrate ROI through their ability to handle high-volume OTT requirements while maintaining ISO 27001 and SOC 2 compliance—a critical factor for major studios. Their “Consent-First” model ensures that all digital twins used in the dubbing process are fully licensed, mitigating the legal risks associated with 2026's stricter deepfake regulations.

7. OTT Operations Playbook: From Benchmark to Production

Implementing an AI dubbing accuracy test for Hindi, Tamil, and Telugu requires a structured operational workflow.

Step-by-Step Implementation Guide

- Dataset Selection: Curate 50 clips (15-60s) across genres (Drama, News, Comedy).

- Baseline Generation: Use professional human dubs as the “Ground Truth.”

- Automated Scoring: Run IndicWhisper for WER and LSE-D for lip-sync.

- Human Audit: Native linguists score for cultural nuances and emotional “fit.”

- Remediation: Use in-browser editors to tweak timing or pronunciation for outliers.
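The automated part of these steps can be sketched as a small triage routine that takes per-clip scores and returns the outliers to send for remediation. The WER targets come from the intelligibility rubric earlier in this article and the LSE-D ceiling from the FAQ below; the `ClipScore` structure, the sample clips, and the fallback target for genres without a stated WER target (such as comedy) are assumptions for illustration.

```python
from dataclasses import dataclass

WER_TARGETS = {"drama": 0.10, "news": 0.05}  # from the intelligibility rubric
DEFAULT_WER_TARGET = 0.10                    # assumption for other genres
LSE_D_MAX = 6.0                              # "good" LSE-D ceiling from the FAQ


@dataclass
class ClipScore:
    clip_id: str
    genre: str
    duration_s: float
    wer: float
    lse_d: float


def failure_reasons(clip):
    """List the automated checks this clip fails (empty list means it passes)."""
    reasons = []
    if not 15 <= clip.duration_s <= 60:
        reasons.append("duration outside 15-60 s")
    if clip.wer >= WER_TARGETS.get(clip.genre, DEFAULT_WER_TARGET):
        reasons.append("WER above target")
    if clip.lse_d >= LSE_D_MAX:
        reasons.append("LSE-D above target")
    return reasons


def remediation_queue(clips):
    """Map clip_id to failure reasons for every clip that needs attention."""
    queue = {}
    for clip in clips:
        reasons = failure_reasons(clip)
        if reasons:
            queue[clip.clip_id] = reasons
    return queue


# Hypothetical scores for three clips from the benchmark dataset.
clips = [
    ClipScore("c1", "drama", 30, wer=0.08, lse_d=5.2),
    ClipScore("c2", "news", 45, wer=0.07, lse_d=5.0),
    ClipScore("c3", "comedy", 20, wer=0.05, lse_d=6.8),
]
queue = remediation_queue(clips)
```

Clips that pass the automated checks still go to the human audit for cultural and emotional review; the queue only prioritizes which ones need timing or pronunciation fixes first.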

For enterprises, the shift toward automated QA combined with human-in-the-loop is the only way to scale. By 2026, the volume of regional content has exceeded Hindi content volumes (as predicted by FICCI-EY via Storyboard18), making manual QA impossible at scale.

Conclusion: The Future of Vernacular Content

As we look toward the remainder of 2026, the ability to produce high-quality, localized content at scale will define the winners of the Indian streaming wars. By adopting a rigorous AI dubbing accuracy test for Hindi, Tamil, and Telugu, platforms can ensure they are not just reaching audiences, but truly connecting with them.

Ready to benchmark your content? Explore how Studio by TrueFan AI can orchestrate your multilingual strategy with production-grade precision. Visit studio.truefan.ai to start your enterprise pilot today.

Sources:

- EY India M&E Report 2025–2026

- YouTube Gen Z Trends 2025 (Times of India)

- AI4Bharat IndicWhisper

- RWS AI Dubbing 2026 Guide

- FICCI-EY Regional Content Growth (Storyboard18)

Frequently Asked Questions

What is the 2026 AI dubbing accuracy test for Hindi, Tamil, and Telugu, and how is it different from 2025-era benchmarks?

The 2026 benchmark incorporates diffusion-based lip-sync evaluators and Indic phonetic checks (e.g., retroflex stability) that earlier versions ignored. It also prioritizes natural code-switching (Hinglish/Tanglish) to meet Gen Z engagement requirements.

How do we interpret the India lip-sync accuracy benchmark chart and what is a “good” LSE-style score?

A strong LSE-C (Confidence) score is typically above 7.0, while an LSE-D (Distance) score should be below 6.0. Together, these indicate tight audio‑visual landmark correlation and imperceptible drift to the human eye.
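For readers who want to see where such numbers come from, the sketch below follows the convention used by SyncNet-style evaluators as we understand it: audio-visual embedding distances are averaged per candidate offset, LSE-D is the minimum of those averages, and LSE-C is the median minus the minimum. The distance values are invented, and producing them requires a trained sync model that is not shown here.

```python
import statistics

LSE_C_MIN = 7.0  # thresholds quoted in the answer above
LSE_D_MAX = 6.0


def lse_scores(mean_dist_per_offset):
    """Return (confidence, distance) from per-offset mean embedding distances."""
    if not mean_dist_per_offset:
        raise ValueError("need at least one offset")
    distance = min(mean_dist_per_offset)
    confidence = statistics.median(mean_dist_per_offset) - distance
    return confidence, distance


# Hypothetical distances for seven candidate offsets; the dip marks the best alignment.
distances = [13.5, 13.0, 12.8, 5.5, 13.1, 13.2, 13.6]
lse_c, lse_d = lse_scores(distances)
is_strong = lse_c > LSE_C_MIN and lse_d < LSE_D_MAX
```

A sharp dip at a single offset produces both a low distance and a high confidence; a flat curve means the model cannot find any offset where lips and audio clearly agree.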

How is emotional accuracy in Hindi AI dubbing scored and validated?

We combine Mean Opinion Scores (MOS) from native raters with Emotion Embedding Similarity. The AI’s output is compared to the original actor’s emotional vector to preserve intensity and intent.
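One common way to compare two emotion vectors is cosine similarity, sketched below. Whether any particular platform uses exactly this measure is an assumption, the three-dimensional vectors are made up for illustration, and the speech-emotion model that would produce real embeddings is not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero vector has no direction")
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


# Hypothetical emotion embeddings for the original line and its dub.
original_emotion = [0.8, 0.1, 0.6]
dubbed_emotion = [0.7, 0.2, 0.6]
similarity = cosine_similarity(original_emotion, dubbed_emotion)
```

Values close to 1 indicate that the dub points in the same emotional direction as the source; the acceptance threshold should be calibrated against the MOS ratings rather than fixed in advance.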

What defines Tamil video translation accuracy vs. intelligibility (WER) alone?

WER checks word correctness, while translation accuracy preserves meaning and register. In Tamil, incorrect honorific suffixes can sound disrespectful even if every word is technically correct.

Can I automate the entire QA process for regional dubbing?

Studio by TrueFan AI enables deep automation via APIs and webhooks. For high‑stakes OTT titles, retain human‑in‑the‑loop for cultural and emotional sign‑off.

What contributes to the Telugu lip-sync score beyond latency and viseme accuracy?

Isochrony—syllable‑timing—is vital. Telugu’s longer words demand intelligent frame or audio pacing to sustain vowel durations and maintain believable sync without sounding rushed or artificial.